1. Overview

In this tutorial, we'll venture into the realm of data modeling for event-driven architecture using Apache Kafka.

2. Setup

A Kafka cluster consists of multiple Kafka brokers that are registered with a Zookeeper cluster. To keep things simple, we'll use ready-made Docker images and docker-compose configurations published by Confluent.

First, let's download the docker-compose.yml for a 3-node Kafka cluster:

$ BASE_URL="https://raw.githubusercontent.com/confluentinc/cp-docker-images/5.3.3-post/examples/kafka-cluster"

$ curl -Os "$BASE_URL"/docker-compose.yml

Next, let's spin up the Zookeeper and Kafka broker nodes:

$ docker-compose up -d

Finally, we can verify that all the Kafka brokers are up:

$ docker-compose logs kafka-1 kafka-2 kafka-3 | grep started

kafka-1_1 | [2020-12-27 10:15:03,783] INFO [KafkaServer id=1] started (kafka.server.KafkaServer)

kafka-2_1 | [2020-12-27 10:15:04,134] INFO [KafkaServer id=2] started (kafka.server.KafkaServer)

kafka-3_1 | [2020-12-27 10:15:03,853] INFO [KafkaServer id=3] started (kafka.server.KafkaServer)

3. Event Basics

Before we take up the task of data modeling for event-driven systems, we need to understand a few concepts such as events, event-stream, producer-consumer, and topic.

3.1. Event

An event in the Kafka world is a record of something that happened in the domain world. Kafka records it as a key-value pair message along with a few other attributes, such as the timestamp, meta information, and headers.

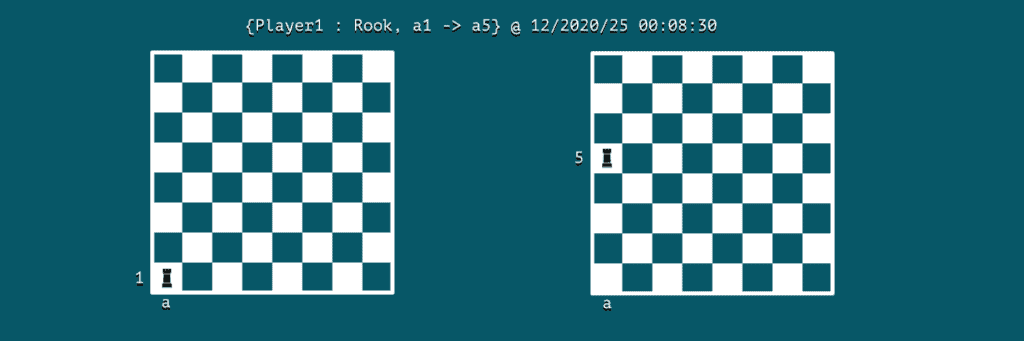

Let's assume that we're modeling a game of chess; then an event could be a move:

We can see that the event holds the key information about the actor, the action, and the time of its occurrence. In this case, Player1 is the actor, and the action is moving the rook from cell a1 to a5 at 12/2020/25 00:08:30.
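As a rough illustration of that structure, here is a minimal sketch of the move as an immutable record. The class name `ChessMoveEvent`, its field names, and the `game-id` header are assumptions made for this example and are not part of Kafka's API. The sketch also assumes the timestamp above means 25 December 2020.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Dict


@dataclass(frozen=True)
class ChessMoveEvent:
    """An immutable record of one move: who acted, what they did, and when."""
    key: str             # the actor, e.g. "Player1"
    value: str           # the action, e.g. "Rook, a1 -> a5"
    timestamp: datetime  # when the move happened
    headers: Dict[str, str] = field(default_factory=dict)  # optional metadata


move = ChessMoveEvent(
    key="Player1",
    value="Rook, a1 -> a5",
    timestamp=datetime(2020, 12, 25, 0, 8, 30),  # assumed reading of the date
    headers={"game-id": "demo"},                 # hypothetical header
)
print(move.key, "|", move.value, "|", move.timestamp.isoformat())
```

Marking the dataclass as frozen mirrors the idea that an event, once recorded, is never modified.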

3.2. Message Stream

Apache Kafka is a stream processing system that captures events as a message stream. In our game of chess, we can think of the event stream as a log of moves played by the players.

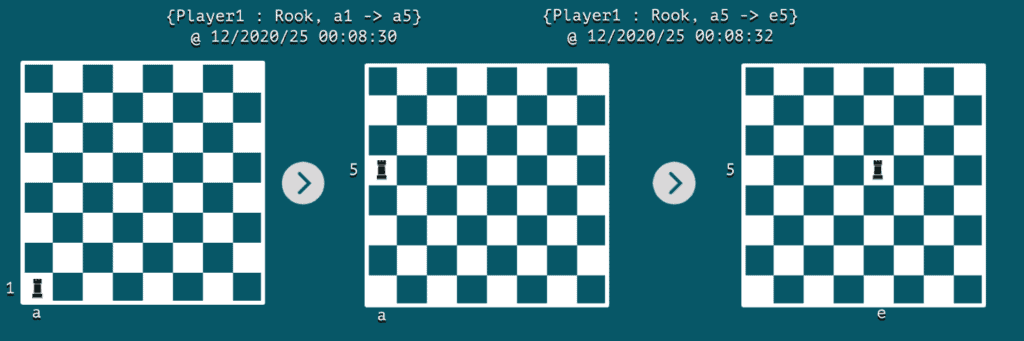

At the occurrence of each event, a snapshot of the board represents its state. It's common to store the latest static state of an object using a traditional table schema.

On the other hand, the event stream captures the dynamic change between two consecutive states in the form of events. If we replay a series of these immutable events in order, we can transition from one state to another. This relationship between an event stream and a traditional table is often known as stream-table duality.
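The replay idea can be sketched in a few lines. This is a simplified model, not Kafka code: the board is a plain dictionary from cell to piece (the "table"), each move is a tuple (the "stream"), and the helper name `replay` is hypothetical. It ignores piece colors and the rules of chess.

```python
from typing import Dict, Iterable, Tuple

Move = Tuple[str, str, str]  # (piece, from_cell, to_cell)


def replay(board: Dict[str, str], moves: Iterable[Move]) -> Dict[str, str]:
    """Apply moves in order to a copy of the board and return the new state."""
    state = dict(board)  # leave the starting snapshot untouched
    for piece, src, dst in moves:
        if state.get(src) != piece:
            raise ValueError(f"{piece} is not on {src}")
        del state[src]
        state[dst] = piece  # overwrites a captured piece, if any
    return state


start = {"a1": "Rook", "g3": "Bishop"}                   # table view
stream = [("Rook", "a1", "a5"), ("Bishop", "g3", "h4")]  # stream view

print(replay(start, stream))
```

Replaying the same stream against the same starting snapshot always yields the same final table, which is the sense in which the two views are interchangeable.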

Let's visualize an event stream on the chessboard with just two consecutive events:

4. Topics

In this section, we'll learn how to categorize messages routed through Apache Kafka.

4.1. Categorization

In a messaging system such as Apache Kafka, anything that produces events is commonly called a producer, while anything that reads and consumes those messages is called a consumer.

In a real-world scenario, each producer can generate events of different types, so it'd be a lot of wasted effort by the consumers if we expect them to filter the messages relevant to them and ignore the rest.

To solve this basic problem, Apache Kafka uses topics that are essentially groups of messages that belong together. As a result, consumers can be more productive while consuming the event messages.
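To make the benefit concrete, here is a toy in-memory stand-in for topic-based routing. It's only a sketch: the `publish` and `consume` helpers and the `chess-chat` topic are invented for illustration and don't correspond to any Kafka client API.

```python
from collections import defaultdict
from typing import DefaultDict, List

# Toy stand-in for a broker: topic name -> ordered list of messages.
topics: DefaultDict[str, List[str]] = defaultdict(list)


def publish(topic: str, message: str) -> None:
    """Append a message to the named topic."""
    topics[topic].append(message)


def consume(topic: str) -> List[str]:
    """Return every message in one topic; other topics are never read."""
    return list(topics[topic])


publish("chess-moves", "Player1: Rook, a1 -> a5")
publish("chess-chat", "Player2: good luck!")  # hypothetical second topic
publish("chess-moves", "Player2: Bishop, g3 -> h4")

print(consume("chess-moves"))
```

A consumer interested only in moves never sees the chat message, so it doesn't have to filter anything itself.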

In our chessboard example, a topic could be used to group all the moves under the chess-moves topic:

$ docker run \

--net=host --rm confluentinc/cp-kafka:5.0.0 \

kafka-topics --create --topic chess-moves \

--if-not-exists \

--zookeeper localhost:32181

Created topic "chess-moves".

4.2. Producer-Consumer

Now, let's see how producers and consumers use Kafka's topics for message processing. We'll use the kafka-console-producer and kafka-console-consumer utilities shipped with the Kafka distribution to demonstrate this.

Let's spin up a container named kafka-producer wherein we'll invoke the producer utility:

$ docker run \

--net=host \

--name=kafka-producer \

-it --rm \

confluentinc/cp-kafka:5.0.0 /bin/bash

# kafka-console-producer --broker-list localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves \

--property parse.key=true --property key.separator=:

Simultaneously, we can spin up a container named kafka-consumer wherein we'll invoke the consumer utility:

$ docker run \

--net=host \

--name=kafka-consumer \

-it --rm \

confluentinc/cp-kafka:5.0.0 /bin/bash

# kafka-console-consumer --bootstrap-server localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves --from-beginning \

--property print.key=true --property print.value=true --property key.separator=:

Now, let's record some game moves through the producer:

>{Player1 : Rook, a1->a5}

Since the consumer is active, it picks up this message with Player1 as the key:

{Player1 : Rook, a1->a5}

5. Partitions

Next, let's see how we can create further categorization of messages using partitions and boost the performance of the entire system.

5.1. Concurrency

We can divide a topic into multiple partitions and run multiple consumers that read from different partitions. Enabling such concurrency can improve the overall performance of the system.

By default, Kafka creates a single partition for a topic unless we specify otherwise at the time of topic creation. However, for a pre-existing topic, we can increase the number of partitions. Let's set the number of partitions to 3 for the chess-moves topic:

$ docker run \

--net=host \

--rm confluentinc/cp-kafka:5.0.0 \

bash -c "kafka-topics --alter --zookeeper localhost:32181 --topic chess-moves --partitions 3"

WARNING: If partitions are increased for a topic that has a key, the partition logic or ordering of the messages will be affected

Adding partitions succeeded!

5.2. Partition Key

Within a topic, Kafka processes messages across multiple partitions using a partition key. At one end, producers use it implicitly to route a message to one of the partitions. On the other end, each consumer can read messages from a specific partition.

By default, the producer computes a hash of the key and takes it modulo the number of partitions. It then sends the message to the partition identified by the result.
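The hash-then-modulus step can be sketched as follows. Note the assumption: `zlib.crc32` is a stand-in hash picked for this example, not the hash function Kafka's producer uses, so the indexes printed here won't match a real cluster. The function name `partition_for` is also hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index: hash the key bytes, then take a modulus.

    zlib.crc32 is a stand-in hash chosen for this sketch; it is NOT the hash
    Kafka's producer uses.
    """
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    return zlib.crc32(key.encode("utf-8")) % num_partitions


for key in ("Player1", "Player2"):
    # Same key, different partition counts: the resulting index may differ.
    print(key, partition_for(key, 1), partition_for(key, 3))
```

Because the partition count is part of the calculation, the same key can map to a different partition once partitions are added. That's what the warning printed by kafka-topics --alter refers to.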

Let's create new event messages with the kafka-console-producer utility, but this time we'll record moves by both the players:

# kafka-console-producer --broker-list localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves \

--property parse.key=true --property key.separator=:

>{Player1: Rook, a1 -> a5}

>{Player2: Bishop, g3 -> h4}

>{Player1: Rook, a5 -> e5}

>{Player2: Bishop, h4 -> g3}

Now, we can have two consumers, one reading from partition-1 and the other reading from partition-2:

# kafka-console-consumer --bootstrap-server localhost:19092,localhost:29092,localhost:39092 \

--topic chess-moves --from-beginning \

--property print.key=true --property print.value=true \

--property key.separator=: \

--partition 1

{Player2: Bishop, g3 -> h4}

{Player2: Bishop, h4 -> g3}

We can see that all moves by Player2 are being recorded into partition-1. In the same manner, we can check that moves by Player1 are being recorded into partition-0.
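A small simulation shows why all moves by one player end up together and in order. It's a sketch under assumptions: the partitioned log is a plain dictionary, `read_partition` is a hypothetical helper, and `zlib.crc32` again stands in for the producer's real hash, so the partition indexes won't necessarily match the ones observed above.

```python
import zlib
from collections import defaultdict
from typing import DefaultDict, List, Tuple

NUM_PARTITIONS = 3
moves = [
    ("Player1", "Rook, a1 -> a5"),
    ("Player2", "Bishop, g3 -> h4"),
    ("Player1", "Rook, a5 -> e5"),
    ("Player2", "Bishop, h4 -> g3"),
]

# partition index -> messages in arrival order
log: DefaultDict[int, List[Tuple[str, str]]] = defaultdict(list)
for key, value in moves:
    index = zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS  # stand-in hash
    log[index].append((key, value))


def read_partition(index: int) -> List[Tuple[str, str]]:
    """What a consumer pinned to one partition would see, in order."""
    return list(log[index])


for index in range(NUM_PARTITIONS):
    print(index, read_partition(index))
```

Since routing depends only on the key, every move by a given player lands in a single partition, and a consumer reading that partition sees those moves in the order they were produced.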

6. Scaling

How we conceptualize topics and partitions is crucial to horizontal scaling. On the one hand, a topic is more of a pre-defined categorization of data. On the other hand, a partition is a dynamic categorization of data that happens on the fly.

Further, there are practical limits on how many partitions we can configure within a topic. That's because each partition is mapped to a directory in the file system of the broker node. When we increase the number of partitions, we also increase the number of open file handles on our operating system.

As a rule of thumb, experts at Confluent recommend limiting the number of partitions per broker to 100 x b x r, where b is the number of brokers in a Kafka cluster, and r is the replication factor.
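As a quick worked example of that rule of thumb, the snippet below plugs in numbers. The value b = 3 matches the 3-node cluster used in this tutorial, while r = 2 is a hypothetical replication factor chosen only for the arithmetic. The function name is likewise made up for this sketch.

```python
def partition_limit_per_broker(brokers: int, replication_factor: int) -> int:
    """Rule-of-thumb ceiling quoted above: 100 x b x r."""
    if brokers < 1 or replication_factor < 1:
        raise ValueError("brokers and replication_factor must be at least 1")
    return 100 * brokers * replication_factor


# b = 3 brokers (this tutorial's cluster), r = 2 (assumed for illustration)
print(partition_limit_per_broker(brokers=3, replication_factor=2))  # 600
```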

7. Conclusion

In this article, we used a Docker environment to cover the fundamentals of data modeling for a system that uses Apache Kafka for message processing. With a basic understanding of events, topics, and partitions, we're now ready to conceptualize event streaming and further use this architecture paradigm.

The post Data Modeling with Apache Kafka first appeared on Baeldung.