1. Overview

The JVM interprets and executes bytecode at runtime. In addition, it uses just-in-time (JIT) compilation to boost performance.

In earlier versions of Java, we had to manually choose between the two types of JIT compilers available in the HotSpot JVM. One is optimized for faster application start-up, while the other achieves better overall performance. Java 7 introduced tiered compilation in order to achieve the best of both worlds.

In this tutorial, we'll look at the client and server JIT compilers. We'll review tiered compilation and its five compilation levels. Finally, we'll see how method compilation works by tracking the compilation logs.

2. JIT Compilers

A JIT compiler compiles bytecode to native code for frequently executed sections. These sections are called hotspots, hence the name HotSpot JVM. As a result, Java can run with similar performance to a fully compiled language. Let's look at the two types of JIT compilers available in the JVM.

2.1. C1 – Client Compiler

The client compiler, also called C1, is a JIT compiler optimized for faster start-up time. It tries to optimize and compile the code as soon as possible.

Historically, we used C1 for short-lived applications and applications where start-up time was an important non-functional requirement. Prior to Java 8, we had to specify the -client flag to use the C1 compiler. However, if we use Java 8 or higher, this flag will have no effect.

2.2. C2 – Server Compiler

The server compiler, also called C2, is a JIT compiler optimized for better overall performance. C2 observes and analyzes the code over a longer period of time compared to C1. This allows C2 to make better optimizations in the compiled code.

Historically, we used C2 for long-running server-side applications. Prior to Java 8, we had to specify the -server flag to use the C2 compiler. However, this flag will have no effect in Java 8 or higher.

We should note that the Graal JIT compiler has also been available since Java 10, as an alternative to C2. Unlike C2, Graal can run in both just-in-time and ahead-of-time compilation modes to produce native code.
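
To check which JIT compiler a running JVM reports, we can query the standard CompilationMXBean. The following is a minimal sketch. The class name JitInfo is our own, and the reported compiler name depends on the JVM vendor, version, and flags:

```java
import java.lang.management.CompilationMXBean;
import java.lang.management.ManagementFactory;

public class JitInfo {

    // Builds a one-line description of the JIT compiler reported by the running JVM.
    static String describeJit() {
        CompilationMXBean jit = ManagementFactory.getCompilationMXBean();
        if (jit == null) {
            // The platform API returns null when the JVM has no compilation system.
            return "no JIT compiler reported";
        }
        StringBuilder description = new StringBuilder("JIT compiler: ").append(jit.getName());
        if (jit.isCompilationTimeMonitoringSupported()) {
            // Approximate accumulated time spent compiling, in milliseconds.
            description.append(", total compilation time: ")
                    .append(jit.getTotalCompilationTime())
                    .append(" ms");
        }
        return description.toString();
    }

    public static void main(String[] args) {
        System.out.println(describeJit());
    }
}
```

Running the same class with different JVM flags is a quick way to see how the reported compiler changes.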

3. Tiered Compilation

The C2 compiler often takes more time and consumes more memory to compile the same methods. However, it generates better-optimized native code than that produced by C1.

The tiered compilation concept was first introduced in Java 7. Its goal was to use a mix of C1 and C2 compilers in order to achieve both fast startup and good long-term performance.

3.1. Best of Both Worlds

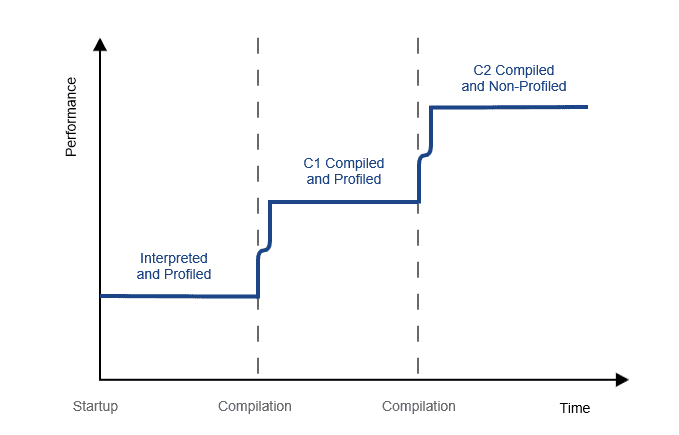

On application startup, the JVM initially interprets all bytecode and collects profiling information about it. The JIT compiler then makes use of the collected profiling information to find hotspots.

First, the JIT compiler compiles the frequently executed sections of code with C1 to quickly reach native code performance. Later, C2 kicks in when more profiling information is available. C2 recompiles the code with more aggressive and time-consuming optimizations to boost performance:

In summary, C1 improves performance faster, while C2 makes better performance improvements based on more information about hotspots.

3.2. Accurate Profiling

An additional benefit of tiered compilation is more accurate profiling information. Before tiered compilation, the JVM collected profiling information only during interpretation.

With tiered compilation enabled, the JVM also collects profiling information on the C1 compiled code. Since the compiled code achieves better performance, it allows the JVM to collect more profiling samples.

3.3. Code Cache

The code cache is a memory area where the JVM stores all bytecode compiled into native code. Tiered compilation increased the amount of code that needs to be cached by up to four times.

Since Java 9, the JVM segments the code cache into three areas:

- The non-method segment – JVM-internal code (around 5 MB, configurable via -XX:NonNMethodCodeHeapSize)

- The profiled-code segment – C1 compiled code with potentially short lifetimes (around 122 MB by default, configurable via -XX:ProfiledCodeHeapSize)

- The non-profiled segment – C2 compiled code with potentially long lifetimes (similarly 122 MB by default, configurable via -XX:NonProfiledCodeHeapSize)

A segmented code cache improves code locality and reduces memory fragmentation. Thus, it improves overall performance.
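
We can inspect the code cache from inside a running application through the standard memory pool MXBeans. The sketch below lists the non-heap pools whose name contains "Code". The class name CodeCachePools and that name filter are our own assumptions, because pool names are JVM-specific and may differ between versions:

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;
import java.util.ArrayList;
import java.util.List;

public class CodeCachePools {

    // Returns one "name: used=..., max=..." line per memory pool that looks like a code cache area.
    static List<String> describeCodePools() {
        List<String> lines = new ArrayList<>();
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            // Assumption: code cache pools are non-heap and have "Code" in their name.
            boolean looksLikeCodeCache = pool.getType() == MemoryType.NON_HEAP
                    && pool.getName().contains("Code");
            MemoryUsage usage = pool.getUsage();
            if (!looksLikeCodeCache || usage == null) {
                continue;
            }
            // getMax() returns -1 when the maximum size is undefined.
            String max = usage.getMax() < 0 ? "undefined" : (usage.getMax() / 1024) + " KB";
            lines.add(pool.getName() + ": used=" + (usage.getUsed() / 1024) + " KB, max=" + max);
        }
        return lines;
    }

    public static void main(String[] args) {
        describeCodePools().forEach(System.out::println);
    }
}
```

On a JVM with a segmented code cache, we'd expect one line per segment, while an unsegmented cache should show up as a single pool.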

3.4. Deoptimization

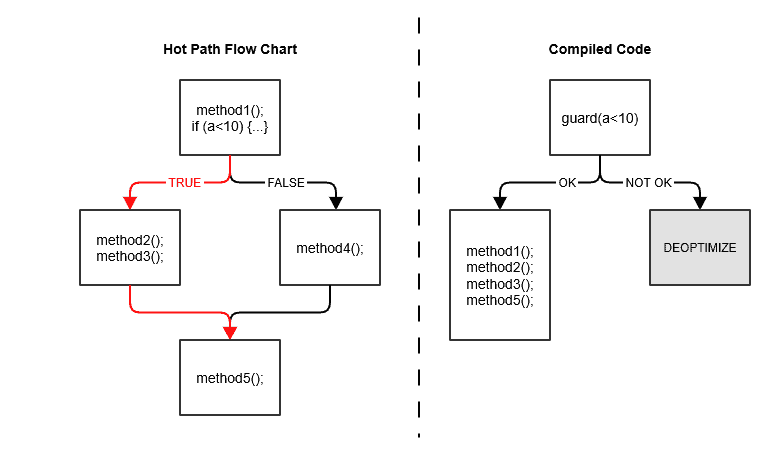

Even though C2 compiled code is highly optimized and long-lived, it can be deoptimized. As a result, the JVM would temporarily roll back to interpretation.

Deoptimization happens when the compiler’s optimistic assumptions are proven wrong — for example, when profile information does not match method behavior:

In our example, once the hot path changes, the JVM deoptimizes the compiled and inlined code.
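
A dependency-free way to provoke this situation is to feed a hot call site one implementation for a while and then switch to another. The following sketch uses hypothetical Shape, Square, and Circle types of our own. Whether a deoptimization actually shows up depends on the JVM version and flags, so we can only observe it by running with -XX:+PrintCompilation:

```java
public class DeoptDemo {

    interface Shape {
        double area();
    }

    static final class Square implements Shape {
        private final double side;

        Square(double side) {
            this.side = side;
        }

        @Override
        public double area() {
            return side * side;
        }
    }

    static final class Circle implements Shape {
        private final double radius;

        Circle(double radius) {
            this.radius = radius;
        }

        @Override
        public double area() {
            return Math.PI * radius * radius;
        }
    }

    // Hot method: its call site only ever sees Square during the first half of the run.
    static double areaOf(Shape shape) {
        return shape.area();
    }

    // Sums the areas, switching the implementation halfway through the loop.
    static double run(int iterations) {
        double sum = 0;
        for (int i = 0; i < iterations; i++) {
            Shape shape = (i < iterations / 2) ? new Square(1) : new Circle(1);
            sum += areaOf(shape);
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(run(1_000_000));
    }
}
```

The idea is that the profile gathered in the first half suggests a single receiver type, which the second half then contradicts.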

4. Compilation Levels

Even though the JVM works with only one interpreter and two JIT compilers, there are five possible levels of compilation. The reason behind this is that the C1 compiler can operate on three different levels. The difference between those three levels is in the amount of profiling done.

4.1. Level 0 – Interpreted Code

Initially, the JVM interprets all Java code. During this initial phase, performance is usually worse than that of compiled languages.

However, the JIT compiler kicks in after the warmup phase and compiles the hot code at runtime. The JIT compiler makes use of the profiling information collected on this level to perform optimizations.

4.2. Level 1 – Simple C1 Compiled Code

On this level, the JVM compiles the code using the C1 compiler, but without collecting any profiling information. The JVM uses level 1 for methods that are considered trivial.

Due to low method complexity, the C2 compilation wouldn't make it faster. Thus, the JVM concludes that there is no point in collecting profiling information for code that cannot be optimized further.

4.3. Level 2 – Limited C1 Compiled Code

On level 2, the JVM compiles the code using the C1 compiler with light profiling. The JVM uses this level when the C2 queue is full. The goal is to compile the code as soon as possible to improve performance.

Later, the JVM recompiles the code on level 3, using full profiling. Finally, once the C2 queue is less busy, the JVM recompiles it on level 4.

4.4. Level 3 – Full C1 Compiled Code

On level 3, the JVM compiles the code using the C1 compiler with full profiling. Level 3 is part of the default compilation path. Thus, the JVM uses it in all cases except for trivial methods or when compiler queues are full.

The most common scenario in JIT compilation is that the interpreted code jumps directly from level 0 to level 3.

4.5. Level 4 – C2 Compiled Code

On this level, the JVM compiles the code using the C2 compiler for maximum long-term performance. Level 4 is also a part of the default compilation path. The JVM uses this level to compile all methods except trivial ones.

Given that level 4 code is considered fully optimized, the JVM stops collecting profiling information. However, it may decide to deoptimize the code and send it back to level 0.
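
To keep the five levels apart, we can summarize them as a small lookup table. The enum below is purely illustrative. Its names are our own and don't correspond to any JVM API:

```java
public class CompilationLevels {

    // Descriptive model of the five levels discussed in this section.
    enum Level {
        INTERPRETED(0, "interpreter", "collects profiling information"),
        SIMPLE_C1(1, "C1", "no profiling"),
        LIMITED_C1(2, "C1", "light profiling"),
        FULL_C1(3, "C1", "full profiling"),
        C2(4, "C2", "no profiling");

        final int number;
        final String executedBy;
        final String profiling;

        Level(int number, String executedBy, String profiling) {
            this.number = number;
            this.executedBy = executedBy;
            this.profiling = profiling;
        }

        // Looks up a level by the number that appears in the compilation logs.
        static Level of(int number) {
            for (Level level : values()) {
                if (level.number == number) {
                    return level;
                }
            }
            throw new IllegalArgumentException("Unknown compilation level: " + number);
        }
    }

    public static void main(String[] args) {
        for (Level level : Level.values()) {
            System.out.println(level.number + " " + level.executedBy + " (" + level.profiling + ")");
        }
    }
}
```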

5. Compilation Parameters

Tiered compilation has been enabled by default since Java 8. It's highly recommended to use it unless there's a strong reason to disable it.

5.1. Disabling Tiered Compilation

We may disable tiered compilation by setting the -XX:-TieredCompilation flag. When we set this flag, the JVM will not transition between compilation levels. As a result, we'll need to select which JIT compiler to use: C1 or C2.

Unless explicitly specified, the JVM decides which JIT compiler to use based on our CPU. For multi-core processors or 64-bit VMs, the JVM will select C2. In order to disable C2 and only use C1 with no profiling overhead, we can apply the -XX:TieredStopAtLevel=1 parameter.

To completely disable both JIT compilers and run everything using the interpreter, we can apply the -Xint flag. However, we should note that disabling JIT compilers will have a negative impact on performance.
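
To double-check which of these flags a running JVM was actually started with, we can read its input arguments. The following is a minimal sketch in which the class name JitFlags and the filter are our own. The java.vm.info system property is HotSpot-specific and may be missing on other JVMs:

```java
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

public class JitFlags {

    // Keeps only the startup arguments discussed in this section.
    static List<String> compilationFlags(List<String> jvmArguments) {
        return jvmArguments.stream()
                .filter(arg -> arg.equals("-Xint")
                        || arg.contains("TieredCompilation")
                        || arg.startsWith("-XX:TieredStopAtLevel="))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> jvmArguments = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("Compilation flags: " + compilationFlags(jvmArguments));
        // On HotSpot, this property describes the execution mode of the VM.
        System.out.println("VM info: " + System.getProperty("java.vm.info"));
    }
}
```

Note that this only reports flags passed explicitly, not the defaults the JVM picked on its own.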

5.2. Setting Thresholds for Levels

A compile threshold is the number of method invocations before the code gets compiled. In the case of tiered compilation, we can set these thresholds for compilation levels 2-4. For example, we can set -XX:Tier4CompileThreshold=10000.

In order to check the default thresholds used on a specific Java version, we can run Java using the -XX:+PrintFlagsFinal flag:

    java -XX:+PrintFlagsFinal -version | grep CompileThreshold

    intx CompileThreshold = 10000
    intx Tier2CompileThreshold = 0
    intx Tier3CompileThreshold = 2000
    intx Tier4CompileThreshold = 15000

We should note that the JVM doesn't use the generic CompileThreshold parameter when tiered compilation is enabled.
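
Alternatively, we can read the same values programmatically. The sketch below assumes a HotSpot-based JVM, since it relies on the com.sun.management.HotSpotDiagnosticMXBean interface. The class name TierThresholds and the "n/a" fallback are our own choices:

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

public class TierThresholds {

    // Returns the current value of a HotSpot flag, or "n/a" if it can't be read.
    static String flagValue(String name) {
        try {
            HotSpotDiagnosticMXBean diagnostics =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            return diagnostics.getVMOption(name).getValue();
        } catch (RuntimeException e) {
            // Thrown, for example, when the flag doesn't exist on this JVM.
            return "n/a";
        }
    }

    public static void main(String[] args) {
        for (String flag : new String[] {
                "Tier2CompileThreshold", "Tier3CompileThreshold", "Tier4CompileThreshold"}) {
            System.out.println(flag + " = " + flagValue(flag));
        }
    }
}
```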

6. Method Compilation

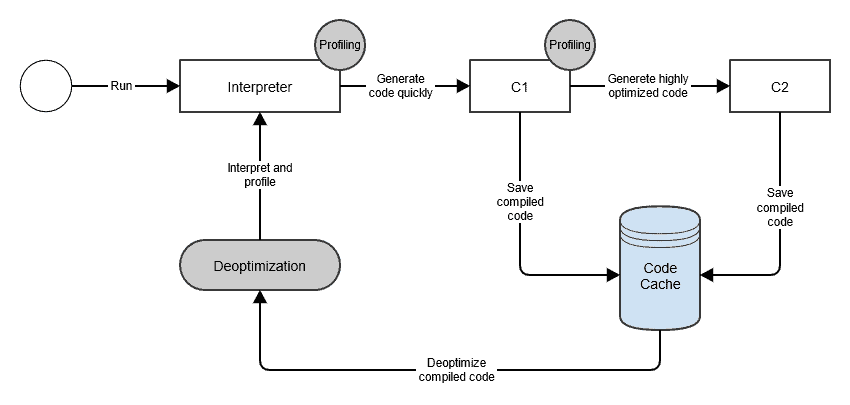

Let's now take a look at a method compilation life-cycle:

In summary, the JVM initially interprets a method until its invocations reach the Tier3CompileThreshold. Then, it compiles the method using the C1 compiler while profiling information continues to be collected. Finally, the JVM compiles the method using the C2 compiler when its invocations reach the Tier4CompileThreshold. Eventually, the JVM may decide to deoptimize the C2 compiled code. That means that the complete process will repeat.
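
As a rough mental model, we can express this life-cycle as a function of the invocation count. This is a deliberately simplified sketch with names of our own. The real compilation policy also takes loop iterations, compiler queue load, and method complexity into account, so it should not be read as the JVM's actual algorithm:

```java
public class TierModel {

    // Default values taken from the PrintFlagsFinal output shown earlier.
    static final long TIER3_COMPILE_THRESHOLD = 2_000;
    static final long TIER4_COMPILE_THRESHOLD = 15_000;

    // Simplified: maps an invocation count to the level we'd expect on the default path.
    static int expectedLevel(long invocations) {
        if (invocations >= TIER4_COMPILE_THRESHOLD) {
            return 4; // compiled by C2
        }
        if (invocations >= TIER3_COMPILE_THRESHOLD) {
            return 3; // compiled by C1 with full profiling
        }
        return 0; // still interpreted
    }

    public static void main(String[] args) {
        for (long invocations : new long[] {100, 2_000, 15_000}) {
            System.out.println(invocations + " invocations -> level " + expectedLevel(invocations));
        }
    }
}
```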

6.1. Compilation Logs

By default, JIT compilation logs are disabled. To enable them, we can set the -XX:+PrintCompilation flag. The compilation logs are formatted as:

- Timestamp – In milliseconds since application start-up

- Compile ID – Incremental ID for each compiled method

- Attributes – The state of the compilation with five possible values:

  - % – On-stack replacement occurred

  - s – The method is synchronized

  - ! – The method contains an exception handler

  - b – Compilation occurred in blocking mode

  - n – Compilation transformed a wrapper to a native method

- Compilation level – Between 0 and 4

- Method name

- Bytecode size

- Deoptimization indicator – With two possible values:

  - Made not entrant – Standard C1 deoptimization or the compiler’s optimistic assumptions proven wrong

  - Made zombie – A cleanup mechanism for the garbage collector to free space from the code cache
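
To make the format more tangible, here's a small parser for a single log line. It's a sketch under the assumption that fields appear in exactly the order listed above and are separated by whitespace. The class name CompilationLogLine and the regular expression are our own, and lines in other shapes (for example, native method wrappers) are simply skipped:

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class CompilationLogLine {

    // Assumed layout: timestamp, compile ID, optional attributes, level, method,
    // optional "@ bci" for on-stack replacement, "(N bytes)", optional trailing note.
    private static final Pattern LINE = Pattern.compile(
            "^\\s*(\\d+)\\s+(\\d+)\\s+([%s!bn ]*?)\\s*(\\d)\\s+(\\S+)"
            + "(?:\\s+@\\s+\\d+)?\\s+\\((\\d+) bytes\\)\\s*(.*)$");

    final long timestampMs;
    final int compileId;
    final String attributes;
    final int level;
    final String method;
    final int bytecodeSize;
    final String note;

    private CompilationLogLine(Matcher m) {
        timestampMs = Long.parseLong(m.group(1));
        compileId = Integer.parseInt(m.group(2));
        attributes = m.group(3).replace(" ", "");
        level = Integer.parseInt(m.group(4));
        method = m.group(5);
        bytecodeSize = Integer.parseInt(m.group(6));
        note = m.group(7).trim();
    }

    // Returns an empty Optional for lines that don't match the assumed layout.
    static Optional<CompilationLogLine> parse(String line) {
        Matcher m = LINE.matcher(line);
        return m.matches() ? Optional.of(new CompilationLogLine(m)) : Optional.empty();
    }

    public static void main(String[] args) {
        String sample = "1015 834 % 4 com.baeldung.tieredcompilation.TieredCompilation::main"
                + " @ 2 (58 bytes) made not entrant";
        parse(sample).ifPresent(l -> System.out.println(
                l.method + " level=" + l.level + " attrs=" + l.attributes + " note=" + l.note));
    }
}
```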

6.2. An Example

Let's demonstrate the method compilation life-cycle on a simple example. First, we'll create a class that implements a JSON formatter:

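    import com.fasterxml.jackson.core.JsonProcessingException;
    import com.fasterxml.jackson.databind.json.JsonMapper;

    public class JsonFormatter implements Formatter {

        // A single mapper instance is reused across calls
        private static final JsonMapper mapper = new JsonMapper();

        @Override
        public <T> String format(T object) throws JsonProcessingException {
            return mapper.writeValueAsString(object);
        }
    }

Next, we'll create a class that implements the same interface, but implements an XML formatter: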

    import com.fasterxml.jackson.core.JsonProcessingException;
    import com.fasterxml.jackson.dataformat.xml.XmlMapper;

    public class XmlFormatter implements Formatter {

        // A single mapper instance is reused across calls
        private static final XmlMapper mapper = new XmlMapper();

        @Override
        public <T> String format(T object) throws JsonProcessingException {
            return mapper.writeValueAsString(object);
        }
    }

Now, we'll write a method that uses the two different formatter implementations. In the first half of the loop, we'll use the JSON implementation and then switch to the XML one for the rest:

    public class TieredCompilation {

        public static void main(String[] args) throws Exception {
            for (int i = 0; i < 1_000_000; i++) {
                // JSON formatter for the first half of the iterations, XML for the rest
                Formatter formatter;
                if (i < 500_000) {
                    formatter = new JsonFormatter();
                } else {
                    formatter = new XmlFormatter();
                }
                formatter.format(new Article("Tiered Compilation in JVM", "Baeldung"));
            }
        }
    }

Finally, we'll set the -XX:+PrintCompilation flag, run the main method, and observe the compilation logs.

6.3. Review Logs

Let's focus on log output for our three custom classes and their methods.

The first two log entries show that the JVM compiled the main method and the JSON implementation of the format method on level 3. Therefore, both methods were compiled by the C1 compiler. The C1 compiled code replaced the initially interpreted version:

    567 714 3 com.baeldung.tieredcompilation.JsonFormatter::format (8 bytes)
    687 832 % 3 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes)

Roughly a hundred milliseconds later, the JVM compiled both methods on level 4. Hence, the C2 compiled versions replaced the previous versions compiled with C1:

    659 800 4 com.baeldung.tieredcompilation.JsonFormatter::format (8 bytes)
    807 834 % 4 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes)

Just a few milliseconds later, we see our first example of deoptimization. Here, the JVM marked the C1 compiled versions as obsolete (not entrant):

    812 714 3 com.baeldung.tieredcompilation.JsonFormatter::format (8 bytes) made not entrant
    838 832 % 3 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes) made not entrant

After a while, we'll notice another example of deoptimization. This log entry is interesting because the JVM marked the fully optimized C2 compiled versions as obsolete (not entrant). That means the JVM rolled back the fully optimized code when it detected that it wasn't valid anymore:

    1015 834 % 4 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes) made not entrant
    1018 800 4 com.baeldung.tieredcompilation.JsonFormatter::format (8 bytes) made not entrant

Next, we'll see the XML implementation of the format method for the first time. The JVM compiled it on level 3, together with the main method:

    1160 1073 3 com.baeldung.tieredcompilation.XmlFormatter::format (8 bytes)
    1202 1141 % 3 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes)

A few hundred milliseconds later, the JVM compiled both methods on level 4. However, this time, it's the XML implementation that was used by the main method:

    1341 1171 4 com.baeldung.tieredcompilation.XmlFormatter::format (8 bytes)
    1505 1213 % 4 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes)

Same as before, a few milliseconds later, the JVM marked the C1 compiled versions as obsolete (not entrant):

    1492 1073 3 com.baeldung.tieredcompilation.XmlFormatter::format (8 bytes) made not entrant
    1508 1141 % 3 com.baeldung.tieredcompilation.TieredCompilation::main @ 2 (58 bytes) made not entrant

The JVM continued to use the level 4 compiled methods until the end of our program.

7. Conclusion

In this article, we explored the tiered compilation concept in the JVM. We reviewed the two types of JIT compilers and how tiered compilation uses both of them to achieve the best results. We saw five levels of compilation and learned how to control them using JVM parameters.

In the examples, we explored the complete method compilation life-cycle by observing the compilation logs.

As always, the source code is available over on GitHub.

The post Tiered Compilation in JVM first appeared on Baeldung.