1. Overview

AWS Lambda allows us to create lightweight applications that can be deployed and scaled easily. Though we can use frameworks like Spring Cloud Function, for performance reasons, we usually use as little framework code as possible.

Sometimes we need to access a relational database from a Lambda. This is where Hibernate and JPA can be very useful. But how do we add Hibernate to our Lambda without Spring?

In this tutorial, we'll look at the challenges of using any RDBMS within a Lambda, and how and when Hibernate can be useful. Our example will use the Serverless Application Model to build a REST interface to our data.

We'll look at how to test everything on our local machine using Docker and the AWS SAM CLI.

2. Challenges Using RDBMS and Hibernate in Lambdas

Lambda code needs to be as small as possible to speed up cold starts. Also, a Lambda should be able to do its job in milliseconds. However, using a relational database can involve a lot of framework code and can run more slowly.

In cloud-native applications, we try to design using cloud-native technologies. Serverless databases like DynamoDB can be a better fit for Lambdas. However, the need for a relational database may come from some other priority within our project.

2.1. Using an RDBMS From a Lambda

Lambdas run for a small amount of time and then their container is paused. The container may be reused for a future invocation, or it may be disposed of by the AWS runtime if no longer needed. This means that any resources the container claims must be managed carefully within the lifetime of a single invocation.

Specifically, we cannot rely on conventional connection pooling for our database, as any connections opened could potentially stay open without being safely disposed of. We can use connection pools during the invocation, but we have to create the connection pool each time. Also, we need to shut down all connections and release all resources as our function ends.

This means that using a Lambda with a database can cause connection problems. A sudden upscale of our Lambda can consume too many connections. Although the Lambda may release connections straight away, we still rely on the database being able to prepare them for the next Lambda invocation. Therefore, it's often a good idea to use a maximum concurrency limit on any Lambda that uses a relational database.
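To make the sizing concrete, here's a rough sketch of the arithmetic behind choosing a concurrency limit. The class name, parameters, and figures are hypothetical; the real numbers depend on how the database server is configured and on what else connects to it:

```java
// Rough sizing sketch: how many concurrent Lambda instances can the database tolerate?
final class ConnectionBudget {

    private ConnectionBudget() {
    }

    /**
     * @param dbMaxConnections  connection limit configured on the database server
     * @param reservedForOthers connections held back for admin tools and other services
     * @param poolSizePerLambda maximum connections a single Lambda instance may open
     * @return the largest concurrency limit that cannot exhaust the database
     */
    static int maxLambdaConcurrency(int dbMaxConnections, int reservedForOthers, int poolSizePerLambda) {
        if (poolSizePerLambda <= 0) {
            throw new IllegalArgumentException("poolSizePerLambda must be positive");
        }
        int available = Math.max(0, dbMaxConnections - reservedForOthers);
        // integer division rounds down, which is the safe direction here
        return available / poolSizePerLambda;
    }
}
```

For instance, with a server limit of 100 connections, 10 held back for other clients, and up to 2 connections per Lambda instance, maxLambdaConcurrency(100, 10, 2) gives 45 as an upper bound for the concurrency limit.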

In some projects, Lambda is not the best choice for connecting to an RDBMS, and a traditional Spring Data service, with a connection pool, perhaps running in EC2 or ECS, may be a better solution.

2.2. The Case for Hibernate

A good way to determine if we need Hibernate is to ask what sort of code we'd have to write without it.

If not using Hibernate would cause us to have to code complex joins or lots of boilerplate mapping between fields and columns, then from a coding perspective, Hibernate is a good solution. If our application does not experience a high load or the need for low latency, then the overhead of Hibernate may not be an issue.

2.3. Hibernate Is a Heavyweight Technology

However, we also need to consider the cost of using Hibernate in a Lambda.

The Hibernate jar file is 7 MB in size. Hibernate takes time at start-up to inspect annotations and create its ORM capability. This is enormously powerful, but for a Lambda, it can be overkill. As Lambdas are usually written to perform small tasks, the overhead of Hibernate may not be worth the benefits.

It may be easier to use JDBC directly. Alternatively, a lightweight ORM-like framework such as JDBI may provide a good abstraction over queries, without too much overhead.

3. An Example Application

In this tutorial, we'll build a tracking application for a low-volume shipping company. Let's imagine they collect large items from customers to create a Consignment. Then, wherever that consignment travels, it's checked in with a timestamp, so the customer can monitor it. Each consignment has a source and destination, for which we'll use what3words.com as our geolocation service.

Let's also imagine that they're using mobile devices with bad connections and retries. Therefore, after a consignment is created, the rest of the information about it can arrive in any order. This complexity, along with needing two lists for each consignment – the items and the check-ins – is a good reason to use Hibernate.

3.1. API Design

We'll create a REST API with the following methods:

- POST /consignment – create a new consignment, returning the ID, and supplying the source and destination; must be done before any other operations

- POST /consignment/{id}/item – add an item to the consignment; always adds to the end of the list

- POST /consignment/{id}/checkin – check a consignment in at any location along the way, supplying the location and a timestamp; will always be maintained in the database in order of timestamp

- GET /consignment/{id} – get the full history of a consignment, including whether it has reached its destination

3.2. Lambda Design

We'll use a single Lambda function to provide this REST API with the Serverless Application Model to define it. This means our single Lambda handler function will need to be able to satisfy all of the above requests.

To make it quick and easy to test, without the overhead of deploying to AWS, we'll test everything on our development machines.

4. Creating the Lambda

Let's set up a fresh Lambda to satisfy our API, but without implementing its data access layer yet.

4.1. Prerequisites

First, we need to install Docker if we do not have it already. We'll need it to host our test database, and it's used by the AWS SAM CLI to simulate the Lambda runtime.

We can test whether we have Docker:

$ docker --version

Docker version 19.03.12, build 48a66213fe

Next, we need to install the AWS SAM CLI and then test it:

$ sam --version

SAM CLI, version 1.1.0

Now we're ready to create our Lambda.

4.2. Creating the SAM Template

The SAM CLI provides us with a way of creating a new Lambda function:

$ sam init

This will prompt us for the settings of the new project. Let's choose the following options:

1 - AWS Quick Start Templates

13 - Java 8

1 - maven

Project name - shipping-tracker

1 - Hello World Example: Maven

We should note that these option numbers may vary with later versions of the SAM tooling.

Now, there should be a new directory called shipping-tracker in which there's a stub application. If we look at the contents of its template.yaml file, we'll find a function called HelloWorldFunction with a simple REST API:

Events:
  HelloWorld:
    Type: Api
    Properties:
      Path: /hello
      Method: get

By default, this satisfies a basic GET request on /hello. We should quickly test that everything is working, by using sam to build and test it:

$ sam build

... lots of maven output

$ sam local start-api

Then we can test the hello world API using curl:

$ curl localhost:3000/hello

{ "message": "hello world", "location": "192.168.1.1" }

After that, let's stop sam running its API listener by using CTRL+C to abort the program.

Now that we have an empty Java 8 Lambda, we need to customize it to become our API.

4.3. Creating our API

To create our API, we need to add our own paths to the Events section of the template.yaml file:

CreateConsignment:
  Type: Api
  Properties:
    Path: /consignment
    Method: post
AddItem:
  Type: Api
  Properties:
    Path: /consignment/{id}/item
    Method: post
CheckIn:
  Type: Api
  Properties:
    Path: /consignment/{id}/checkin
    Method: post
ViewConsignment:
  Type: Api
  Properties:
    Path: /consignment/{id}
    Method: get

Let's also rename the function we're calling from HelloWorldFunction to ShippingFunction:

Resources:
  ShippingFunction:
    Type: AWS::Serverless::Function

Next, we'll rename the directory it's in to ShippingFunction and change the Java package from helloworld to com.baeldung.lambda.shipping. This means we'll need to update the CodeUri and Handler properties in template.yaml to point to the new location:

Properties:
  CodeUri: ShippingFunction
  Handler: com.baeldung.lambda.shipping.App::handleRequest

Finally, to make space for our own implementation, let's replace the body of the handler:

public APIGatewayProxyResponseEvent handleRequest(APIGatewayProxyRequestEvent input, Context context) {
    Map<String, String> headers = new HashMap<>();
    headers.put("Content-Type", "application/json");
    headers.put("X-Custom-Header", "application/json");

    return new APIGatewayProxyResponseEvent()
      .withHeaders(headers)
      .withStatusCode(200)
      .withBody(input.getResource());
}

Though unit tests are a good idea, for this example we'll remove the provided ones by deleting the src/test directory.

4.4. Testing the Empty API

Now that we've moved things around and created our API and a basic handler, let's double-check everything still works:

$ sam build

... maven output

$ sam local start-api

Let's use curl to test the HTTP GET request:

$ curl localhost:3000/consignment/123

/consignment/{id}

We can also use curl -d to POST:

$ curl -d '{"source":"data.orange.brings", "destination":"heave.wipes.clay"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/

/consignment

As we can see, both requests end successfully. Our stub code outputs the resource – the path of the request – which we can use when we set up routing to our various service methods.
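To illustrate how the resource template relates to the concrete request path, here's a minimal sketch of template matching. API Gateway does this for us before our handler runs, so this isn't code we need in the Lambda, and it makes no claim about how API Gateway is implemented. ResourceTemplate is a hypothetical name used only for illustration:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Illustrative only: the matching already happens before our Lambda is invoked
final class ResourceTemplate {

    private ResourceTemplate() {
    }

    /**
     * Matches a concrete path such as /consignment/123 against a template such as
     * /consignment/{id}. Returns the extracted path parameters, or empty if the
     * path doesn't fit the template.
     */
    static Optional<Map<String, String>> match(String template, String path) {
        String[] templateParts = trimSlashes(template).split("/");
        String[] pathParts = trimSlashes(path).split("/");
        if (templateParts.length != pathParts.length) {
            return Optional.empty();
        }
        Map<String, String> parameters = new HashMap<>();
        for (int i = 0; i < templateParts.length; i++) {
            String expected = templateParts[i];
            if (expected.startsWith("{") && expected.endsWith("}")) {
                // a {name} segment captures whatever sits at the same position in the path
                parameters.put(expected.substring(1, expected.length() - 1), pathParts[i]);
            } else if (!expected.equals(pathParts[i])) {
                return Optional.empty();
            }
        }
        return Optional.of(parameters);
    }

    // ignore leading and trailing slashes so /consignment/ and /consignment compare equal
    private static String trimSlashes(String value) {
        return value.replaceAll("^/+|/+$", "");
    }
}
```

Matching /consignment/123 against /consignment/{id} yields a map with id set to 123, which corresponds to what the handler later reads through getPathParameters().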

4.5. Creating the Endpoints Within the Lambda

We're using a single Lambda function to handle our four endpoints. We could've created a different handler class for each endpoint in the same codebase or written a separate application for each endpoint, but keeping related APIs together allows a single fleet of Lambdas to serve them with common code, which can be a better use of resources.

However, we need to build the equivalent of a REST controller to dispatch each request to a suitable Java function. So, we'll create a stub ShippingService class and route to it from the handler:

public class ShippingService {
    public String createConsignment(Consignment consignment) {
        return UUID.randomUUID().toString();
    }

    public void addItem(String consignmentId, Item item) {
    }

    public void checkIn(String consignmentId, Checkin checkin) {
    }

    public Consignment view(String consignmentId) {
        return new Consignment();
    }
}

We'll also create empty classes for Consignment, Item, and Checkin. These will soon become our model.

Now that we have a service, let's use the resource to route to the appropriate service methods. We'll add a switch statement to our handler to route requests to the service:

Object result = "OK";
ShippingService service = new ShippingService();

switch (input.getResource()) {
    case "/consignment":
        result = service.createConsignment(
          fromJson(input.getBody(), Consignment.class));
        break;
    case "/consignment/{id}":
        result = service.view(input.getPathParameters().get("id"));
        break;
    case "/consignment/{id}/item":
        service.addItem(input.getPathParameters().get("id"),
          fromJson(input.getBody(), Item.class));
        break;
    case "/consignment/{id}/checkin":
        service.checkIn(input.getPathParameters().get("id"),
          fromJson(input.getBody(), Checkin.class));
        break;
}

return new APIGatewayProxyResponseEvent()
  .withHeaders(headers)
  .withStatusCode(200)
  .withBody(toJson(result));

We can use Jackson to implement our fromJson and toJson functions.

4.6. A Stubbed Implementation

So far, we've learned how to create an AWS Lambda to support an API, test it using sam and curl, and build basic routing functionality within our handler. We could add more error handling on bad inputs.

We should note that the mappings within the template.yaml already expect the AWS API Gateway to filter requests that are not for the right paths in our API. So, we need less error handling for bad paths.
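If we still wanted a defensive fallback, one option is a dispatch table with a default response for unknown resources and a 400 for bad input. The following is only a sketch of that idea: FallbackRouter and its Response class are hypothetical stand-ins that use plain strings, so that it runs without the AWS libraries:

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Stand-in for the handler's routing; plain strings replace the API Gateway event types
final class FallbackRouter {

    // Minimal response holder so the sketch doesn't need the AWS libraries
    static final class Response {
        final int statusCode;
        final String body;

        Response(int statusCode, String body) {
            this.statusCode = statusCode;
            this.body = body;
        }
    }

    private final Map<String, Function<String, String>> routes = new LinkedHashMap<>();

    FallbackRouter register(String resource, Function<String, String> action) {
        routes.put(resource, action);
        return this;
    }

    Response route(String resource, String requestBody) {
        Function<String, String> action = routes.get(resource);
        if (action == null) {
            // plays the role of a default: branch in the switch statement
            return new Response(404, "Unknown resource: " + resource);
        }
        try {
            return new Response(200, action.apply(requestBody));
        } catch (IllegalArgumentException e) {
            // treated as bad input from the caller rather than a server fault
            return new Response(400, e.getMessage());
        }
    }
}
```

In the real handler, the same effect could be had by adding a default branch to the switch and wrapping the JSON parsing in a try block.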

Now, it's time to implement our service with its database, entity model, and Hibernate.

5. Setting up the Database

For this example, we'll use PostgreSQL as the RDBMS. Any relational database could work.

5.1. Starting PostgreSQL in Docker

First, we'll pull a PostgreSQL docker image:

$ docker pull postgres:latest

... docker output

Status: Downloaded newer image for postgres:latest

docker.io/library/postgres:latest

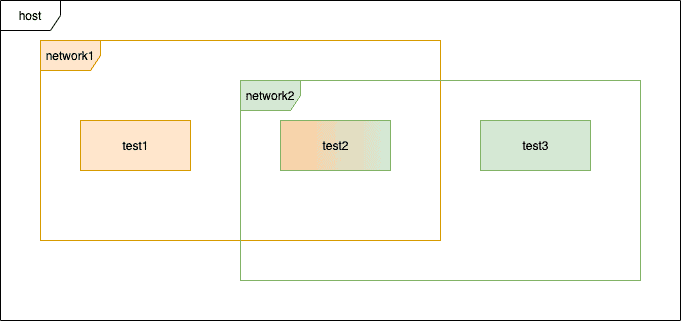

Let's now create a docker network for this database to run in. This network will allow our Lambda to communicate with the database container:

$ docker network create shipping

Next, we need to start the database container within that network:

$ docker run --name postgres \
  --network shipping \
  -e POSTGRES_PASSWORD=password \
  -d postgres:latest

With --name, we've given the container the name postgres. With --network, we've added it to our shipping docker network. To set the password for the server, we used the environment variable POSTGRES_PASSWORD, set with the -e switch.

We also used -d to run the container in the background, rather than tie up our shell. PostgreSQL will start in a few seconds.

5.2. Adding a Schema

We'll need a new schema for our tables, so let's use the psql client inside our PostgreSQL container to add the shipping schema:

$ docker exec -it postgres psql -U postgres

psql (12.4 (Debian 12.4-1.pgdg100+1))

Type "help" for help.

postgres=#

Within this shell, we create the schema:

postgres=# create schema shipping;

CREATE SCHEMA

Then we use CTRL+D to exit the shell.

We now have PostgreSQL running, ready for our Lambda to use it.

6. Adding our Entity Model and DAO

Now that we have a database, let's create our entity model and DAO. Although we're only using a single connection, let's use the Hikari connection pool to see how it could be configured for Lambdas that may need to run multiple connections against the database in a single invocation.

6.1. Adding Hibernate to the Project

We'll add dependencies to our pom.xml for both Hibernate and the Hikari Connection Pool. We'll also add the PostgreSQL JDBC driver:

<dependency>
    <groupId>org.hibernate</groupId>
    <artifactId>hibernate-core</artifactId>
    <version>5.4.21.Final</version>
</dependency>
<dependency>
    <groupId>org.hibernate</groupId>
    <artifactId>hibernate-hikaricp</artifactId>
    <version>5.4.21.Final</version>
</dependency>
<dependency>
    <groupId>org.postgresql</groupId>
    <artifactId>postgresql</artifactId>
    <version>42.2.16</version>
</dependency>

6.2. Entity Model

Let's flesh out the entity objects. A Consignment has a list of items and check-ins, as well as its source, destination, and whether it has been delivered yet (that is, whether it has checked into its final destination):

@Entity(name = "consignment")
@Table(name = "consignment")
public class Consignment {
    private String id;
    private String source;
    private String destination;
    private boolean isDelivered;
    private List<Item> items = new ArrayList<>();
    private List<Checkin> checkins = new ArrayList<>();

    // getters and setters
}

We've annotated the class as an entity and with a table name. We'll provide getters and setters, too. Let's mark the getters with the column names:

@Id
@Column(name = "consignment_id")
public String getId() {
    return id;
}

@Column(name = "source")
public String getSource() {
    return source;
}

@Column(name = "destination")
public String getDestination() {
    return destination;
}

@Column(name = "delivered", columnDefinition = "boolean")
public boolean isDelivered() {
    return isDelivered;
}

For our lists, we'll use the @ElementCollection annotation to make them ordered lists in separate tables with a foreign key relation to the consignment table:

@ElementCollection(fetch = EAGER)
@CollectionTable(name = "consignment_item", joinColumns = @JoinColumn(name = "consignment_id"))
@OrderColumn(name = "item_index")
public List<Item> getItems() {
    return items;
}

@ElementCollection(fetch = EAGER)
@CollectionTable(name = "consignment_checkin", joinColumns = @JoinColumn(name = "consignment_id"))
@OrderColumn(name = "checkin_index")
public List<Checkin> getCheckins() {
    return checkins;
}

Here's where Hibernate starts to pay for itself, performing the job of managing collections quite easily.

The Item class is more straightforward:

@Embeddable
public class Item {
    private String location;
    private String description;
    private String timeStamp;

    @Column(name = "location")
    public String getLocation() {
        return location;
    }

    @Column(name = "description")
    public String getDescription() {
        return description;
    }

    @Column(name = "timestamp")
    public String getTimeStamp() {
        return timeStamp;
    }

    // ... setters omitted
}

It's marked as @Embeddable to enable it to be part of the list definition in the parent object.

Similarly, we'll define Checkin:

@Embeddable
public class Checkin {
    private String timeStamp;
    private String location;

    @Column(name = "timestamp")
    public String getTimeStamp() {
        return timeStamp;
    }

    @Column(name = "location")
    public String getLocation() {
        return location;
    }

    // ... setters omitted
}

6.3. Creating a Shipping DAO

Our ShippingDao class will rely on being passed an open Hibernate Session. This will require the ShippingService to manage the session:

public void save(Session session, Consignment consignment) {
    Transaction transaction = session.beginTransaction();
    session.save(consignment);
    transaction.commit();
}

public Optional<Consignment> find(Session session, String id) {
    return Optional.ofNullable(session.get(Consignment.class, id));
}

We'll wire this into our ShippingService later on.

7. The Hibernate Lifecycle

So far, our entity model and DAO are comparable to non-Lambda implementations. The next challenge is creating a Hibernate SessionFactory within the Lambda's lifecycle.

7.1. Where Is The Database?

If we're going to access the database from our Lambda, then it needs to be configurable. Let's put the JDBC URL and database credentials into environment variables within our template.yaml:

Environment:
  Variables:
    DB_URL: jdbc:postgresql://postgres/postgres
    DB_USER: postgres
    DB_PASSWORD: password

These environment variables will get injected into the Java runtime. The postgres user is the default for our Docker PostgreSQL container. We assigned the password as password when we started the container earlier.

Within the DB_URL, we have the server name – //postgres is the name we gave our container – and the database name postgres is the default database.

It's worth noting that, though we're hard-coding these values in this example, SAM templates allow us to declare inputs and parameter overrides. Therefore, they can be made parameterizable later on.
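To make the structure of the DB_URL explicit, here's a small sketch that splits a PostgreSQL JDBC URL into host, port, and database name. JdbcUrlParts is a hypothetical helper written for illustration only; the PostgreSQL driver does its own parsing, and we don't need this class in the Lambda:

```java
import java.net.URI;

// Breaks a PostgreSQL JDBC URL into its parts so we can see what each segment means
final class JdbcUrlParts {
    final String host;
    final int port;
    final String database;

    private JdbcUrlParts(String host, int port, String database) {
        this.host = host;
        this.port = port;
        this.database = database;
    }

    static JdbcUrlParts parse(String jdbcUrl) {
        if (!jdbcUrl.startsWith("jdbc:postgresql://")) {
            throw new IllegalArgumentException("Not a PostgreSQL JDBC URL: " + jdbcUrl);
        }
        // dropping the jdbc: prefix leaves something java.net.URI can parse
        URI uri = URI.create(jdbcUrl.substring("jdbc:".length()));
        // when no port is given, PostgreSQL's default port 5432 applies
        int port = uri.getPort() == -1 ? 5432 : uri.getPort();
        String database = uri.getPath().replaceFirst("^/", "");
        return new JdbcUrlParts(uri.getHost(), port, database);
    }
}
```

For jdbc:postgresql://postgres/postgres, the host is the container name postgres, the port falls back to 5432, and the database is postgres.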

7.2. Creating the Session Factory

We have both Hibernate and the Hikari connection pool to configure. To provide settings to Hibernate, we add them to a Map:

Map<String, String> settings = new HashMap<>();

settings.put(URL, System.getenv("DB_URL"));

settings.put(DIALECT, "org.hibernate.dialect.PostgreSQLDialect");

settings.put(DEFAULT_SCHEMA, "shipping");

settings.put(DRIVER, "org.postgresql.Driver");

settings.put(USER, System.getenv("DB_USER"));

settings.put(PASS, System.getenv("DB_PASSWORD"));

settings.put("hibernate.hikari.connectionTimeout", "20000");

settings.put("hibernate.hikari.minimumIdle", "1");

settings.put("hibernate.hikari.maximumPoolSize", "2");

settings.put("hibernate.hikari.idleTimeout", "30000");

settings.put(HBM2DDL_AUTO, "create-only");

settings.put(HBM2DDL_DATABASE_ACTION, "create");

Here, we're using System.getenv to pull runtime settings from the environment. We've added the HBM2DDL_ settings to make our application generate the database tables. However, we should comment out or remove these lines after the database schema is generated, and should avoid allowing our Lambda to do this in production. It's helpful for our testing now, though.

As we can see, many of the settings have constants already defined in the AvailableSettings class in Hibernate, though the Hikari-specific ones don't.
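As an alternative to commenting the schema-generation lines in and out, we could drive them from the environment. The sketch below assumes a hypothetical DB_CREATE_SCHEMA variable, which is not something SAM or Hibernate defines. It uses the literal property names behind the AvailableSettings constants so that it runs without Hibernate on the classpath, and it leaves out the Hikari settings for brevity:

```java
import java.util.HashMap;
import java.util.Map;

// Builds the Hibernate settings map from environment values
final class HibernateSettings {

    private HibernateSettings() {
    }

    static Map<String, String> fromEnvironment(Map<String, String> env) {
        Map<String, String> settings = new HashMap<>();
        settings.put("hibernate.connection.url", required(env, "DB_URL"));
        settings.put("hibernate.connection.username", required(env, "DB_USER"));
        settings.put("hibernate.connection.password", required(env, "DB_PASSWORD"));
        settings.put("hibernate.connection.driver_class", "org.postgresql.Driver");
        settings.put("hibernate.dialect", "org.hibernate.dialect.PostgreSQLDialect");
        settings.put("hibernate.default_schema", "shipping");

        // DB_CREATE_SCHEMA is a hypothetical switch introduced for this sketch
        if ("true".equalsIgnoreCase(env.get("DB_CREATE_SCHEMA"))) {
            settings.put("hibernate.hbm2ddl.auto", "create-only");
            settings.put("javax.persistence.schema-generation.database.action", "create");
        }
        return settings;
    }

    // fail fast with a clear message when a variable is missing
    private static String required(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("Missing environment variable " + name);
        }
        return value;
    }
}
```

In the Lambda, we'd call HibernateSettings.fromEnvironment(System.getenv()). Passing the environment in as a map also makes the method easy to exercise in a unit test.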

Now that we have the settings, we need to build the SessionFactory. We'll individually add our entity classes to it:

StandardServiceRegistry registry = new StandardServiceRegistryBuilder()
  .applySettings(settings)
  .build();

return new MetadataSources(registry)
  .addAnnotatedClass(Consignment.class)
  .addAnnotatedClass(Item.class)
  .addAnnotatedClass(Checkin.class)
  .buildMetadata()
  .buildSessionFactory();

7.3. Add to the Handler

We need the handler to create the session factory on each invocation and guarantee that it's closed afterward. With that in mind, let's extract most of the controller functionality into a method called routeRequest and modify our handler to create the SessionFactory in a try-with-resources block:

try (SessionFactory sessionFactory = createSessionFactory()) {
    ShippingService service = new ShippingService(sessionFactory, new ShippingDao());
    return routeRequest(input, service);
}

We've also changed our ShippingService to have the SessionFactory and ShippingDao as properties, injected via the constructor, but it's not using them yet.
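The guarantee we get from try-with-resources can be seen in isolation with a stand-in resource. In this sketch, FakeFactory is a hypothetical class playing the role of the SessionFactory, so the example runs without Hibernate. It records the order of events whether routing succeeds or throws:

```java
import java.util.ArrayList;
import java.util.List;

// Stand-in types that show the close-on-every-invocation guarantee
final class LifecycleDemo {

    static final List<String> events = new ArrayList<>();

    private LifecycleDemo() {
    }

    // Plays the role of the SessionFactory: something expensive that must be closed
    static final class FakeFactory implements AutoCloseable {
        FakeFactory() {
            events.add("open");
        }

        @Override
        public void close() {
            events.add("close");
        }
    }

    static String handle(boolean failDuringRouting) {
        try (FakeFactory factory = new FakeFactory()) {
            if (failDuringRouting) {
                throw new IllegalStateException("routing failed");
            }
            events.add("routed");
            return "OK";
        }
        // close() has run by this point, on both the normal and the exceptional path
    }
}
```

After handle(false), the recorded events are open, routed, close. After handle(true), they are open, close, and the exception still propagates to the caller.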

7.4. Testing Hibernate

At this point, though the ShippingService does nothing, invoking the Lambda should cause Hibernate to start up and generate DDL.

Let's double-check the DDL it generates before we comment out the settings for that:

$ sam build

$ sam local start-api --docker-network shipping

We build the application as before, but now we're adding the --docker-network parameter to sam local. This runs the test Lambda within the same network as our database so that the Lambda can reach the database container by using its container name.

When we first hit the endpoint using curl, our tables should be created:

$ curl localhost:3000/consignment/123

{"id":null,"source":null,"destination":null,"items":[],"checkins":[],"delivered":false}

The stub code still returned a blank Consignment. But, let's now check the database to see if the tables were created:

$ docker exec -it postgres pg_dump -s -U postgres

... DDL output

CREATE TABLE shipping.consignment_item (

consignment_id character varying(255) NOT NULL,

...

Once we're happy our Hibernate setup is working, we can comment out the HBM2DDL_ settings.

8. Complete the Business Logic

All that remains is to make the ShippingService use the ShippingDao to implement the business logic. Each method will open a Session from the session factory in a try-with-resources block to ensure it gets closed.

8.1. Create Consignment

A new consignment hasn't been delivered and should receive a new ID. Then we should save it in the database:

public String createConsignment(Consignment consignment) {
    try (Session session = sessionFactory.openSession()) {
        consignment.setDelivered(false);
        consignment.setId(UUID.randomUUID().toString());
        shippingDao.save(session, consignment);
        return consignment.getId();
    }
}

8.2. View Consignment

To get a consignment, we need to read it from the database by ID. Though a REST API should return Not Found on an unknown request, for this example, we'll just return an empty consignment if none is found:

public Consignment view(String consignmentId) {
    try (Session session = sessionFactory.openSession()) {
        return shippingDao.find(session, consignmentId)
          .orElseGet(Consignment::new);
    }
}

8.3. Add Item

Items will go into our list of items in the order received:

public void addItem(String consignmentId, Item item) {
    try (Session session = sessionFactory.openSession()) {
        shippingDao.find(session, consignmentId)
          .ifPresent(consignment -> addItem(session, consignment, item));
    }
}

private void addItem(Session session, Consignment consignment, Item item) {
    consignment.getItems()
      .add(item);
    shippingDao.save(session, consignment);
}

Ideally, we'd have better error handling if the consignment did not exist, but for this example, non-existent consignments will be ignored.
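One way to add that handling would be to map the Optional returned by the DAO to an HTTP status. This is only a sketch: NotFoundMapping and its Response class are hypothetical stand-ins for the API Gateway response type, and the JSON rendering is passed in as a function so that the example doesn't depend on Jackson:

```java
import java.util.Optional;
import java.util.function.Function;

// Maps "found or not" to an HTTP-style response without needing the AWS libraries
final class NotFoundMapping {

    static final class Response {
        final int statusCode;
        final String body;

        Response(int statusCode, String body) {
            this.statusCode = statusCode;
            this.body = body;
        }
    }

    private NotFoundMapping() {
    }

    /**
     * Returns 200 with the rendered entity when it's present,
     * or 404 when the ID is unknown.
     */
    static <T> Response toResponse(Optional<T> found, Function<T, String> toJson) {
        return found
          .map(entity -> new Response(200, toJson.apply(entity)))
          .orElseGet(() -> new Response(404, "{\"message\":\"Not Found\"}"));
    }
}
```

With this approach, the service methods would return the Optional to the handler instead of substituting an empty Consignment or silently ignoring the request.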

8.4. Check-In

The check-ins need to be sorted in order of when they happen, not when the request is received. Also, when the item reaches the final destination, it should be marked as delivered:

public void checkIn(String consignmentId, Checkin checkin) {
    try (Session session = sessionFactory.openSession()) {
        shippingDao.find(session, consignmentId)
          .ifPresent(consignment -> checkIn(session, consignment, checkin));
    }
}

private void checkIn(Session session, Consignment consignment, Checkin checkin) {
    consignment.getCheckins().add(checkin);
    consignment.getCheckins().sort(Comparator.comparing(Checkin::getTimeStamp));
    if (checkin.getLocation().equals(consignment.getDestination())) {
        consignment.setDelivered(true);
    }
    shippingDao.save(session, consignment);
}

9. Testing the App

Let's simulate a package traveling from The White House to the Empire State Building.

An agent creates the journey:

$ curl -d '{"source":"data.orange.brings", "destination":"heave.wipes.clay"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/

"3dd0f0e4-fc4a-46b4-8dae-a57d47df5207"

We now have the ID 3dd0f0e4-fc4a-46b4-8dae-a57d47df5207 for the consignment. Then, someone collects two items for the consignment – a picture and a piano:

$ curl -d '{"location":"data.orange.brings", "timeStamp":"20200101T120000", "description":"picture"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207/item

"OK"

$ curl -d '{"location":"data.orange.brings", "timeStamp":"20200101T120001", "description":"piano"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207/item

"OK"

Sometime later, there's a check-in:

$ curl -d '{"location":"united.alarm.raves", "timeStamp":"20200101T173301"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207/checkin

"OK"

And again later:

$ curl -d '{"location":"wink.sour.chasing", "timeStamp":"20200101T191202"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207/checkin

"OK"

The customer, at this point, requests the status of the consignment:

$ curl http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207

{

"id":"3dd0f0e4-fc4a-46b4-8dae-a57d47df5207",

"source":"data.orange.brings",

"destination":"heave.wipes.clay",

"items":[

{"location":"data.orange.brings","description":"picture","timeStamp":"20200101T120000"},

{"location":"data.orange.brings","description":"piano","timeStamp":"20200101T120001"}

],

"checkins":[

{"timeStamp":"20200101T173301","location":"united.alarm.raves"},

{"timeStamp":"20200101T191202","location":"wink.sour.chasing"}

],

"delivered":false

}

They see the progress, and it's not yet delivered.

A message should have been sent at 20:12 to say it reached deflection.famed.apple, but it gets delayed, and the message from 21:46 at the destination gets there first:

$ curl -d '{"location":"heave.wipes.clay", "timeStamp":"20200101T214622"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207/checkin

"OK"

The customer, at this point, requests the status of the consignment:

$ curl http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207

{

"id":"3dd0f0e4-fc4a-46b4-8dae-a57d47df5207",

...

{"timeStamp":"20200101T191202","location":"wink.sour.chasing"},

{"timeStamp":"20200101T214622","location":"heave.wipes.clay"}

],

"delivered":true

}

Now it's delivered. So, when the delayed message gets through:

$ curl -d '{"location":"deflection.famed.apple", "timeStamp":"20200101T201254"}' \

-H 'Content-Type: application/json' \

http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207/checkin

"OK"

$ curl http://localhost:3000/consignment/3dd0f0e4-fc4a-46b4-8dae-a57d47df5207

{

"id":"3dd0f0e4-fc4a-46b4-8dae-a57d47df5207",

...

{"timeStamp":"20200101T191202","location":"wink.sour.chasing"},

{"timeStamp":"20200101T201254","location":"deflection.famed.apple"},

{"timeStamp":"20200101T214622","location":"heave.wipes.clay"}

],

"delivered":true

}

The check-in is put in the right place in the timeline.
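This ordering works because the service compares the timestamps as plain strings, and a fixed-width, most-significant-first format sorts the same way alphabetically as it does chronologically. The sketch below checks that against a parsed date-time comparison. The pattern is an assumption inferred from the sample requests, and CheckinOrdering is a hypothetical helper, not part of the example project:

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class CheckinOrdering {

    // Pattern assumed from the sample requests, for example 20200101T173301
    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyyMMdd'T'HHmmss");

    private CheckinOrdering() {
    }

    static LocalDateTime parse(String timeStamp) {
        return LocalDateTime.parse(timeStamp, FORMAT);
    }

    // Sorts by plain string comparison, as comparing the String timeStamp field does
    static List<String> sortAsStrings(List<String> timeStamps) {
        List<String> sorted = new ArrayList<>(timeStamps);
        sorted.sort(Comparator.naturalOrder());
        return sorted;
    }

    // Sorts by the parsed date-time, which also rejects malformed values
    static List<String> sortAsDates(List<String> timeStamps) {
        List<String> sorted = new ArrayList<>(timeStamps);
        sorted.sort(Comparator.comparing(CheckinOrdering::parse));
        return sorted;
    }
}
```

Both methods put the delayed 20200101T201254 check-in between the 19:12 and 21:46 entries. If clients could send timestamps in other formats, parsing before comparing would be the safer choice.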

10. Conclusion

In this article, we discussed the challenges of using a heavyweight framework like Hibernate in a lightweight container such as AWS Lambda.

We built a Lambda and REST API and learned how to test it on our local machine using Docker and AWS SAM CLI. Then, we constructed an entity model for Hibernate to use with our database. We also used Hibernate to initialize our tables.

Finally, we integrated the Hibernate SessionFactory into our application, making sure to close it before the Lambda exited.

As usual, the example code for this article can be found over on GitHub.

The post How to Implement Hibernate in an AWS Lambda Function in Java first appeared on Baeldung.
