![]()

1. Overview

In this tutorial, we’ll discuss Java’s support for HarperDB, a high-performing flexible NoSQL database with the power of SQL. No doubt, the standard Java database connectivity helps integrate it with a wide range of leading BI, reporting, ETL Tools, and custom applications. It also provides REST APIs for performing DB administration and operations.

However, JDBC streamlines and accelerates the adoption of HarperDB within applications. It might simplify and expedite the process significantly.

For this tutorial, we’ll use the Java Test Container library. This would enable us to run a HarperDB Docker container and showcase a live integration.

Let’s explore the extent of JDBC support available for HarperDB through some examples.

2. JDBC Library

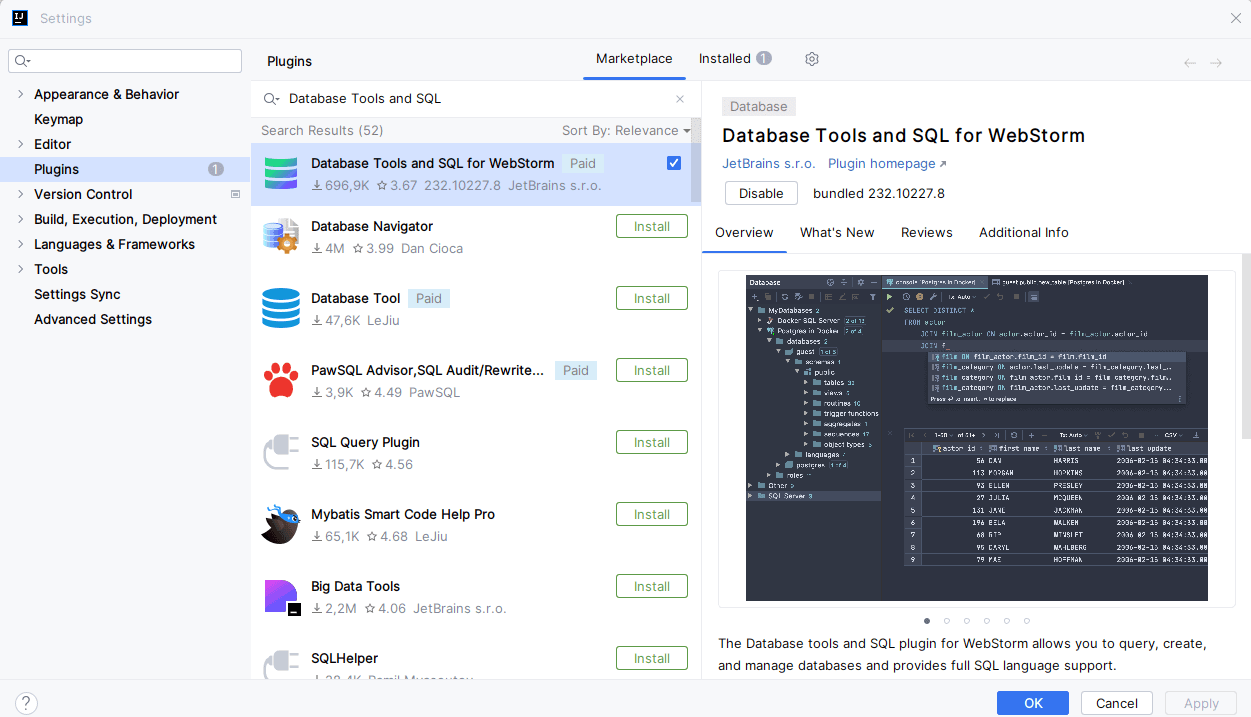

HarperDB ships with a JDBC library, which we’ll import in our pom.xml file:

<dependency>

<groupId>com.baeldung</groupId>

<artifactId>java-harperdb</artifactId>

<version>4.2</version>

<scope>system</scope>

<systemPath>${project.basedir}/lib/cdata.jdbc.harperdb.jar</systemPath>

</dependency>

Since it’s unavailable on a public Maven repository, we must import it from our local directory or a private Maven repository.

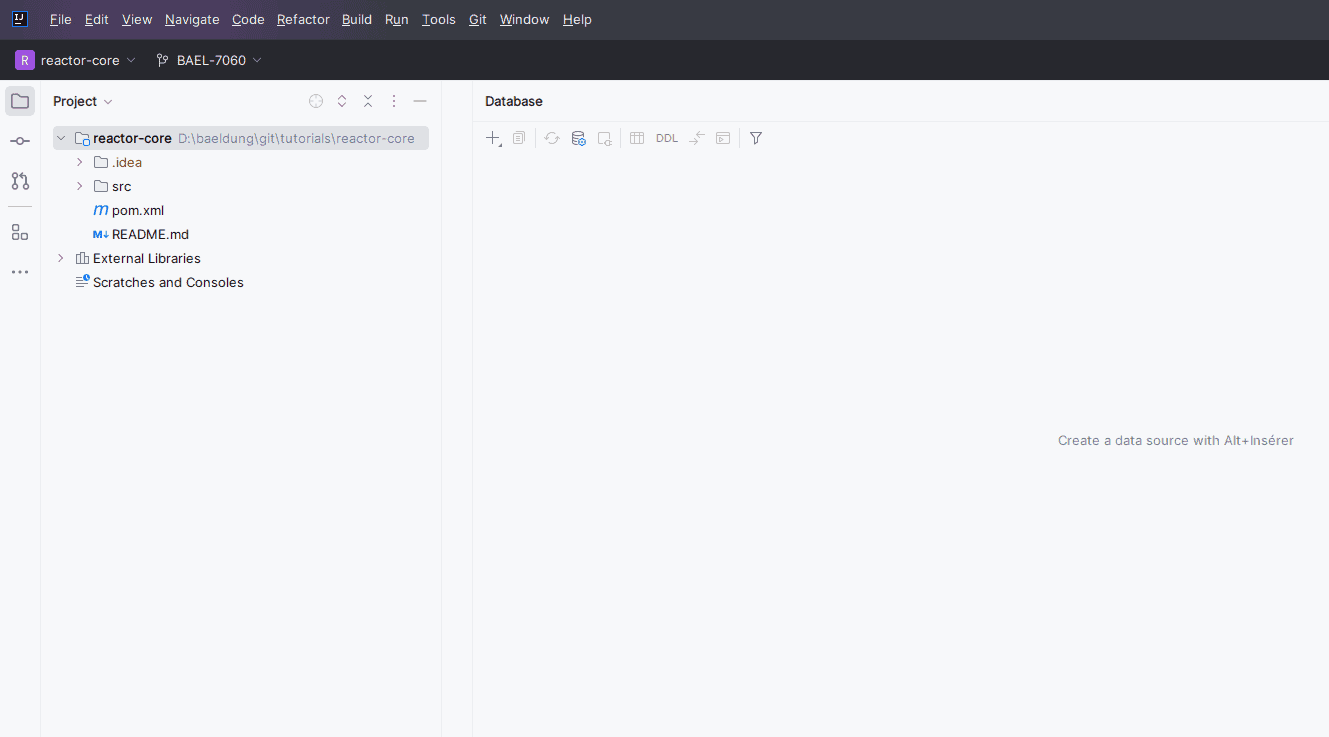

3. Create JDBC Connection

Before we can start executing the SQL statements in the Harper DB, we’ll explore how to acquire the java.sql.Connection object.

Let’s start with the first option:

@Test

void whenConnectionInfoInURL_thenConnectSuccess() {

assertDoesNotThrow(() -> {

final String JDBC_URL = "jdbc:harperdb:Server=127.0.0.1:" + port + ";User=admin;Password=password;";

try (Connection connection = DriverManager.getConnection(JDBC_URL)) {

connection.createStatement().executeQuery("select 1");

logger.info("Connection Successful");

}

});

}

There isn’t much difference compared to getting a connection for relational databases, except for the prefix jdbc:harperdb: in the JDBC URL. Usually, the password should always be encrypted and decrypted before being passed to the URL.

Moving on, let’s take a look at the second option:

@Test

void whenConnectionInfoInProperties_thenConnectSuccess() {

assertDoesNotThrow(() -> {

Properties prop = new Properties();

prop.setProperty("Server", "127.0.0.1:" + port);

prop.setProperty("User", "admin");

prop.setProperty("Password", "password");

try (Connection connection = DriverManager.getConnection("jdbc:harperdb:", prop)) {

connection.createStatement().executeQuery("select 1");

logger.info("Connection Successful");

}

});

}

In contrast to the earlier option, we used the Properties object to pass the connectivity details to DriveManager.

Applications often use connection pools for optimal performance. Hence, it’s reasonable to expect that HarperDB’s JDBC driver also incorporates the same:

@Test

void whenConnectionPooling_thenConnectSuccess() {

assertDoesNotThrow(() -> {

HarperDBConnectionPoolDataSource harperdbPoolDataSource = new HarperDBConnectionPoolDataSource();

final String JDBC_URL = "jdbc:harperdb:UseConnectionPooling=true;PoolMaxSize=2;Server=127.0.0.1:" + port

+ ";User=admin;Password=password;";

harperdbPoolDataSource.setURL(JDBC_URL);

try(Connection connection = harperdbPoolDataSource.getPooledConnection().getConnection()) {

connection.createStatement().executeQuery("select 1");

logger.info("Connection Successful");

}

});

}

To enable connection pooling, we used the property UseConnectionPooling=true. Also, we had to use the driver class HarperDBConnectionPoolDataSource to get the connection pool.

Additionally, other connection properties can be used for more options.

4. Create Schema and Tables

HaperDB provides RESTful database operation APIs for configuring and administering the database. It also has APIs for creating database objects and performing SQL CRUD operations on them.

However, DDL statements like Create Table, Create Schema, etc. aren’t supported. However, HarperDB provides stored procedures for creating schemas and tables:

@Test

void whenExecuteStoredToCreateTable_thenSuccess() throws SQLException {

final String CREATE_TABLE_PROC = "CreateTable";

try (Connection connection = getConnection()) {

CallableStatement callableStatement = connection.prepareCall(CREATE_TABLE_PROC);

callableStatement.setString("SchemaName", "Prod");

callableStatement.setString("TableName", "Subject");

callableStatement.setString("PrimaryKey", "id");

Boolean result = callableStatement.execute();

ResultSet resultSet = callableStatement.getResultSet();

while (resultSet.next()) {

String tableCreated = resultSet.getString("Success");

assertEquals("true", tableCreated);

}

}

}

The CallableStatement executes the CreateTable stored procedure and creates the table Subject in the Prod schema. The procedure takes SchemaName, TableName, and PrimaryKey as the input parameters. Interestingly we didn’t create the schema explicitly. The schema gets created if it’s not present in the database.

Similarly, other stored procedures like CreateHarperSchema, DropSchema, DropTable, etc. can be invoked by the CallableStatement.

5. CRUD Support

The HarperDB JDBC Driver supports the CRUD operations. We can create, query, update, and delete records from tables using java.sql.Statement and java.sql.PreparedSatement.

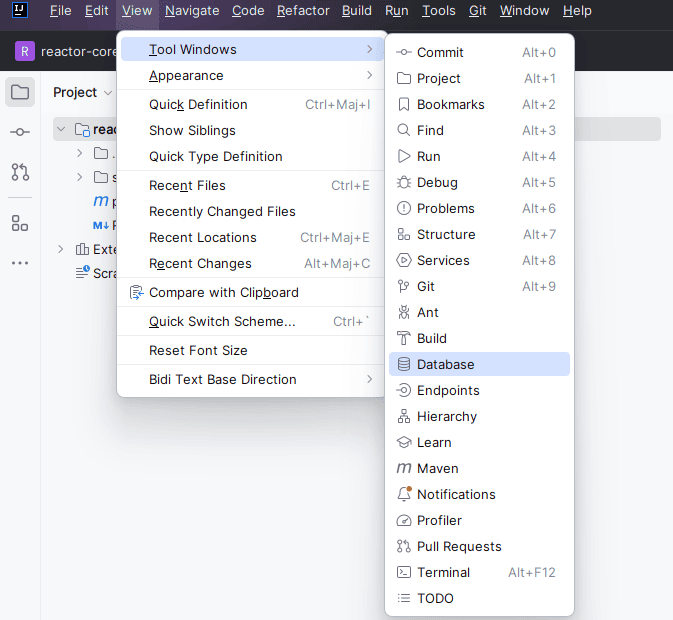

5.1. DB Model

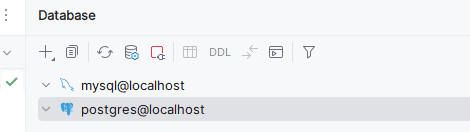

Before we move on to the next sections, let’s set up some data for executing SQL statements. Let’s assume a database schema called Demo that has three tables:

![]()

Subject and Teacher are two master tables. The table Teacher_Details has the details of the subjects taught by teachers. Unexpectedly, there are no foreign key constraints on the fields teacher_id and subject_id because there is no support for it in HarperDB.

Let’s take a look at the data in the Subject table:

[

{"id":1, "name":"English"},

{"id":2, "name":"Maths"},

{"id":3, "name":"Science"}

]

Similarly, let’s take a look at the data in the Teacher table:

[

{"id":1, "name":"James Cameron", "joining_date":"04-05-2000"},

{"id":2, "name":"Joe Biden", "joining_date":"20-10-2005"},

{"id":3, "name":"Jessie Williams", "joining_date":"04-06-1997"},

{"id":4, "name":"Robin Williams", "joining_date":"01-01-2020"},

{"id":5, "name":"Eric Johnson", "joining_date":"04-05-2022"},

{"id":6, "name":"Raghu Yadav", "joining_date":"02-02-1999"}

]

Now, let’s see the records in the Teacher_Details table:

[

{"id":1, "teacher_id":1, "subject_id":1},

{"id":2, "teacher_id":1, "subject_id":2},

{"id":3, "teacher_id":2, "subject_id":3 },

{"id":4, "teacher_id":3, "subject_id":1},

{"id":5, "teacher_id":3, "subject_id":3},

{"id":6, "teacher_id":4, "subject_id":2},

{"id":7, "teacher_id":5, "subject_id":3},

{"id":8, "teacher_id":6, "subject_id":1},

{"id":9, "teacher_id":6, "subject_id":2},

{"id":15, "teacher_id":6, "subject_id":3}

]

Notably, the column id in all the tables is the primary key.

5.2. Create Records With Insert

Let’s introduce some more subjects by creating some records in the Subject table:

@Test

void givenStatement_whenInsertRecord_thenSuccess() throws SQLException {

final String INSERT_SQL = "insert into Demo.Subject(id, name) values "

+ "(4, 'Social Studies'),"

+ "(5, 'Geography')";

try (Connection connection = getConnection()) {

Statement statement = connection.createStatement();

assertDoesNotThrow(() -> statement.execute(INSERT_SQL));

assertEquals(2, statement.getUpdateCount());

}

}

We used java.sql.Statement to insert two records into the Subject table.

Let’s implement a better version with the help of the java.sql.PrepareStatement by considering the Teacher table:

@Test

void givenPrepareStatement_whenAddToBatch_thenSuccess() throws SQLException {

final String INSERT_SQL = "insert into Demo.Teacher(id, name, joining_date) values"

+ "(?, ?, ?)";

try (Connection connection = getConnection()) {

PreparedStatement preparedStatement = connection.prepareStatement(INSERT_SQL);

preparedStatement.setInt(1, 7);

preparedStatement.setString(2, "Bret Lee");

preparedStatement.setString(3, "07-08-2002");

preparedStatement.addBatch();

preparedStatement.setInt(1, 8);

preparedStatement.setString(2, "Sarah Glimmer");

preparedStatement.setString(3, "07-08-1997");

preparedStatement.addBatch();

int[] recordsInserted = preparedStatement.executeBatch();

assertEquals(2, Arrays.stream(recordsInserted).sum());

}

}

So, we parameterized the insert statement and executed them in batches with the methods addBatch() and executeBatch(). Batch execution is crucial for processing large volumes of records. Hence its support in HarperDB’s JDBC driver is immensely valuable.

5.3. Create Records With Insert Into Select

HarperDB JDBC driver also provides the feature of creating temporary tables at run time. This temporary table can later be used for inserting into a final target table with a single insert into select statement. Similar to batch execution this also helps reduce the number of calls to the database.

Let’s see this feature in action:

@Test

void givenTempTable_whenInsertIntoSelectTempTable_thenSuccess() throws SQLException {

try (Connection connection = getConnection()) {

Statement statement = connection.createStatement();

assertDoesNotThrow(() -> {

statement.execute("insert into Teacher#TEMP(id, name, joining_date) "

+ "values('12', 'David Flinch', '04-04-2014')");

statement.execute("insert into Teacher#TEMP(id, name, joining_date) "

+ "values('13', 'Stephen Hawkins', '04-07-2017')");

statement.execute("insert into Teacher#TEMP(id, name, joining_date) "

+ "values('14', 'Albert Einstein', '12-08-2020')");

statement.execute("insert into Teacher#TEMP(id, name, joining_date) "

+ "values('15', 'Leo Tolstoy', '20-08-2022')");

});

assertDoesNotThrow(() -> statement.execute("insert into Demo.Teacher(id, name, joining_date) "

+ "select id, name, joining_date from Teacher#TEMP"));

ResultSet resultSet = statement.executeQuery("select count(id) as rows from Demo.Teacher where id in"

+ " (12, 13, 14, 15)");

resultSet.next();

int totalRows = resultSet.getInt("rows");

assertEquals(4, totalRows);

}

}

All temp tables should have the format [table name]#TEMP as in Teacher#TEMP. It gets created as soon as we execute the insert statement. Four records were inserted into the temporary table Teacher#TEMP. Then with a single insert into select statement all the records got inserted into the target Teacher table.

5.4. Read Records From Tables

Let’s begin by querying the Subject table with the help of java.sql.Statement:

@Test

void givenStatement_whenFetchRecord_thenSuccess() throws SQLException {

final String SQL_QUERY = "select id, name from Demo.Subject where name = 'Maths'";

try (Connection connection = getConnection()) {

Statement statement = connection.createStatement();

ResultSet resultSet = statement.executeQuery(SQL_QUERY);

while (resultSet.next()) {

Integer id = resultSet.getInt("id");

String name = resultSet.getString("name");

assertNotNull(id);

logger.info("Subject id:" + id + " Subject Name:" + name);

}

}

}

The executeQuery() method of the java.sql.Statement executes successfully and fetches the records.

Let’s see if the driver supports java.sql.PrepareStatement. This time let’s execute a query with a join condition to make it a little bit more exciting and complex:

@Test

void givenPreparedStatement_whenExecuteJoinQuery_thenSuccess() throws SQLException {

final String JOIN_QUERY = "SELECT t.name as teacher_name, t.joining_date as joining_date, s.name as subject_name "

+ "from Demo.Teacher_Details AS td "

+ "INNER JOIN Demo.Teacher AS t ON t.id = td.teacher_id "

+ "INNER JOIN Demo.Subject AS s on s.id = td.subject_id "

+ "where t.name = ?";

try (Connection connection = getConnection()) {

PreparedStatement preparedStatement = connection.prepareStatement(JOIN_QUERY);

preparedStatement.setString(1, "Eric Johnson");

ResultSet resultSet = preparedStatement.executeQuery();

while (resultSet.next()) {

String teacherName = resultSet.getString("teacher_name");

String subjectName = resultSet.getString("subject_name");

String joiningDate = resultSet.getString("joining_date");

assertEquals("Eric Johnson", teacherName);

assertEquals("Maths", subjectName);

}

}

}

We not only executed a parameterized query but also discovered that HarperDB can perform join queries on unstructured data.

5.5. Read Records From User-Defined Views

The HarperDB driver has the feature for creating user-defined views. These are virtual views that can be used in scenarios where we don’t have access to the table queries, i.e., when using the driver from a tool.

Let’s define a view in a file UserDefinedViews.json:

{

"View_Teacher_Details": {

"query": "SELECT t.name as teacher_name, t.joining_date as joining_date, s.name as subject_name from Demo.Teacher_Details AS td

INNER JOIN Demo.Teacher AS t ON t.id = td.teacher_id INNER JOIN Demo.Subject AS s on s.id = td.subject_id"

}

}

The query gets the details of the teacher by joining all the tables. The default schema of the view is UserViews.

The driver looks for the UserDefinedViews.json in the directory defined by the connection property Location. Let’s see how this works:

@Test

void givenUserDefinedView_whenQueryView_thenSuccess() throws SQLException {

URL url = ClassLoader.getSystemClassLoader().getResource("UserDefinedViews.json");

String folderPath = url.getPath().substring(0, url.getPath().lastIndexOf('/'));

try(Connection connection = getConnection(Map.of("Location", folderPath))) {

PreparedStatement preparedStatement = connection.prepareStatement("select teacher_name,subject_name"

+ " from UserViews.View_Teacher_Details where subject_name = ?");

preparedStatement.setString(1, "Science");

ResultSet resultSet = preparedStatement.executeQuery();

while(resultSet.next()) {

assertEquals("Science", resultSet.getString("subject_name"));

}

}

}

To create the database connection, the program passes on the folder path of the file UserDefinedViews.json to the method getConnection(). After this, the driver executes the query on the view View_Teacher_Details and gets the details of all the teachers who teach Science.

5.6. Save and Read Records From Cache

Applications prefer caching frequently used and accessed data to improve performance. HaperDB driver enables caching data in locations such as on a local disk or a database.

For our example, we’ll use an embedded Derby database as the cache in our Java application. But there is provision for selecting other databases for caching as well.

Let’s explore more on this:

@Test

void givenAutoCache_whenQuery_thenSuccess() throws SQLException {

URL url = ClassLoader.getSystemClassLoader().getResource("test.db");

String folderPath = url.getPath().substring(0, url.getPath().lastIndexOf('/'));

logger.info("Cache Location:" + folderPath);

try(Connection connection = getConnection(Map.of("AutoCache", "true", "CacheLocation", folderPath))) {

PreparedStatement preparedStatement = connection.prepareStatement("select id, name from Demo.Subject");

ResultSet resultSet = preparedStatement.executeQuery();

while(resultSet.next()) {

logger.info("Subject Name:" + resultSet.getString("name"));

}

}

}

We’ve used two connection properties AutoCache and CacheLocation. AutoCache=true means all queries to the table are going to be cached to the location specified in the property CacheLocation. However, the driver provides explicit caching capability as well using the CACHE statements.

5.7. Update Records

Let’s see an example of updating the subjects taught by the teachers with java.sql.Statement:

@Test

void givenStatement_whenUpdateRecord_thenSuccess() throws SQLException {

final String UPDATE_SQL = "update Demo.Teacher_Details set subject_id = 2 "

+ "where teacher_id in (2, 5)";

final String UPDATE_SQL_WITH_SUB_QUERY = "update Demo.Teacher_Details "

+ "set subject_id = (select id from Demo.Subject where name = 'Maths') "

+ "where teacher_id in (select id from Demo.Teacher where name in ('Joe Biden', 'Eric Johnson'))";

try (Connection connection = getConnection()) {

Statement statement = connection.createStatement();

assertDoesNotThrow(() -> statement.execute(UPDATE_SQL));

assertEquals(2, statement.getUpdateCount());

}

try (Connection connection = getConnection()) {

assertThrows(SQLException.class, () -> connection.createStatement().execute(UPDATE_SQL_WITH_SUB_QUERY));

}

}

The first update statement successfully executes when we directly use the id of the teacher and subject and don’t look up the values from the other tables. However, the second update fails when we try to look up the id values from the Teacher and Subject tables. This happens because currently, HarperDB doesn’t support subqueries.

Let’s use java.sql.PreparedStatement to update the subjects taught by a teacher:

@Test

void givenPreparedStatement_whenUpdateRecord_thenSuccess() throws SQLException {

final String UPDATE_SQL = "update Demo.Teacher_Details set subject_id = ? "

+ "where teacher_id in (?, ?)";

try (Connection connection = getConnection()) {

PreparedStatement preparedStatement = connection.prepareStatement(UPDATE_SQL);

preparedStatement.setInt(1, 1);

//following is not supported by the HarperDB driver

//Integer[] teacherIds = {4, 5};

//Array teacherIdArray = connection.createArrayOf(Integer.class.getTypeName(), teacherIds);

preparedStatement.setInt(2, 4);

preparedStatement.setInt(3, 5);

assertDoesNotThrow(() -> preparedStatement.execute());

assertEquals(2, preparedStatement.getUpdateCount());

}

}

Unfortunately, the HarperDB JDBC driver doesn’t support creating a java.sql.Array object and hence we cannot pass an array of teacher ids as a parameter in the in clause. That is why we have to call setInt() multiple times to set the teacher ids. This is a drawback and can cause lots of inconvenience.

5.8. Delete Records

Let’s execute a delete statement on the table Teacher_Details:

@Test

void givenStatement_whenDeleteRecord_thenSuccess() throws SQLException {

final String DELETE_SQL = "delete from Demo.Teacher_Details where teacher_id = 6 and subject_id = 3";

try (Connection connection = getConnection()) {

Statement statement = connection.createStatement();

assertDoesNotThrow(() -> statement.execute(DELETE_SQL));

assertEquals(1, statement.getUpdateCount());

}

}

java.sql.Statement helped delete the record successfully.

Moving on, let’s try using java.sql.PreparedStatement:

@Test

void givenPreparedStatement_whenDeleteRecord_thenSuccess() throws SQLException {

final String DELETE_SQL = "delete from Demo.Teacher_Details where teacher_id = ? and subject_id = ?";

try (Connection connection = getConnection()) {

PreparedStatement preparedStatement = connection.prepareStatement(DELETE_SQL);

preparedStatement.setInt(1, 6);

preparedStatement.setInt(2, 2);

assertDoesNotThrow(() -> preparedStatement.execute());

assertEquals(1, preparedStatement.getUpdateCount());

}

}

We could parameterize and execute the delete statement successfully.

6. Conclusion

In this article, we learned about the JDBC support in HarperDB. HarperDB is a NoSQL database but its JDBC driver enables Java applications to execute SQL statements. There are a few SQL features that HarperDB doesn’t yet support.

Furthermore, the driver is also not one hundred percent compliant with JDBC protocol. But it compensates it with some of its features like user-defined views, temporary tables, caching, etc.

As usual, the codes used for the examples in this article are available over on GitHub.

![]()