Java Arrays Tutorial

Fix Ambiguous Method Call Error in Mockito

1. Overview

In this tutorial, we’ll see how to avoid ambiguous method calls in the specific context of the Mockito framework.

In Java, method overloading allows a class to have multiple methods with the same name but different parameters. An ambiguous method call happens when the compiler can’t determine the concrete method to invoke based on the provided arguments.

2. Introducing Mockito’s ArgumentMatchers

Mockito is a mocking framework for unit testing Java applications. The latest version of the library can be found in the Maven Central Repository. Let’s add the dependency to our pom.xml:

<dependency>

<groupId>org.mockito</groupId>

<artifactId>mockito-core</artifactId>

<version>5.11.0</version>

<scope>test</scope>

</dependency>ArgumentMatchers are part of the Mockito framework: thanks to them, we can specify the mocked method’s behavior when the arguments match given conditions.

3. Overloaded Methods Definition

First, let’s define a method that takes an Integer as a parameter and always return 1 as a result:

Integer myMethod(Integer i) {

return 1;

}For the sake of our demonstration, we’ll want our overloaded method to use a custom type. Thus, let’s define this dummy class:

class MyOwnType {}We can now add an overloaded myMethod() that accepts a MyOwnType object as an argument and always return baeldung as a result:

String myMethod(MyOwnType myOwnType) {

return "baeldung";

}Intuitively, if we pass a null argument to myMethod(), the compiler won’t know which version it should use. Moreover, we can note that the method’s return type has no impact on this problem.

4. Ambiguous Call with isNull()

Let’s naively try to mock a call to myMethod() with a null parameter by using the base isNull() ArgumentMatcher:

@Test

void givenMockedMyMethod_whenMyMethod_ThenMockedResult(@Mock MyClass myClass) {

when(myClass.myMethod(isNull())).thenReturn(1);

}Given we called the class where myMethod() is defined MyClass, we nicely injected a mocked MyClass object via the test’s method parameters. We can also note that we didn’t add any assertion to the test yet. Let’s run this code:

java.lang.Error: Unresolved compilation problem:

The method myMethod(Integer) is ambiguous for the type MyClass

As we can see, the compiler can’t decide which version of myMethod() to use, thus throwing an error. Let’s underline that the compiler’s decision is only based on the method argument. Since we wrote thenReturn(1) in our instruction, as readers, we could guess that the intention is to use the version of myMethod() that returns an Integer. However, the compiler won’t use this part of the instruction in its decision process.

To solve this problem, we need to use the overloaded isNull() ArgumentMatcher that takes a class as an argument instead. For instance, to tell the compiler it should use the version that takes an Integer as a parameter, we can write:

@Test

void givenMockedMyMethod_whenMyMethod_ThenMockedResult(@Mock MyClass myClass) {

when(myClass.myMethod(isNull(Integer.class))).thenReturn(1);

assertEquals(1, myClass.myMethod((Integer) null));

}We added an assertion to complete the test, and it now runs successfully. Similarly, we can modify our test to use the other version of the method:

@Test

void givenCorrectlyMockedNullMatcher_whenMyMethod_ThenMockedResult(@Mock MyClass myClass) {

when(myClass.myMethod(isNull(MyOwnType.class))).thenReturn("baeldung");

assertEquals("baeldung", myClass.myMethod((MyOwnType) null));

}Lastly, let’s notice that we needed to give the type of null in the call to myMethod() in the assertion as well. Otherwise, this would throw for the same reason!

5. Ambiguous Call With any()

In the same way, we can try to mock a myMethod() call that accepts any argument by using the any() ArgumentMatcher:

@Test

void givenMockedMyMethod_whenMyMethod_ThenMockedResult(@Mock MyClass myClass) {

when(myClass.myMethod(any())).thenReturn(1);

}Running this code once again results in an ambiguous method call error. All the remarks we made in the previous case are still valid here. In particular, the compiler fails before even looking at the thenReturn() method’s argument.

The solution is also similar: we need to use a version of the any() ArgumentMatcher that clearly states what is the type of the expected argument:

@Test

void givenMockedMyMethod_whenMyMethod_ThenMockedResult(@Mock MyClass myClass) {

when(myClass.myMethod(anyInt())).thenReturn(1);

assertEquals(1, myClass.myMethod(2));

}Most base Java types already have a Mockito method defined for this purpose. In our case, the anyInt() method will accept any Integer argument. On the other hand, the other version of myMethod() accepts an argument of our custom MyOwnType type. Thus, we’ll need to use the overloaded version of the any() ArgumentMatcher that takes the object’s type as an argument:

@Test

void givenCorrectlyMockedNullMatcher_whenMyMethod_ThenMockedResult(@Mock MyClass myClass) {

when(myClass.myMethod(any(MyOwnType.class))).thenReturn("baeldung");

assertEquals("baeldung", myClass.myMethod((MyOwnType) null));

}Our tests are now working fine: we successfully eliminated the ambiguous method call error!

6. Conclusion

In this article, we understood why we can face an ambiguous method call error with the Mockito framework. Additionally, we showcased the solution to the problem. In real life, this kind of problem is most likely to arise when we’ve got overloaded methods with tons of arguments, and we decide to use the less constraining isNull() or any() ArgumentMatcher because the value of some of the arguments isn’t relevant to our test. In simple cases, most modern IDEs can point out the problem before we even need to run the test.

As always, the code is available over on GitHub.

Include null Value in JSON Serialization

1. Introduction

When we work with Java objects and need to convert them into JSON format, it’s crucial to handle null values appropriately. Omitting null values from the JSON output might not align with our data requirements, especially when data integrity is paramount.

In this tutorial, we’ll delve into effective methods for including null values during JSON serialization in Java.

2. Use Case Scenario: Customer Management System

Let’s consider that we’re developing a customer management system where each customer is a Java object with attributes like name, email, and age. Moreover, some customers may lack email addresses.

When serializing customer data into JSON for storage or transmission, including null values for missing email addresses is crucial to maintain data consistency.

For the sake of completeness, let’s define the Customer class used in our examples:

public class Customer {

@JsonProperty

private final String name;

@JsonProperty

private final String address;

@JsonProperty

private final int age;

public Customer(String name, String address, int age) {

this.name = name;

this.address = address;

this.age = age;

}

// ...

}Here, we define the Customer class which includes fields for name, address, and age, initialized through a constructor method. The toString() method also provides a string representation of the object in a JSON-like format for easy debugging.

Please note that including @JsonProperty annotations ensures that all relevant fields are accurately serialized into the JSON output, including null values when applicable.

3. Using Jackson Library

Jackson, a renowned Java library for JSON processing, typically excludes null values from JSON output by default. However, we can leverage Jackson’s annotations to include null values explicitly:

String expectedJson = "{\"name\":\"John\",\"address\":null,\"age\":25}";

Customer obj = new Customer("John", null, 25);

@Test

public void givenObjectWithNullField_whenJacksonUsed_thenIncludesNullValue() throws JsonProcessingException {

ObjectMapper mapper = new ObjectMapper();

mapper.setSerializationInclusion(JsonInclude.Include.ALWAYS);

String json = mapper.writeValueAsString(obj);

assertEquals(expectedJson, json);

}In this method, we begin by establishing the expectedJson string. Subsequently, we instantiate a Customer object with values John, null, and 25.

Moving forward, we configure an ObjectMapper instance to consistently include null values during serialization by invoking the setSerializationInclusion() method with the parameter JsonInclude.Include.ALWAYS. This meticulous setup ensures that even if the address is null, it’s accurately represented in the resulting JSON output.

Lastly, we use the assertEquals() method to verify that the json string matches the expectedJson.

4. Using Gson Library

Gson is an alternative Java library that can be used for JSON serialization and deserialization. It offers similar options to include null values explicitly. Let’s see an example:

@Test

public void givenObjectWithNullField_whenGsonUsed_thenIncludesNullValue() {

Gson gson = new GsonBuilder().serializeNulls().create();

String json = gson.toJson(obj);

assertEquals(expectedJson, json);

}Here, we employ the GsonBuilder to set up a Gson instance using the serializeNulls() method. This configuration ensures that null values are incorporated during serialization, ensuring precise representation in the resulting JSON output, even if the address field is null.

5. Conclusion

In conclusion, it’s essential to handle null values appropriately when working with Java objects and converting them into JSON format.

In this tutorial, we learned how to do it during JSON serialization.

As always, the source code for the examples is available over on GitHub.

@Subselect Annotation in Hibernate

1. Introduction

In this tutorial, we’ll review the @Subselet annotation in Hibernate, how to use it, and its benefits. We’ll also see Hibernate’s constraints on entities annotated as @Subselect and their consequences.

2. @Subselect Annotation Overview

@Subselect allows us to map an immutable entity to the SQL query. So let’s unroll this explanation a bit, starting from what entity to SQL query mapping even means.

2.1. Mapping To SQL Query

Normally, when we create our entities in Hibernate, we annotate them with @Entity. This annotation indicates that this is an entity, and it should be managed by persistence context. We may, optionally, provide @Table annotation as well to indicate to what table Hibernate should map this entity exactly. So, by default, whenever we create an entity in Hibernate, it assumes that an entity is mapped directly to a particular table. In most cases, that is exactly what we want, but not always.

Sometimes, our entity is not directly mapped into a particular table in DB but is a result of a SQL query execution. As an example, we might have a Client entity, where each instance of this entity is a row in a ResultSet of a SQL query (or SQL view) execution:

SELECT

u.id as id,

u.firstname as name,

u.lastname as lastname,

r.name as role

FROM users AS u

INNER JOIN roles AS r

ON r.id = u.role_id

WHERE u.type = 'CLIENT'The important thing is that there might be no dedicated clients table in DB at all. So that’s what mapping an entity into a SQL query means – we fetch entities from a sub-select SQL query, not from a table. This query may select from any tables and perform any logic within – Hibernate doesn’t care.

2.2. Immutability

So, we may have an entity that is not mapped to a particular table. As a direct consequence, it is unclear how to perform any INSERT/UPDATE statements. There is simply no clients (as in the example above) table that we can just insert records to.

Indeed, Hibernate doesn’t have a clue about what kind of SQL we execute to retrieve the data. Therefore, Hibernate cannot do any write operations on such an entity – it becomes read-only. The tricky thing here is that we can still ask Hibernate to insert this entity, but it will fail since it is impossible (at least according to ANSI SQL) to issue an INSERT into the sub-select.

3. Usage Example

Now, once we understand what @Subselect annotation does, let’s try to get our hands dirty and try to use it. Here, we have a simple RuntimeConfiguration:

@Data

@Entity

@Immutable

// language=sql

@Subselect(value = """

SELECT

ss.id,

ss.key,

ss.value,

ss.created_at

FROM system_settings AS ss

INNER JOIN (

SELECT

ss2.key as k2,

MAX(ss2.created_at) as ca2

FROM system_settings ss2

GROUP BY ss2.key

) AS t ON t.k2 = ss.key AND t.ca2 = ss.created_at

WHERE ss.type = 'SYSTEM' AND ss.active IS TRUE

""")

public class RuntimeConfiguration {

@Id

private Long id;

@Column(name = "key")

private String key;

@Column(name = "value")

private String value;

@Column(name = "created_at")

private Instant createdAt;

}

This entity represents a runtime parameter of our application. But, to just return the set of the up-to-date parameters that belong to our application, we need to execute a specific SQL query over system_settings table. As we can see, @Subselect annotation’s body contains that SQL statement. Now, because each RuntimeConfiguration entry is essentially a key value pair, we might want to implement a simple query – fetch the most recent active RuntimeConfiguration record that has a specific key.

Please also note that we marked our entity with @Immutable. Hibernate will, therefore, disable any dirty check tracking for our entity to avoid accidental UPDATE statements.

So, if we want to fetch RuntimeConfiguration with a specific key, we can do something like this:

@Test

void givenEntityMarkedWithSubselect_whenSelectingRuntimeConfigByKey_thenSelectedSuccessfully() {

String key = "config.enabled";

CriteriaBuilder criteriaBuilder = entityManager.getCriteriaBuilder();

CriteriaQuery<RuntimeConfiguration> query = criteriaBuilder.createQuery(RuntimeConfiguration.class);

var root = query.from(RuntimeConfiguration.class);

RuntimeConfiguration configurationParameter = entityManager

.createQuery(query.select(root).where(criteriaBuilder.equal(root.get("key"), key))).getSingleResult();

Assertions.assertThat(configurationParameter.getValue()).isEqualTo("true");

}Here, we’re using Hibernate Criteria API to query RuntimeConfiguration by key. Now, let’s check what query Hibernate actually produces in order to fulfill our request:

select

rc1_0.id,

rc1_0.created_at,

rc1_0.key,

rc1_0.value

from

( SELECT

ss.id,

ss.key,

ss.value,

ss.created_at

FROM

system_settings AS ss

INNER JOIN

( SELECT

ss2.key as k2, MAX(ss2.created_at) as ca2

FROM

system_settings ss2

GROUP BY

ss2.key ) AS t

ON t.k2 = ss.key

AND t.ca2 = ss.created_at

WHERE

ss.type = 'SYSTEM'

AND ss.active IS TRUE ) rc1_0

where

rc1_0.key=?As we can see, Hibernate just selects records from the SQL statement provided in @Subselect. Now, each filter that we provide will be applied to a resulting subselect records set.

4. Alternatives

Experienced Hibernate developers might notice that there are already some ways to achieve a similar result. One of these is using projection mapping into a DTO, and another is view mapping. Those two have their pros and cons. Let’s discuss them one by one.

4.1. Projection Mapping

So, let’s talk about DTO projections a bit. It allows the SQL queries to be mapped into a DTO projection that is not an entity. It is also considered faster to work with DTO projections than with entities. DTO projections are also immutable, which means Hibernate doesn’t manage such entities and doesn’t apply any dirty checks on them.

Though all of the above is true, DTO projections themselves have limitations. One of the most important is that DTO projections do not support associations. This is pretty obvious since we’re dealing with a DTO projection, which is not a managed entity. That makes DTO projections fast in Hibernate, but it also implies that Persistence Context does not manage these DTOs. So, we cannot have any OneToX or ManyToX fields on DTOs.

However, if we’re mapping an entity to an SQL statement, we’re still mapping an entity. It might have managed associations. So, this constraint is not applicable to entity-to-query mapping.

Another significant, essential, and conceptual difference is that @Subselect allows us to represent the entity as an SQL query. Hibernate will do what the annotation name suggests. It’ll just use the provided SQL query to select from it (so our query becomes a sub-select) and then apply additional filters. So, let’s assume that to fetch entity X, we need to perform some filtering, grouping, etc.. Then, if we use DTO projections, we would always have to write filters, groupings, etc., down in each JPQL or native query. When using @Subselect, we can specify this query once and select from it.

4.2. View Mapping

Although this is not widely known, Hibernate can map our entities to SQL views out of the box. That is very similar regarding entity to SQL query mapping. The view itself is almost always read-only in DB. Some exceptions exist in different RDBMS, like simple views in PostgreSQL, but that is entirely vendor-specific. It implies that our entities are also immutable, and we’ll just read from the underlying view, we won’t update/insert any data.

In general, the difference between @Subselect and entity-to-view mapping is very small. The former uses the exact SQL statement that we provide in annotation, while the latter uses an existing view. Both approaches support managed associations, so choosing one of these options entirely depends on our requirements.

5. Conclusion

In this article, we discussed how to use @Subselect to select entities not from a particular table but from a sub-select query. This is very convenient if we do not want to duplicate the same parts of SQL statements to fetch our entities. But this implies that our entities that use @Subselect are de-facto immutable, and we should not try to persist them from the application code. There are some alternatives to @Subselect, for instance, entity to view mapping in Hibernate or even DTO projection usage. They both have their advantages and disadvantages, therefore in order to make a choice we need to, as always, comply to requirements and common sense.

As always, the source code is available over on GitHub.

Java Weekly, Issue 535 – Out with the RestTemplate (again)

1. Spring and Java

>> Java 22 Delivers Foreign Memory & Memory API, Unnamed Variables & Patterns, and Return of JavaOne [infoq.com]

Last week, we celebrated the release of Java 22, but now it’s time to look into the amazing new features… and have a glimpse at what’s coming to Java 23!

>> Spring: Internals of RestClient [foojay.io]

RestTemplate is deprecated, and it’s time to dig deeper into its successor – the mighty RestClient

>> Reflectionless Templates With Spring [spring.io]

Template engines that don’t rely on reflection? Very cool

Also worth reading:

- >> Hello, Java 22! [spring.io]

- >> JDK 22 Security Enhancements [seanjmullan.org]

- >> MethodHandle primer [jornvernee.io]

- >> Token Exchange support in Spring Security 6.3.0-M3 [spring.io]

- >> The “Spring Way” of Doing Things: 9 Ways to Improve Your Spring Boot Skills [foojay.io]

- >> FEPCOS-J (4) Easy programming of a multithreaded TCP/IP server in Java [foojay.io]

- >> JDK 22 in Two Minutes! – Sip of Java [inside.java]

Webinars and presentations:

- >> Spring Tips: the Exposed ORM for Kotlin [spring.io]

- >> Vim Tips I Wish I Knew Earlier [sebastian-daschner.com]

- >> A Bootiful Podcast: Stuart Marks (aka ”Dr. Deprecator”) on Java, its amazing features, and more [spring.io]

Time to upgrade:

- >> Spring Authorization Server 1.2.3, 1.1.6 and 1.0.6 Available Now Including Fixes for CVE-2024-22258 [spring.io]

- >> Spring Session 3.2.2 and 3.1.5 are available now. [spring.io]

- >> Hibernate 6.5.0.CR1 [in.relation.to]

- >> Spring Integration 6.3.0-M2, 6.2.3 & 6.1.7 Available Now [spring.io]

- >> Spring Boot 3.1.10 available now [spring.io]

- >> Spring Boot 3.2.4 available now and 3.3.0-M3 [spring.io]

- >> Spring Shell 3.1.10 and 3.2.3 are now available [spring.io]

- >> Java News Roundup: JDK 22, GraalVM for JDK 22, Proposed Schedule for JDK 23, JMC 9.0 [infoq.com]

2. Technical & Musings

>> Localize applications with AI [foojay.io]

Another cool use case for AI – localization. Seems to work pretty well.

>> TDD: You’re Probably Doing It Just Fine [thecodewhisperer.com]

You don’t need to do TDD all the time – it’s ok to apply it selectively.

Also worth reading:

- >> Hello eBPF: Ring buffers in libbpf (6) [foojay.io]

- >> Announcing Oracle GraalVM for JDK 22 [oracle.com]

- >> Testing Event Sourcing, Emmett edition [event-driven.io]

- >> Pruning dead exception handlers [jornvernee.io]

3. Pick of the Week

>> Context-switching – one of the worst productivity killers in the engineering industry [eng-leadership.com]

Find Map Keys with Duplicate Values in Java

1. Overview

Map is an efficient structure for key-value storage and retrieval.

In this tutorial, we’ll explore different approaches to finding map keys with duplicate values in a Set. In other words, we aim to transform a Map<K, V> to a Map<V, Set<K>>.

2. Preparing the Input Examples

Let’s consider a scenario where we possess a map that stores the association between developers and the operating systems they primarily work on:

Map<String, String> INPUT_MAP = Map.of(

"Kai", "Linux",

"Eric", "MacOS",

"Kevin", "Windows",

"Liam", "MacOS",

"David", "Linux",

"Saajan", "Windows",

"Loredana", "MacOS"

);Now, we aim to find the keys with duplicate values, grouping different developers that use the same operating system in a Set:

Map<String, Set<String>> EXPECTED = Map.of(

"Linux", Set.of("Kai", "David"),

"Windows", Set.of("Saajan", "Kevin"),

"MacOS", Set.of("Eric", "Liam", "Loredana")

);While we primarily handle non-null keys and values, it’s worth noting that HashMap allows both null values and one null key. Therefore, let’s create another input based on INPUT_MAP to cover scenarios involving null keys and values:

Map<String, String> INPUT_MAP_WITH_NULLS = new HashMap<String, String>(INPUT_MAP) {{

put("Tom", null);

put("Jerry", null);

put(null, null);

}};As a result, we expect to obtain the following map:

Map<String, Set<String>> EXPECTED_WITH_NULLS = new HashMap<String, Set<String>>(EXPECTED) {{

put(null, new HashSet<String>() {{

add("Tom");

add("Jerry");

add(null);

}});

}};We’ll address various approaches in this tutorial. Next, let’s dive into the code.

3. Prior to Java 8

In our first idea to solve the problem, we initialize an empty HashMap<V, Set<K>> and populate it using in a classic for-each loop:

static <K, V> Map<V, Set<K>> transformMap(Map<K, V> input) {

Map<V, Set<K>> resultMap = new HashMap<>();

for (Map.Entry<K, V> entry : input.entrySet()) {

if (!resultMap.containsKey(entry.getValue())) {

resultMap.put(entry.getValue(), new HashSet<>());

}

resultMap.get(entry.getValue())

.add(entry.getKey());

}

return resultMap;

}As the code shows, transformMap() is a generic method. Within the loop, we use each entry’s value as the key for the resultant map and collect values associated with the same key into a Set.

transformMap() does the job no matter whether the input map contains null keys or values:

Map<String, Set<String>> result = transformMap(INPUT_MAP);

assertEquals(EXPECTED, result);

Map<String, Set<String>> result2 = transformMap(INPUT_MAP_WITH_NULLS);

assertEquals(EXPECTED_WITH_NULLS, result2);4. Java 8

Java 8 brought forth a wealth of new capabilities, simplifying collection operations with tools like the Stream API. Furthermore, it enriched the Map interface with additions such as computeIfAbsent().

In this section, we’ll tackle the problem using the functionalities introduced in Java 8.

4.1. Using Stream with groupingBy()

The groupingBy() collector, which ships with the Stream API, allows us to group stream elements based on a classifier function, storing them as lists under corresponding keys in a Map.

Hence, we can construct a stream of Entry<K, V> and employ groupingBy() to collect the stream into a Map<V, List<K>>. This aligns closely with our requirements. The only remaining step is converting the List<K> into a Set<K>.

Next, let’s implement this idea and see how to convert List<K> to a Set<K>:

Map<String, Set<String>> result = INPUT_MAP.entrySet()

.stream()

.collect(groupingBy(Map.Entry::getValue, mapping(Map.Entry::getKey, toSet())));

assertEquals(EXPECTED, result);As we can see in the above code, we used the mapping() collector to map the grouped result (List) to a different type (Set).

Compared to our transformMap() solution, the groupingBy() approach is easier to read and understand. Furthermore, as a functional solution, this approach allows us to apply further operations fluently.

However, the groupingBy() solution raises NullPointerException when it transforms our INPUT_MAP_WITH_NULLS input:

assertThrows(NullPointerException.class, () -> INPUT_MAP_WITH_NULLS.entrySet()

.stream()

.collect(groupingBy(Map.Entry::getValue, mapping(Map.Entry::getKey, toSet()))));This is because groupingBy() cannot handle null keys:

public static <T, K, D, A, M extends Map<K, D>>

Collector<T, ?, M> groupingBy(Function<? super T, ? extends K> classifier,...){

...

BiConsumer<Map<K, A>, T> accumulator = (m, t) -> {

K key = Objects.requireNonNull(classifier.apply(t), "element cannot be mapped to a null key");

...

}Next, let’s see if we can build a solution for both non-null and nullable keys or values.

4.2. Using forEach() and computeIfAbsent()

Java 8’s forEach() provides a concise way to iterate collections. computeIfAbsent() computes a new value for a specified key if the key is not already associated with a value or is mapped to null.

Next, let’s combine these two methods to solve our problem:

Map<String, Set<String>> result = new HashMap<>();

INPUT_MAP.forEach((key, value) -> result.computeIfAbsent(value, k -> new HashSet<>())

.add(key));

assertEquals(EXPECTED, result);This solution follows the same logic as transformMap(). However, with the power of Java 8, the implementation is more elegant and compact.

Furthermore, the forEach() + computeIfAbsent() solution works with maps containing null keys or values:

Map<String, Set<String>> result2 = new HashMap<>();

INPUT_MAP_WITH_NULLS.forEach((key, value) -> result2.computeIfAbsent(value, k -> new HashSet<>())

.add(key));

assertEquals(EXPECTED_WITH_NULLS, result2);5. Leveraging Guava Multimap

Guava, a widely used library, offers the Multimap interface, enabling the association of a single key with multiple values.

Now, let’s develop solutions based on Multimaps to address our problem.

5.1. Using Stream API and a Multimap Collector

We’ve seen how the Java Stream API empowers us to craft compact, concise, and functional implementations. Guava provides Multimap collectors, such as Multimaps.toMultimap(), that allow us to collect elements from a stream into a Multimap conveniently.

Next, let’s see how to combine a stream and Multimaps.toMultimap() to solve the problem:

Map<String, Set<String>> result = INPUT_MAP.entrySet()

.stream()

.collect(collectingAndThen(Multimaps.toMultimap(Map.Entry::getValue, Map.Entry::getKey, HashMultimap::create), Multimaps::asMap));

assertEquals(EXPECTED, result);The toMultimap() method takes three arguments:

- A Function to get key

- A Function to get value

- Multimap Supplier – Provide a Multimap object. In this example, we used HashMultimap to obtain a SetMultimap.

Thus, toMultimap() collect Map.Entry<K, V> objects into a SetMultimap<V, K>. Then, we employed collectingAndThen() to perform Multimaps.asMap() to convert the collected SetMultimap<V, K> to a Map<V, Set<K>>.

This approach works with maps holding null keys or values:

Map<String, Set<String>> result2 = INPUT_MAP_WITH_NULLS.entrySet()

.stream()

.collect(collectingAndThen(Multimaps.toMultimap(Map.Entry::getValue, Map.Entry::getKey, HashMultimap::create), Multimaps::asMap));

assertEquals(EXPECTED_WITH_NULLS, result2);5.2. Using invertFrom() and forMap()

Alternatively, we can use Multimaps.invertFrom() and Multimaps.forMap() to achieve our goal:

SetMultimap<String, String> multiMap = Multimaps.invertFrom(Multimaps.forMap(INPUT_MAP), HashMultimap.create());

Map<String, Set<String>> result = Multimaps.asMap(multiMap);

assertEquals(EXPECTED, result);

SetMultimap<String, String> multiMapWithNulls = Multimaps.invertFrom(Multimaps.forMap(INPUT_MAP_WITH_NULLS), HashMultimap.create());

Map<String, Set<String>> result2 = Multimaps.asMap(multiMapWithNulls);

assertEquals(EXPECTED_WITH_NULLS, result2);The forMap() method returns a Multimap<K, V> view of the specified Map<K, V>. Subsequently, as the name implies, invertFrom() converts the Multimap<K, V> view to a Multimap<V, K> and transfers it to the target Multimap provided as the second argument. Finally, we use Multimaps.asMap() again to convert Multimap<V, K> to Map<V, Set<K>>.

Also, as we can see, this approach works maps with null keys or values.

6. Conclusion

In this article, we’ve explored various ways to find map keys with duplicate values in a Set, including a classic loop-based solution, Java 8-based solutions, and approaches using Guava.

As always, the complete source code for the examples is available over on GitHub.

Merge Overlapping Intervals in a Java Collection

1. Overview

In this tutorial, we’ll look at taking a Collection of intervals and merging any that overlap.

2. Understanding the Problem

First, let’s define what an interval is. We’ll describe it as a pair of numbers where the second is greater than the first. This could also be dates, but we’ll stick with integers here. So for example, an interval could be 2 -> 7, or 100 -> 200.

We can create a simple Java class to represent an interval. We’ll have start as our first number and end as our second and higher number:

class Interval {

int start;

int end;

// Usual constructor, getters, setters and equals functions

}Now, we want to take a Collection of Intervals and merge any that overlap. We can define overlapping intervals as anywhere the first interval ends after the second interval starts. For example, if we take two intervals 2 -> 10 and 5 -> 15 we can see that they overlap and the result of merging them would be 2 -> 15.

3. Algorithm

To perform the merge we’ll need code following this pattern:

List<Interval> doMerge(List<Interval> intervals) {

// Sort the intervals based on the start

intervals.sort((one, two) -> one.start - two.start);

// Create somewhere to put the merged list, start it off with the earliest starting interval

ArrayList<Interval> merged = new ArrayList<>();

merged.add(intervals.get(0));

// Loop over each interval and merge if start is before the end of the

// previous interval

intervals.forEach(interval -> {

if (merged.get(merged.size() - 1).end > interval.start) {

merged.get(merged.size() - 1).setEnd(interval.end);

} else {

merged.add(interval);

}

});

return merged;

}To start we’ve defined a method that takes a List of Intervals and returns the same but sorted. Of course, there are other types of Collections our Intervals could be stored in, but we’ll use a List today.

The first step in the processing we need to take is to sort our Collection of Intervals. We’ve sorted based on the Interval’s start so that when we loop over them each Interval is followed by the one most likely to overlap with it.

We can use List.sort() to do this assuming our Collection is a List. However, if we had a different kind of Collection we’d have to use something else. List.sort() takes a Comparator and sorts in place, so we don’t need to reassign our variable. For our Comparator, we can subtract the start of the second Interval from the start of the first. This results in higher starts being placed after lower starts in our Collection.

Next, we need somewhere to put the results of our merging process. We’ve created an ArrayList called merged for this purpose. We can also get it started by putting in the first interval as we know it has the earliest start.

Now for the interesting part. We need to loop over every Interval and do one of two things. If the Interval starts before the end of the previous Interval, we merge them by setting the end of the previous Interval to the end of the current Interval. Alternatively, if the Interval starts after the end of the previous Interval we simply add it to the Collection.

Finally, we returned the list of merged Intervals.

4. Practical Example

Let’s see that method in action with a test. We can define four Intervals we want to merge:

ArrayList<Interval> intervals = new ArrayList<>(Arrays.asList(

new Interval(2, 5),

new Interval(13, 20),

new Interval(11, 15),

new Interval(1, 3)

));After merging we’d expect the result to be only two Intervals:

ArrayList<Interval> intervalsMerged = new ArrayList<>(Arrays.asList(

new Interval(1, 5),

new Interval(11, 20)

));We can now use the method we created earlier and confirm it works as expected:

@Test

void givenIntervals_whenMerging_thenReturnMergedIntervals() {

MergeOverlappingIntervals merger = new MergeOverlappingIntervals();

ArrayList<Interval> result = (ArrayList<Interval>) merger.doMerge(intervals);

assertArrayEquals(intervalsMerged.toArray(), result.toArray());

}5. Conclusion

In this article, we learned how to merge overlapping intervals in a Collection in Java. We saw that the process of merging the intervals is fairly simple. First, we have to know how to properly sort them, by start value. Then we just have to understand that we need to merge them when one interval starts before the previous one ends.

As always, the full code for the examples is available over on GitHub.

Finding Element by Attribute in Selenium

1. Introduction

Selenium offers plenty of methods to locate elements on a webpage, and we often need to find an element based on its attribute.

Attributes are additional pieces of information that can be added to provide more context or functionality. They can be broadly categorized into two types:

- Standard Attributes: These attributes are predefined and recognized by browsers. Examples include id, class, src, href, alt, title, etc. Standard attributes have predefined meanings and are widely used across different HTML elements.

- Custom Attributes: Custom attributes are those that are not predefined by HTML specifications but are often created by developers for their specific needs. These attributes typically start with “data-” followed by a descriptive name. For example, data-id, data-toggle, data-target, etc. Custom attributes are useful for storing additional information or metadata related to an element, and they are commonly used in web development to pass data between HTML and JavaScript.

In this tutorial, we’ll dive into using CSS to pinpoint elements on a web page. We’ll explore finding elements by their attribute name or description, with options for both full and partial matches. By the end, we’ll be masters at finding any element on a page with ease!

2. Find Element by Attribute Name

One of the simplest scenarios is finding elements based on the presence of a specific attribute. Consider a webpage with multiple buttons, each tagged with a custom attribute called “data-action.” Now, let’s say we want to locate all buttons on the page with this attribute. In such cases, we can use [attribute] locator:

driver.findElements(By.cssSelector("[data-action]"));In the code above, the [data-action] will select all elements on a page with a target attribute, and we’ll receive a list of WebElements.

3. Find Element by Attribute Value

When we need to locate a concrete element with a unique attribute value, we can use the strict match variant of the CSS locator [attribute=value]. This method allows us to find elements with exact attribute value matches.

Let’s proceed with our web page where buttons have a “data-action” attribute, each assigned a distinct action value. For instance, data-action=’delete’, data-action=’edit’, and so forth. If we want to locate buttons with a particular action, such as “delete”, we can employ the attribute selector with an exact match:

driver.findElement(By.cssSelector("[data-action='delete']"));4. Find Element by Starting Attribute Value

In situations where the exact attribute value may vary but starts from a specific substring, we can use another approach.

Let’s consider a scenario where our application has many pop-ups, each featuring an “Accept” button with a custom attribute named “data-action”. These buttons may have distinct identifiers appended after a shared prefix, such as “btn_accept_user_pop_up”, “btn_accept_document_pop_up”, and so forth. We can write a universal locator in the base class using [attribute^=value] locator:

driver.findElement(By.cssSelector("[data-action^='btn_accept']"));This locator will find an element where the “data-action” attribute value starts from “btn_accept”, so we can write a base class with a universal “Accept” button locator for each pop-up.

5. Find the Element by Ending the Attribute Value

Similarly, when our attribute value ends with a specific suffix, let’s use [attribute$=value]. Imagine that we have a list of URLs on a page and want to get all PDF document references:

driver.findElements(By.cssSelector("[href^='.pdf']"));In this example, the driver will find all elements where “href” attribute value ends with “.pdf”.

6. Find Element by Part of Attribute Value

When we’re not sure about our attribute prefix or suffix, there will be helpful a contains approach [attribute*=value]. Let’s consider a scenario where we want to get all elements with a reference to a specific resource path:

driver.findElements(By.cssSelector("[href*='archive/documents']"));In this example, we’ll receive all elements with reference to a document from the archive folder.

7. Find Element by Specific Class

We can locate an element by using its class as an attribute. This is a common technique, especially when checking if an element is enabled, disabled, or has gained some other capability reflected in its class. Consider that we want to find a disabled button:

<a href="#" class="btn btn-primary btn-lg disabled" role="button" aria-disabled="true">Accept</a>This time, let’s use a strict match for the role and contain a match for a class:

driver.findElement(By.cssSelector("[role='button'][class*='disabled']"));In this example, “class” was used as attribute locator [attribute*=value] and detected in value “btn btn-primary btn-lg disabled” a “disabled” part.

8. Conclusion

In this tutorial, we’ve explored different ways to locate elements based on their attributes.

We’ve categorized attributes into two main types: standard, which are recognized by browsers and have predefined meanings, and custom, which are created by developers to fulfill specific requirements.

Using CSS selectors, we’ve learned how to efficiently find elements based on attribute names, values, prefixes, suffixes, or even substrings. Understanding these methods gives us powerful tools to locate elements easily, making our automation tasks smoother and more efficient.

As always, all code examples are available over on GitHub.

The @Struct Annotation Type in Hibernate – Structured User-Defined Types

1. Overview

In this tutorial, we’ll review Hibernate’s @Struct annotation which let’s developers create structured user-defined types.

Support for structured user-defined types, a.k.a structured types, was introduced in the SQL:1999 standard, which was a feature of object-relational (ORM) databases.

Structured or composite types have their use cases, especially since support for JSON was introduced in the SQL:2016 standard. Values of these structured types provide access to their sub-parts and do not have an identifier or primary key like a row in a table.

2. Struct Mapping

Hibernate lets you specify structured types through the annotation type @Struct for the class annotated with the @Embeddable annotation or the @Embedded attribute.

2.1. @Struct for Mapping Structured Types

Consider the below example of a Department class which has an @Embedded Manager class (a structured type):

@Entity

public class Department {

@Id

@GeneratedValue

private Integer id;

@Column

private String departmentName;

@Embedded

private Manager manager;

}The Manager class defined with @Struct annotation is given below:

@Embeddable

@Struct(name = "Department_Manager_Type", attributes = {"firstName", "lastName", "qualification"})

public class Manager {

private String firstName;

private String lastName;

private String qualification;

}2.2. The Difference Between @Embeddable and @Struct Annotations

A class annotated with @Struct maps the class to the structured user-defined type in the database. For example, without the @Struct annotation, the @Embedded Manager object, despite being a separate type, will be part in the Department table as shown in the DDL below:

CREATE TABLE DEPRARTMENT (

Id BIGINT,

DepartmentName VARCHAR,

FirstName VARCHAR,

LastName VARCHAR,

Qualification VARCHAR

);The Manager class, with the @Struct annotation, will produce a user-defined type similar to:

create type Department_Manager_Type as (

firstName VARCHAR,

lastName VARCHAR,

qualification VARCHAR

)With the added @Struct annotation, the Department object as shown in the DDL below:

CREATE TABLE DEPRARTMENT (

Id BIGINT,

DepartmentName VARCHAR,

Manager Department_Manager_Type

);2.3. @Struct Annotation and Order of Attributes

Since a structured type has multiple attributes, the order of the attributes is very important for mapping data to the right attributes. One way to define the order of attributes is through the “attributes” field of the @Struct annotation.

In the Manager class above, you can see the “attributes” field of the @Struct annotation, which specifies that Hibernate expects the order of the Manager attributes (while Serializing and De-serializing it) to be “firstName”, “lastName” and finally “qualification”.

The second way to define the order of attributes is by using a Java record to implicitly specify the order through the canonical constructor, for example:

@Embeddable

@Struct(name = "Department_Manager")

public record Manager(String lastName, String firstName, String qualification) {}Above, the Manager record attributes will have the following order: “lastName”, “firstName” and “qualification”.

3. JSON Mapping

Since JSON is a predefined unstructured type, no type name or attribute order has to be defined. Mapping an @Embeddable as JSON can be done by annotating the embedded field/property with @JdbcTypeCode(SqlTypes.JSON).

For example, the below class holds a Manager object which is also a JSON unstructured type:

@Entity

public class Department_JsonHolder {

@Id

@GeneratedValue

private int id;

@JdbcTypeCode(SqlTypes.JSON)

@Column(name = "department_manager_json")

private Manager manager;

}Below is the expected DDL code for the class above:

create table Department_JsonHolder as (

id int not null primary key,

department_manager_json json

)Below is the example HQL query to select attributes from the department_manager_json column:

select djh.manager.firstName, djh.manager.lastName, djh.manager.qualifications

from department_jsonholder djh4. Conclusion

The difference between an @Embeddable and an @Embeddable @Struct is that the latter is actually a user-defined type in the underlying database. Although many databases support user-defined types, the hibernate dialects that have support for @Struct annotation are:

- Oracle

- DB2

- PostgreSQL

In this article, we discussed Hibernate’s @Struct annotation, how to use it, and when to add it to a domain class.

The source code for the article is available over on GitHub.

Get Last n Characters From a String

1. Overview

In this tutorial, we’ll explore a few different ways to get the last n characters from a String.

Let’s pretend that we have the following String whose value represents a date:

String s = "10-03-2024";From this String, we want to extract the year. In other words, we want just the last four characters, so, n is:

int n = 4;2. Using the substring() Method

We can use an overload of the substring() method that obtains the characters starting inclusively at the beginIndex and ending exclusively at the endIndex:

@Test

void givenString_whenUsingTwoArgSubstringMethod_thenObtainLastNCharacters() {

int beginIndex = s.length() - n;

String result = s.substring(beginIndex, s.length());

assertThat(result).isEqualTo("2024");

}Since we want the last n characters, we first determine our beginIndex by subtracting n from the length of the String. Finally, we supply the endIndex as simply the length of the String.

And since we’re only interested in the last n characters, we can make use of the more convenient overload of the substring() method that only takes the beginIndex as a method argument:

@Test

void givenString_whenUsingOneArgSubstringMethod_thenObtainLastNCharacters() {

int beginIndex = s.length() - n;

assertThat(s.substring(beginIndex)).isEqualTo("2024");

}This method returns the characters ranging from the beginIndex inclusively and terminates at the end of the String.

Finally, it’s worth mentioning the existence of the subSequence() method of the String class, which uses substring() under the hood. Even though it could be used to solve our use case, it would be considered more appropriate to just use the substring() method from a readability perspective.

3. Using the StringUtils.right() Method From Apache Commons Lang 3

To use Apache Commons Lang 3, we need to add its dependency to our pom.xml:

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.14.0</version>

</dependency>We can use the StringUtils.right() method to obtain the last n characters:

@Test

void givenString_whenUsingStringUtilsRight_thenObtainLastNCharacters() {

assertThat(StringUtils.right(s, n)).isEqualTo("2024");

}Here, we just need to supply the String in question and the number of characters we want to return from the end of the String. Thus, this would be the preferred solution for our use case as we remove the need to calculate indexes, resulting in more maintainable and bug-free code.

4. Using the Stream API

Now, let’s explore what a functional programming solution might look like.

One option for our Stream source would be to use the chars() method. This method returns an IntStream whose primitive int elements represent the characters in the String:

@Test

void givenString_whenUsingIntStreamAsStreamSource_thenObtainLastNCharacters() {

String result = s.chars()

.mapToObj(c -> (char) c)

.skip(s.length() - n)

.map(String::valueOf)

.collect(Collectors.joining());

assertThat(result).isEqualTo("2024");

}Here, we use the mapToObj() intermediate operation to convert each int element to a Character object. Now that we have a Character stream, we can use the skip() operation to retain only the elements after a certain index.

Next, we use the map() operation with the String::valueOf method reference. We convert each Character element to a String because we want to use the terminal collect() operation with the Collectors.joining() static method.

Another functional approach uses the toCharArray() method initially instead:

@Test

void givenString_whenUsingStreamOfCharactersAsSource_thenObtainLastNCharacters() {

String result = Arrays.stream(ArrayUtils.toObject(s.toCharArray()))

.skip(s.length() - n)

.map(String::valueOf)

.collect(Collectors.joining());

assertThat(result).isEqualTo("2024");

}This method returns an array of char primitives. We can use the ArrayUtils.toObject() from Apache Commons Lang 3 to convert our array to an array of Character elements. Finally, we obtain a Character stream using the static method Arrays.stream(). From here, our logic remains the same to obtain the last n characters.

As we can see above, we need to do a lot of work to achieve our goal using a functional programming approach. This highlights that functional programming should only be used when it’s appropriate to do so.

5. Conclusion

In this article, we’ve explored several different ways to get the last n characters from a String. We highlighted that an imperative approach over a functional one yielded a more concise and readable solution. We should note that the examples of extracting the year from a String explored in this article were just for demonstration purposes. More appropriate ways of achieving this exist such as parsing the String as a LocalDate and using the getYear() method.

As always, the code samples used in this article are available over on GitHub.

Logging in Spring Boot With Loki

1. Introduction

Grafana Labs developed Loki, an open-source log aggregation system inspired by Prometheus. Its purpose is to store and index log data, facilitating efficient querying and analysis of logs generated by diverse applications and systems.

In this article, we’ll set up logging with Grafana Loki for a Spring Boot application. Loki will collect and aggregate the application logs, and Grafana will display them.

2. Running Loki and Grafana Services

We’ll first spin up Loki and Grafana services so that we can collect and observe logs. Docker containers will help us to more easily configure and run them.

First, let’s compose Loki and Grafana services in a docker-compose file:

version: "3"

networks:

loki:

services:

loki:

image: grafana/loki:2.9.0

ports:

- "3100:3100"

command: -config.file=/etc/loki/local-config.yaml

networks:

- loki

grafana:

environment:

- GF_PATHS_PROVISIONING=/etc/grafana/provisioning

- GF_AUTH_ANONYMOUS_ENABLED=true

- GF_AUTH_ANONYMOUS_ORG_ROLE=Admin

entrypoint:

- sh

- -euc

- |

mkdir -p /etc/grafana/provisioning/datasources

cat <<EOF > /etc/grafana/provisioning/datasources/ds.yaml

apiVersion: 1

datasources:

- name: Loki

type: loki

access: proxy

orgId: 1

url: http://loki:3100

basicAuth: false

isDefault: true

version: 1

editable: false

EOF

/run.sh

image: grafana/grafana:latest

ports:

- "3000:3000"

networks:

- lokiNext, we need to bring up the services using the docker-compose command:

docker-compose upFinally, let’s confirm if both services are up:

docker ps

211c526ea384 grafana/loki:2.9.0 "/usr/bin/loki -conf…" 4 days ago Up 56 seconds 0.0.0.0:3100->3100/tcp surajmishra_loki_1

a1b3b4a4995f grafana/grafana:latest "sh -euc 'mkdir -p /…" 4 days ago Up 56 seconds 0.0.0.0:3000->3000/tcp surajmishra_grafana_13. Configuring Loki With Spring Boot

Once we’ve spun up Grafana and the Loki service, we need to configure our application to send logs to it. We’ll use loki-logback-appender, which will be responsible for sending logs to the Loki aggregator to store and index logs.

First, we need to add loki-logback-appender in the pom.xml file:

<dependency>

<groupId>com.github.loki4j</groupId>

<artifactId>loki-logback-appender</artifactId>

<version>1.4.1</version>

</dependency>Second, we need to create a logging-spring.xml file under the src/main/resources folder. This file will control the logging behavior, such as the format of logs, the endpoint of our Loki service, and others, for our Spring Boot application:

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<appender name="LOKI" class="com.github.loki4j.logback.Loki4jAppender">

<http>

<url>http://localhost:3100/loki/api/v1/push</url>

</http>

<format>

<label>

<pattern>app=${name},host=${HOSTNAME},level=%level</pattern>

<readMarkers>true</readMarkers>

</label>

<message>

<pattern>

{

"level":"%level",

"class":"%logger{36}",

"thread":"%thread",

"message": "%message",

"requestId": "%X{X-Request-ID}"

}

</pattern>

</message>

</format>

</appender>

<root level="INFO">

<appender-ref ref="LOKI" />

</root>

</configuration>Once we finish setting up, let’s write a simple service that logs data at INFO level:

@Service

class DemoService{

private final Logger LOG = LoggerFactory.getLogger(DemoService.class);

public void log(){

LOG.info("DemoService.log invoked");

}

}4. Test Verification

Let’s conduct a live test by spinning up Grafana and Loki containers, and then executing the service method to push the logs to Loki. Afterward, we’ll query Loki using the HTTP API to confirm if the log was indeed pushed. For spinning up Grafana and Loki containers, please see the earlier section.

First, let’s execute the DemoService.log() method, which will call Logger.info(). This sends a message using the loki-logback-appender, which Loki will collect:

DemoService service = new DemoService();

service.log();Second, we’ll create a request for invoking the REST endpoint provided by the Loki HTTP API. This GET API accepts query parameters representing the query, start time, and end time. We’ll add those parameters as part of our request object:

HttpHeaders headers = new HttpHeaders();

headers.setContentType(MediaType.APPLICATION_JSON);

String query = "{level=\"INFO\"} |= `DemoService.log invoked`";

// Get time in UTC

LocalDateTime currentDateTime = LocalDateTime.now(ZoneOffset.UTC);

String current_time_utc = currentDateTime.format(DateTimeFormatter.ofPattern("yyyy-MM-dd'T'HH:mm:ss'Z'"));

LocalDateTime tenMinsAgo = currentDateTime.minusMinutes(10);

String start_time_utc = tenMinsAgo.format(DateTimeFormatter.ofPattern("yyyy-MM-dd'T'HH:mm:ss'Z'"));

URI uri = UriComponentsBuilder.fromUriString(baseUrl)

.queryParam("query", query)

.queryParam("start", start_time_utc)

.queryParam("end", current_time_utc)

.build()

.toUri();Next, let’s use the request object to execute REST request:

RestTemplate restTemplate = new RestTemplate();

ResponseEntity<String> response = restTemplate.exchange(uri, HttpMethod.GET, new HttpEntity<>(headers), String.class);Now we need to process the response and extract the log messages we’re interested in. We’ll use an ObjectMapper to read the JSON response and extract the log messages:

ObjectMapper objectMapper = new ObjectMapper();

List<String> messages = new ArrayList<>();

String responseBody = response.getBody();

JsonNode jsonNode = objectMapper.readTree(responseBody);

JsonNode result = jsonNode.get("data")

.get("result")

.get(0)

.get("values");

result.iterator()

.forEachRemaining(e -> {

Iterator<JsonNode> elements = e.elements();

elements.forEachRemaining(f -> messages.add(f.toString()));

});

Finally, let’s assert that the messages we receive in the response contain the message that was logged by the DemoService:

assertThat(messages).anyMatch(e -> e.contains(expected));5. Log Aggregation and Visualization

Our service logs are pushed to the Loki service because of the configuration setup with the loki-logback-appender. We can visualize it by visiting http://localhost:3000 (where the Grafana service is deployed) in our browser.

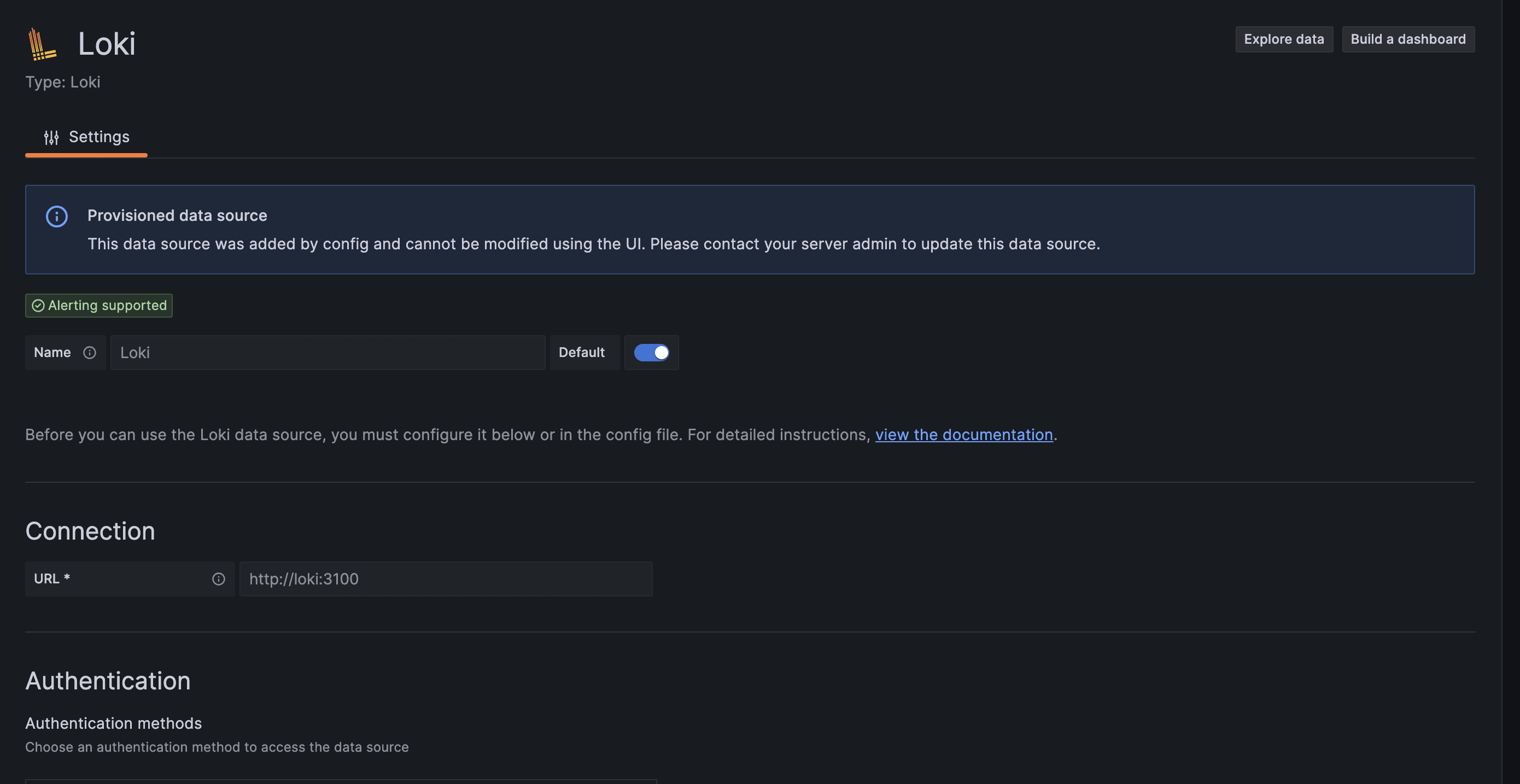

To see logs that have been stored and indexed in Loki, we need to use Grafana. The Grafana datasource provides configurable connection parameters for Loki where we need to enter the Loki endpoint, authentication mechanism, and more.

First, let’s configure the Loki endpoint to which logs have been pushed:

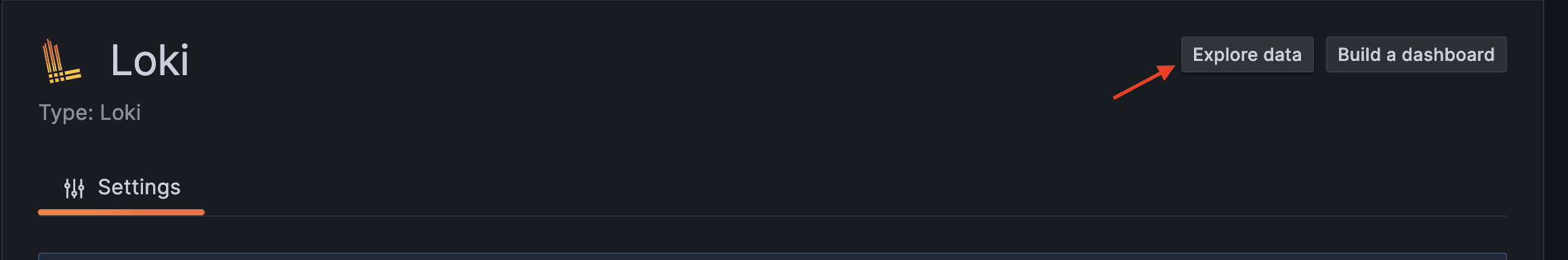

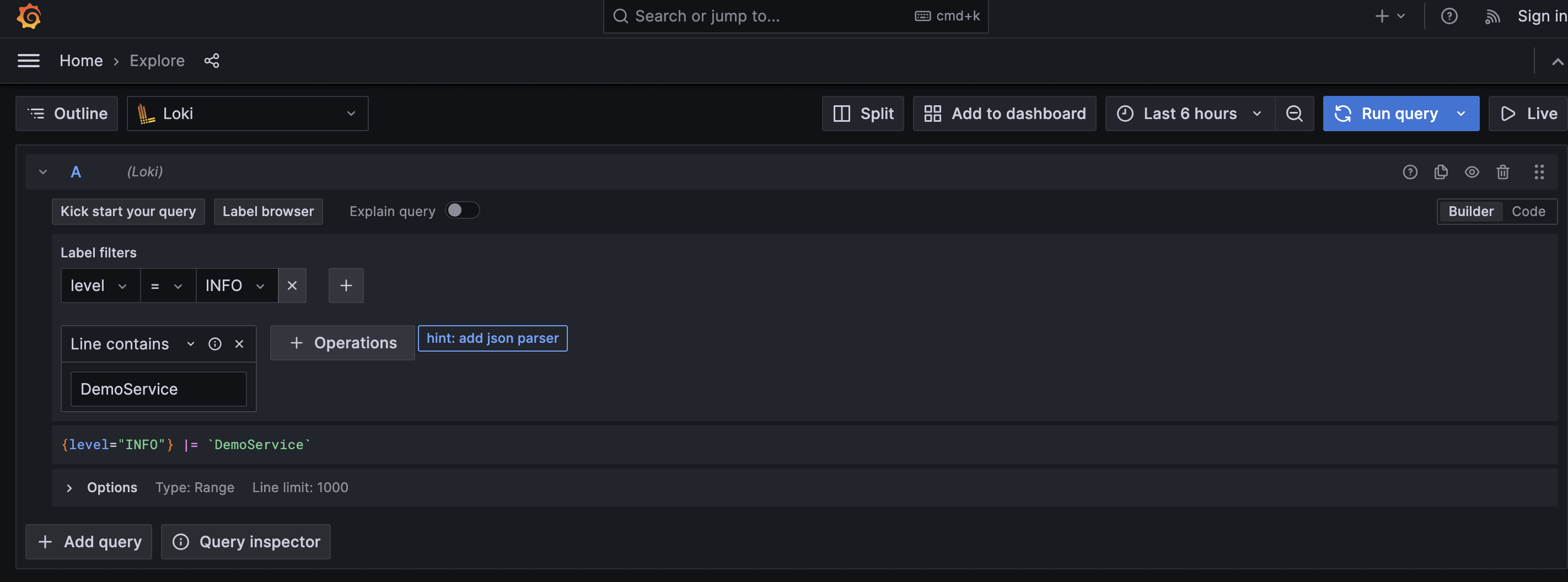

Once we’ve successfully configured the data source, let’s move to explore the data page for querying our logs:

We can write our query to fetch app logs into Grafana for visualization. In our demo service, we’re pushing INFO logs, so we need to add that to our filter and run the query:

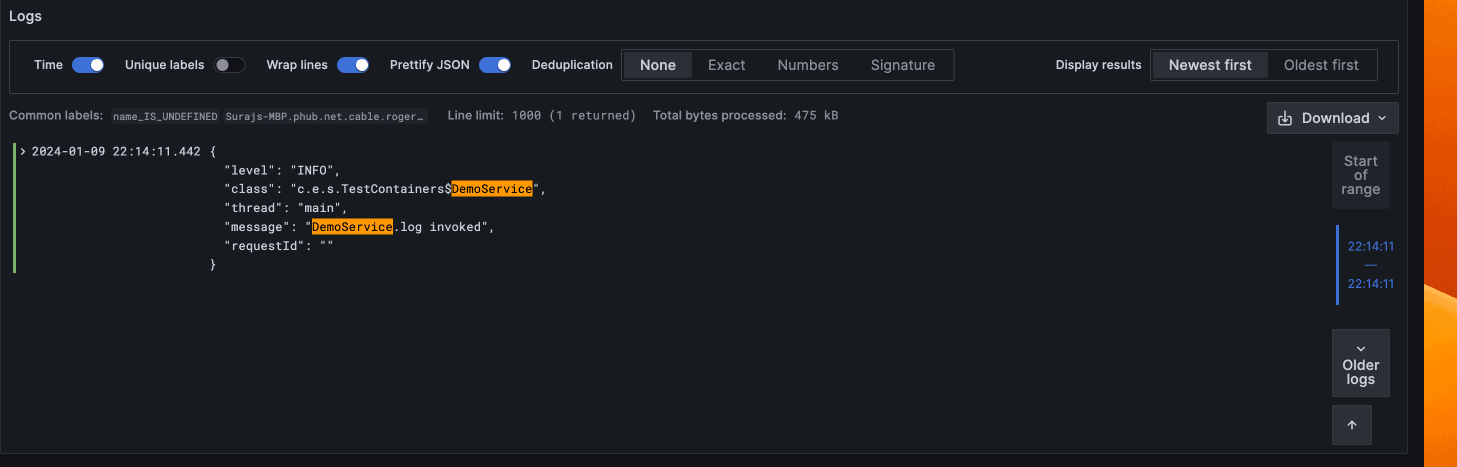

Once we run the query, we’ll see all the INFO logs that match our search:

6. Conclusion

In this article, we set up logging for a Spring Boot application with Grafana Loki. We also verified our setup with a unit test and visualization, using simple logic that logged INFO logs and setting a Loki data source in Grafana.

As always, the example code is available over on GitHub.

Check if an Element Is Present in a Set in Java

1. Overview

In this short tutorial, we’ll cast light on how to check if an element is present in a Set in Java.

First, we’ll kick things off by exploring solutions using core JDK. Then, we’ll elucidate how to achieve the same outcome using external libraries such as Apache Commons.

2. Using Core JDK

Java provides several convenient ways to check whether a Set contains a given element. Let’s explore these and see how to use them in practice.

2.1. Set#contains() Method

As the name implies, this method checks if a specific Set contains the given element. It’s one of the easiest solutions that we can use to answer our central question:

@Test

void givenASet_whenUsingContainsMethod_thenCheck() {

assertThat(CITIES.contains("London")).isTrue();

assertThat(CITIES.contains("Madrid")).isFalse();

}Typically, the contains() method returns true if the given element is present in the Set, and false otherwise.

According to the documentation, the method returns true if and only:

object==null ? element==null : object.equals(element);This is why it’s important to implement the equals() method within the class to which the Set objects belong. That way, we can customize the equality logic to account for either all or some of the class fields.

In short, the contains() method offers the most concise and straightforward way to check if an element is present in a given Set compared to the other methods.

2.2. Collections#disjoint() Method

The Collections utility class provides another method, called disjoint(), that we can use to check if a Set contains a given element.

This method accepts two collections as parameters and returns true if they have no elements in common:

@Test

public void givenASet_whenUsingCollectionsDisjointMethod_thenCheck() {

boolean isPresent = !Collections.disjoint(CITIES, Collections.singleton("Paris"));

assertThat(isPresent).isTrue();

}Overall, we created an immutable Set containing only the given string “Paris”. Furthermore, we used the disjoint() method with the negation operator to check whether the two collections have elements in common.

2.3. Stream#anyMatch() Method

The Stream API offers the anyMatch() method that we can use to verify if any element of a given collection matches the provided predicate.

So, let’s see it in action:

class CheckIfPresentInSetUnitTest {

private static final Set<String> CITIES = new HashSet<>();

@BeforeAll

static void setup() {

CITIES.add("Paris");

CITIES.add("London");

CITIES.add("Tokyo");

CITIES.add("Tamassint");

CITIES.add("New york");

}

@Test

void givenASet_whenUsingStreamAnyMatchMethod_thenCheck() {

boolean isPresent = CITIES.stream()

.anyMatch(city -> city.equals("London"));

assertThat(isPresent).isTrue();

}

}As we can see, we used the predicate city.equals(“London”) to check if there is any city in the stream that matches the condition of being equal to “London”.

2.4. Stream#filter() Method

Another solution would be using the filter() method. It returns a new stream consisting of elements that satisfy the provided condition.

In other words, it filters the stream based on the specified condition:

@Test

void givenASet_whenUsingStreamFilterMethod_thenCheck() {

long resultCount = CITIES.stream()

.filter(city -> city.equals("Tamassint"))

.count();

assertThat(resultCount).isPositive();

}As shown above, we filtred our Set to include only elements that are equal to the value “Tamassint”. Then, we used the terminal operation count() to return the number of the filtered elements.

3. Using Apache Commons Collections

The Apache Commons Collections library is another option to consider if we want to check if a given element is present in a Set. Let’s start by adding its dependency to the pom.xml file:

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-collections4</artifactId>

<version>4.4</version>

</dependency>

3.1. CollectionUtils#containsAny() Method

CollectionUtils provides a set of utility methods to perform common operations on collections. Among these methods, we find containsAny(Collection<?> coll1, Collection<?> coll2). This method returns true if at least one element of the second collection is also contained in the first collection.

So, let’s see it in action:

@Test

void givenASet_whenUsingCollectionUtilsContainsAnyMethod_thenCheck() {

boolean isPresent = CollectionUtils.containsAny(CITIES, Collections.singleton("Paris"));

assertThat(isPresent).isTrue();

}Similarly, we created a singleton Set containing one element, “Paris”. Then, we used the containsAny() method to check if our collection CITIES contains the given value “Paris”.

3.2. SetUtils#intersection() Method

Alternatively, we can use the SetUtils utility class to tackle our challenge. This class offers the intersection(Set<? extends E> a, Set<? extends E> b) method that returns a new Set containing elements that are both present in the specified two Sets:

@Test

void givenASet_whenUsingSetUtilsIntersectionMethod_thenCheck() {

Set<String> result = SetUtils.intersection(CITIES, Collections.singleton("Tamassint"));

assertThat(result).isNotEmpty();

}In a nutshell, the idea here is to verify whether the city “Tamassint” is present in CITIES by checking if the intersection between CITIES and the given singleton Set is not empty.

4. Conclusion

In this short article, we explored the nitty-gritty of how to check if a Set contains a given element in Java.

First, we saw how to do this using ready-to-use JDK methods. Then, we demonstrated how to accomplish the same goal using the Apache Commons Collections library.

As always, the code used in this article can be found over on GitHub.

Convert TemporalAccessor to LocalDate

1. Introduction

Dealing with date and time values is a common task. Sometimes, we may need to convert a TemporalAccessor object to a LocalDate object to perform date-specific operations. Hence, this could be useful when parsing date-time strings or extracting date components from a date-time object.

In this tutorial, we’ll explore different approaches to achieve this conversion in Java.

2. Using LocalDate.from() Method

One straightforward approach to convert a TemporalAccessor to a LocalDate is to use the LocalDate.from(TemporalAccessor temporal) method. Practically, this method extracts date components (year, month, and day) from the TemporalAccessor and constructs a LocalDate object. Let’s see an example:

String dateString = "2022-03-28";

TemporalAccessor temporalAccessor = DateTimeFormatter.ISO_LOCAL_DATE.parse(dateString);@Test

public void givenTemporalAccessor_whenUsingLocalDateFrom_thenConvertToLocalDate() {

LocalDate convertedDate = LocalDate.from(temporalAccessor);

assertEquals(LocalDate.of(2022, 3, 28), convertedDate);

}In this code snippet, we initialize a String variable dateString with the value (2022-03-28), representing a date in the (ISO 8601) format. In addition, we use the DateTimeFormatter.ISO_LOCAL_DATE.parse() method to parse this string into a TemporalAccessor object temporalAccessor.

Subsequently, we employ the LocalDate.from(temporalAccessor) method to convert temporalAccessor into a LocalDate object convertedDate, effectively extracting and constructing the date components.

Finally, through the assertion assertEquals(LocalDate.of(2022, 3, 28), convertedDate), we ensure that the conversion results in convertedDate matching the expected date.

3. Using TemporalQueries

Another approach to convert a TemporalAccessor to a LocalDate is by using TemporalQueries. We can define a custom TemporalQuery to extract the necessary date components and construct a LocalDate object. Here’s an example:

@Test

public void givenTemporalAccessor_whenUsingTemporalQueries_thenConvertToLocalDate() {

int year = temporalAccessor.query(TemporalQueries.localDate()).getYear();

int month = temporalAccessor.query(TemporalQueries.localDate()).getMonthValue();

int day = temporalAccessor.query(TemporalQueries.localDate()).getDayOfMonth();

LocalDate convertedDate = LocalDate.of(year, month, day);

assertEquals(LocalDate.of(2022, 3, 28), convertedDate);

}In this test method, we invoke the temporalAccessor.query(TemporalQueries.localDate()) method to obtain a LocalDate instance representing the date extracted from temporalAccessor.

We then retrieve the year, month, and day components from this LocalDate instance using getYear(), getMonthValue(), and getDayOfMonth() methods, respectively. Subsequently, we construct a LocalDate object convertedDate using the LocalDate.of() method with these extracted components.

4. Conclusion

In conclusion, converting a TemporalAccessor to a LocalDate in Java can be achieved using the LocalDate.from() or TemporalQueries. Besides, these methods provide flexible and efficient ways to perform the conversion, enabling seamless integration of date-time functionalities in Java applications.

As usual, the accompanying source code can be found over on GitHub.

Get a Path to a Resource in a Java JAR File

1. Introduction

Resources within a JAR file are typically accessed using a path relative to the root of the JAR file in Java. Furthermore, it’s essential to understand how these paths are structured to retrieve resources effectively.

In this tutorial, we’ll explore different methods to obtain the path to a resource within a Java JAR file.

2. Getting URL for Resource Using Class.getResource() Method

The Class.getResource() method provides a straightforward way to obtain the URL of a resource within a JAR file. Let’s see how we can use this method:

@Test

public void givenFile_whenClassUsed_thenGetResourcePath() {

URL resourceUrl = GetPathToResourceUnitTest.class.getResource("/myFile.txt");

assertNotNull(resourceUrl);

}In this test, we call the getResource() method on the GetPathToResourceUnitTest.class, passing the path to the resource file “/myFile.txt” as an argument. Then, we assert that the obtained resourceUrl is not null, indicating that the resource file was successfully located.

3. Using ClassLoader.getResource() Method

Alternatively, we can use the ClassLoader.getResource() method to access resources within a JAR file. This method is useful when the resource path is not known at compile time:

@Test

public void givenFile_whenClassLoaderUsed_thenGetResourcePath() {

URL resourceUrl = GetPathToResourceUnitTest.class.getClassLoader().getResource("myFile.txt");

assertNotNull(resourceUrl);

}In this test, we’re employing the class loader GetPathToResourceUnitTest.class.getClassLoader() to fetch the resource file. Unlike the previous approach, this method doesn’t rely on the class’s package structure. Instead, it searches for the resource file at the root level of the classpath.

This means it can locate resources regardless of their location within the project structure, making it more flexible for accessing resources located outside the class’s package.

4. Using Paths.get() Method

Starting from Java 7, we can use the Paths.get() method to obtain a Path object representing a resource within a JAR file. Here’s how:

@Test

public void givenFile_whenPathUsed_thenGetResourcePath() throws Exception {

Path resourcePath = Paths.get(Objects.requireNonNull(GetPathToResourceUnitTest.class.getResource("/myFile.txt")).toURI());

assertNotNull(resourcePath);

}Here, we first call the getResource() method to retrieve the URL of the resource file. Then, we convert this URL to a URI and pass it to Paths.get() to obtain a Path object representing the resource file’s location.

This approach is beneficial when we need to work with the resource file as a Path object. It enables more advanced file operations, such as reading or writing content to the file. Moreover, it offers a convenient way to interact with resources within the context of the Java NIO package.

5. Conclusion

In conclusion, accessing resources within a Java JAR file is crucial for many applications. Whether we prefer the simplicity of Class.getResource(), the flexibility of ClassLoader.getResource(), or the modern approach with Paths.get(), Java provides various methods to accomplish this task efficiently.

As usual, the accompanying source code can be found over on GitHub.

Java Weekly, Issue 536 – Java keeps evolving through JEPs

1. Spring and Java

>> Code Before super() – JEP 447 [javaspecialists.eu]

A revolution is coming to Java! Soon, you will be able to add custom code before a super() call

>> Java users on macOS 14 running on Apple silicon systems should skip macOS 14.4 and update directly to macOS 14.4.1 [oracle.com]

The situation got stable – Java now works correctly on Apple Silicon.

>> JEP targeted to JDK 23: 466: Class-File API (Second Preview) [openjdk.org]

It’s good to see JDK provide low-level public APIs. No more need for Unsafe!

Also worth reading:

- >> Oracle Announces Free Java SE Technical Support for Academic Institutions in Oracle Academy [oracle.com]

- >> Aspect-Oriented Programming (AOP) Made Easy With Java [foojay.io]

- >> A preview of Jakarta Data 1.0 [in.relation.to]

- >> A summary of Jakarta Persistence 3.2 [in.relation.to]

Webinars and presentations:

- >> A Bootiful Podcast: Joseph Ottinger and Andrew Lombardi on “Beginning Spring 6” [spring.io]

- >> Project Wayfield – The JDK Wayland Desktop on Linux [inside.java]

- >> Foojay Podcast #46: JUG Switzerland [foojay.io]

Time to upgrade:

- >> GraalVM for JDK 22 Delivers Support for JDK 22 JEPs and New Truffle Version [infoq.com]

- >> New Java Platform Extension for VS Code Release [inside.java]

- >> Spring for GraphQL 1.2.6 released [spring.io]

- >> Spring Cloud 2023.0.1 (aka Leyton) Has Been Released [spring.io]

2. Technical & Musings

>> The xz Backdoor Should Not Happen Again [techblog.bozho.net]

This shouldn’t happen again, but if the approach towards open source doesn’t change, it probably will.

>> Fixing duplicate API requests [frankel.ch]

Distributed systems are fun until you realize that you always need to be ready to handle duplicate requests.

Also worth reading:

- >> Tips for reading code [foojay.io]

- >> On Tech Debt: My Rust Library is now a CDO [lucumr.pocoo.org]

- >> Uncovering the Seams in Mainframes for Incremental Modernisation [martinfowler.com]

- >> PostgreSQL Heap-Only-Tuple or HOT Update Optimization [vladmihalcea.com]

- >> Uncovering Seams in a Mainframe’s external interfaces [martinfowler.com]

- >> Quality Outreach Heads-up – JDK 20-23: Support for Unicode CLDR Version 42 [inside.java]

- >> Skin in the Game [lucumr.pocoo.org]

- >> How the Company-Startup Thing Worked Out For Me, Year 12 [ozar.me]

3. Pick of the Week

Let’s dig into the Azure Spring Apps platform, built by Microsoft in collaboration with VMware:

>> Learn more and deploy your first Spring Boot app to Azure Spring Apps [microsoft.com]

Internet Address Resolution SPI in Java

1. Introduction

In this tutorial, we’ll discuss Java’s JEP 418, which establishes a new Service Provider Interface (SPI) for Internet host and address resolution.

2. Internet Address Resolution

Any device connected to a computer network is assigned a numeric value or an IP (Internet Protocol) Address. IP addresses help identify the device on a network uniquely, and they also help in routing packets to and from the devices.

They are typically of two types. IPv4 is the fourth generation of the IP standard and is a 32-bit address. Due to the rapid growth of the Internet, a newer v6 of the IP standard has also been published, which is much larger and contains hexadecimal characters.

Additionally, there is another relevant type of address. Network devices, such as an Ethernet port or a Network Interface Card (NIC), have the MAC (Media Access Control) address. These are globally distributed, and all network interface devices can be uniquely identified with MAC addresses.

Internet Address Resolution broadly refers to converting a higher-level network address, such as a domain (e.g., baeldung) or a URL (https://www.baeldung.com), to a lower-level network address, such as an IP address or a MAC address.

3. Internet Address Resolution in Java

Java provides multiple ways to resolve internet addresses today using the java.net.InetAddress API. The API internally uses the operating system’s native resolver for DNS lookup.

The OS native address resolution, which the InetAddress API currently uses, involves multiple steps. A system-level DNS cache is involved, where commonly queried DNS mappings are stored. If a cache miss occurs in the local DNS cache, the system resolver configuration provides information about a DNS server to perform subsequent lookups.

The OS then queries the configured DNS server obtained in the previous step for the information. This step might happen a couple of more times recursively.

If a match and lookup is successful, the DNS address is cached at all the servers and returned to the original client. However, if there is no match, an iterative lookup process is triggered to a Root Server, providing information about the Authoritative Nave Servers (ANS). These Authoritative Name Servers (ANS) store information about Top Level Domains (TLD) such as the .org, .com, etc.

These steps ultimately match the domain to an Internet address if it is valid or returns failure to the client.

4. Using Java’s InetAddress API

The InetAddress API provides numerous methods for performing DNS query and resolution. These APIs are available as part of the java.net package.

4.1. getAllByName() API

The getAllByName() API tries to map a hostname to a set of IP addresses:

InetAddress[] inetAddresses = InetAddress.getAllByName(host);

Assert.assertTrue(Arrays.stream(inetAddresses).map(InetAddress::getHostAddress).toArray(String[]::new) > 1);This is also termed forward lookup.

4.2. getByName() API

The getByName() API is similar to the previous forward lookup API except that it only maps the host to the first matching IP Address:

InetAddress inetAddress = InetAddress.getByName("www.google.com");

Assert.assertNotNull(inetAddress.getHostAddress()); // returns an IP Address

4.3. getByAddress() API

This is the most basic API to perform a reverse lookup, wherein it takes an IP address as its input and tries to return a host associated with it:

InetAddress inetAddress = InetAddress.getByAddress(ip);

Assert.assertNotNull(inetAddress.getHostName()); // returns a host (eg. google.com)4.4. getCanonicalHostName() API and getHostName() API

These APIs perform a similar reverse lookup and try to return the Fully Qualified Domain Name(FQDN) associated with it:

InetAddress inetAddress = InetAddress.getByAddress(ip);

Assert.assertNotNull(inetAddress.getCanonicalHostName()); // returns a FQDN

Assert.assertNotNull(inetAddress.getHostName());5. Service Provider Interface (SPI)

The Service Provider Interface (SPI) pattern is an important design pattern used in software development. The purpose of this pattern is to allow for pluggable components and implementations for a specific service.

It allows developers to extend the capabilities of a system without modifying any of the core expectations of the service and use any of the implementations without being tied down to a single one.

5.1. SPI Components in InetAddress