
1. Overview

Message conversion is the process of transforming messages between different formats and representations as they’re transmitted and received by applications.

AWS SQS allows text payloads, and Spring Cloud AWS SQS integration provides familiar Spring abstractions to manage serializing and deserializing text payloads to and from POJOs and records using JSON by default.

In this tutorial, we’ll use an event-driven scenario to go through three common use cases for message conversion: POJO/record serialization and deserialization, setting a custom ObjectMapper, and deserializing to a subclass/interface implementation.

To test our use cases, we’ll leverage the environment and test setup from the Spring Cloud AWS SQS V3 introductory article.

2. Dependencies

Let’s start by importing the Spring Cloud AWS Bill of Materials, which manages versions for our dependencies, ensuring version compatibility between them:

<dependencyManagement>

<dependencies>

<dependency>

<groupId>io.awspring.cloud</groupId>

<artifactId>spring-cloud-aws</artifactId>

<version>${spring-cloud-aws.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

Now, we can add the core and SQS starter dependencies:

<dependency>

<groupId>io.awspring.cloud</groupId>

<artifactId>spring-cloud-aws-starter</artifactId>

</dependency>

<dependency>

<groupId>io.awspring.cloud</groupId>

<artifactId>spring-cloud-aws-starter-sqs</artifactId>

</dependency>

For this tutorial, we’ll use the Spring Boot Web starter. Notably, we don’t specify a version, since we’re importing Spring Cloud AWS’ BOM:

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

Lastly, let’s add the test dependencies: Spring Boot’s test starter, LocalStack and Testcontainers with JUnit 5, the Awaitility library for verifying asynchronous message consumption, and AssertJ for fluent assertions:

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.testcontainers</groupId>

<artifactId>localstack</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.testcontainers</groupId>

<artifactId>junit-jupiter</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.awaitility</groupId>

<artifactId>awaitility</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.assertj</groupId>

<artifactId>assertj-core</artifactId>

<scope>test</scope>

</dependency>

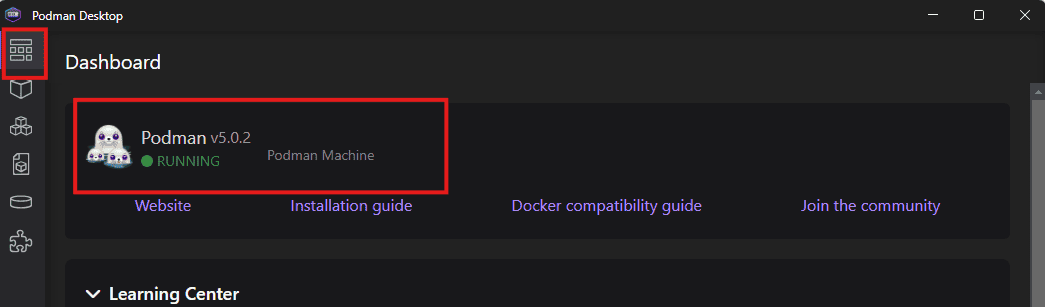

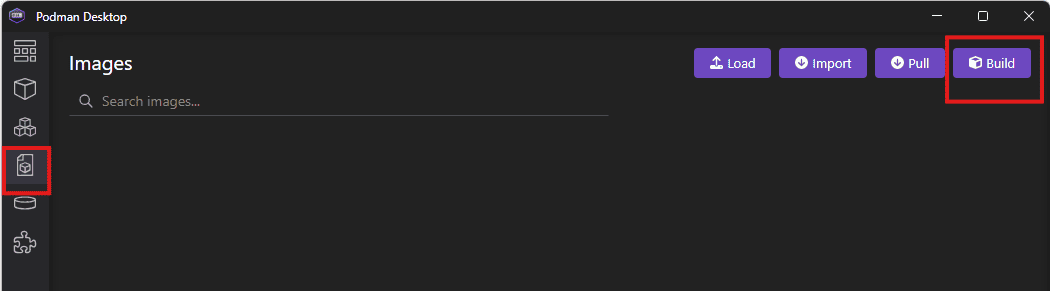

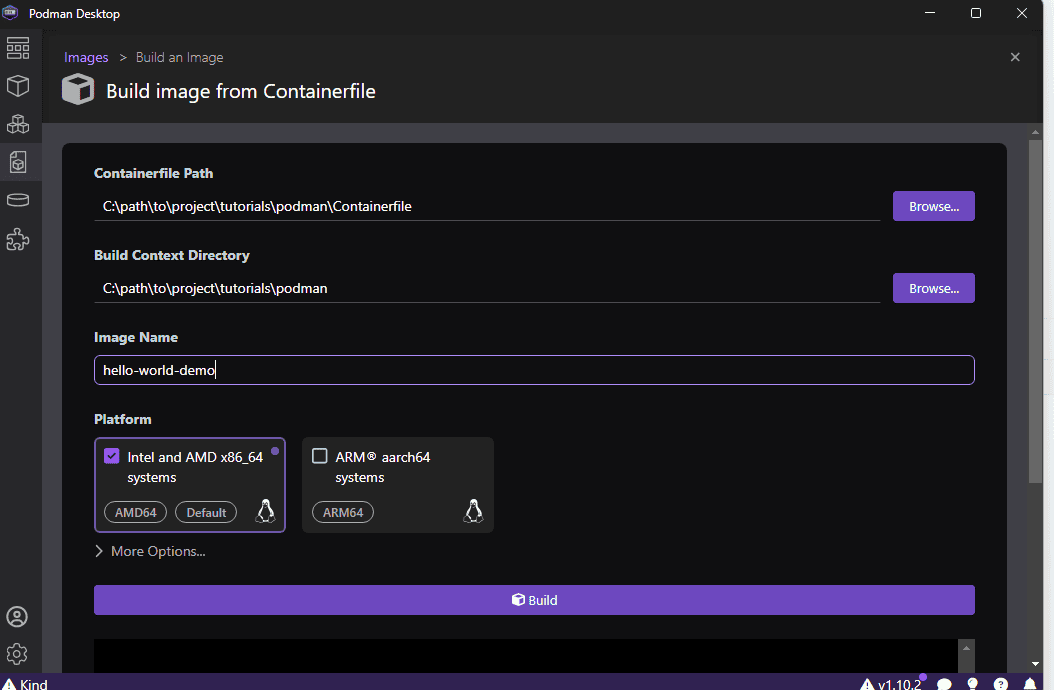

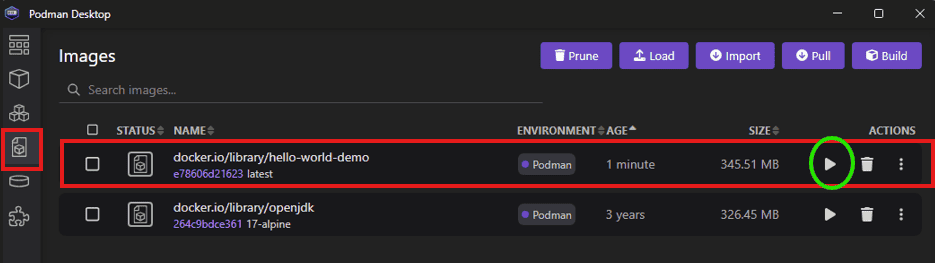

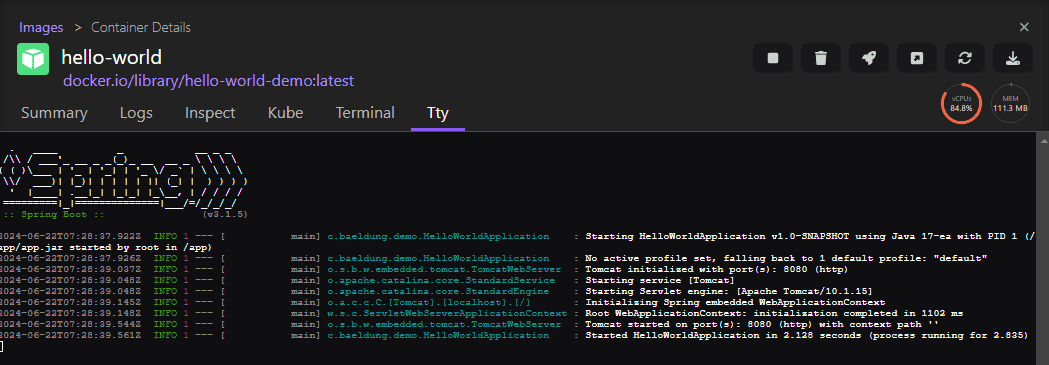

3. Setting up the Local Test Environment

Now that we’ve added the dependencies, we’ll set our test environment up by creating the BaseSqsLiveTest which should be extended by our test suites:

@Testcontainers

public class BaseSqsLiveTest {

private static final String LOCAL_STACK_VERSION = "localstack/localstack:3.4.0";

@Container

static LocalStackContainer localStack = new LocalStackContainer(DockerImageName.parse(LOCAL_STACK_VERSION));

@DynamicPropertySource

static void overrideProperties(DynamicPropertyRegistry registry) {

registry.add("spring.cloud.aws.region.static", () -> localStack.getRegion());

registry.add("spring.cloud.aws.credentials.access-key", () -> localStack.getAccessKey());

registry.add("spring.cloud.aws.credentials.secret-key", () -> localStack.getSecretKey());

registry.add("spring.cloud.aws.sqs.endpoint", () -> localStack.getEndpointOverride(SQS)

.toString());

}

}

4. Setting up the Queue Names

To leverage Spring Boot’s externalized configuration, we’ll add the queue names to our application.yml file:

events:

queues:

shipping:

simple-pojo-conversion-queue: shipping_pojo_conversion_queue

custom-object-mapper-queue: shipping_custom_object_mapper_queue

subclass-deserialization-queue: deserializes_subclass_queue

We now create a @ConfigurationProperties annotated class, which we’ll inject into our tests to retrieve the queue names:

@ConfigurationProperties(prefix = "events.queues.shipping")

public class ShipmentEventsQueuesProperties {

private String simplePojoConversionQueue;

private String customObjectMapperQueue;

private String subclassDeserializationQueue;

// ...getters and setters

}
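
Spring Boot’s relaxed binding is what lets a kebab-case key such as simple-pojo-conversion-queue populate the camelCase field simplePojoConversionQueue. The plain-Java sketch below only illustrates that naming rule. It isn’t Spring’s actual binder, and the RelaxedBindingSketch class and toCamelCase helper are hypothetical names introduced for illustration:

```java
import java.util.List;

// Illustrative only: mimics the kebab-case -> camelCase naming rule.
// This is NOT Spring Boot's binder implementation.
class RelaxedBindingSketch {

    // Converts a kebab-case YAML key into the matching camelCase field name
    static String toCamelCase(String kebabKey) {
        StringBuilder result = new StringBuilder();
        boolean upperNext = false;
        for (char c : kebabKey.toCharArray()) {
            if (c == '-') {
                // Drop the hyphen and capitalize the character that follows it
                upperNext = true;
            } else {
                result.append(upperNext ? Character.toUpperCase(c) : c);
                upperNext = false;
            }
        }
        return result.toString();
    }

    public static void main(String[] args) {
        for (String key : List.of("simple-pojo-conversion-queue", "custom-object-mapper-queue")) {
            System.out.println(key + " -> " + toCamelCase(key));
        }
    }
}
```

A key whose camelCase form doesn’t match any field of the properties class is ignored by default, which leaves the corresponding field null, so it’s worth keeping the YAML keys and field names aligned.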

Lastly, we add the @EnableConfigurationProperties annotation to a @Configuration class:

@EnableConfigurationProperties({ ShipmentEventsQueuesProperties.class })

@Configuration

public class ShipmentServiceConfiguration {

}

5. Setting up the Application

We’ll create a Shipment microservice that reacts to ShipmentRequestedEvents to illustrate our use cases.

First, let’s create the Shipment entity which will hold information about the shipments:

public class Shipment {

private UUID orderId;

private String customerAddress;

private LocalDate shipBy;

private ShipmentStatus status;

public Shipment(){}

public Shipment(UUID orderId, String customerAddress, LocalDate shipBy, ShipmentStatus status) {

this.orderId = orderId;

this.customerAddress = customerAddress;

this.shipBy = shipBy;

this.status = status;

}

// ...getters and setters

}

Next, let’s add a ShipmentStatus enum:

public enum ShipmentStatus {

REQUESTED,

PROCESSED,

CUSTOMS_CHECK,

READY_FOR_DISPATCH,

SENT,

DELIVERED

}

We’ll also need the ShipmentRequestedEvent:

public class ShipmentRequestedEvent {

private UUID orderId;

private String customerAddress;

private LocalDate shipBy;

public ShipmentRequestedEvent() {

}

public ShipmentRequestedEvent(UUID orderId, String customerAddress, LocalDate shipBy) {

this.orderId = orderId;

this.customerAddress = customerAddress;

this.shipBy = shipBy;

}

public Shipment toDomain() {

return new Shipment(orderId, customerAddress, shipBy, ShipmentStatus.REQUESTED);

}

// ...getters and setters

}

To process our shipments, we’ll create a simple ShipmentService class with a simulated in-memory repository, which our tests will query to verify the results:

@Service

public class ShipmentService {

private static final Logger logger = LoggerFactory.getLogger(ShipmentService.class);

private final Map<UUID, Shipment> shippingRepository = new ConcurrentHashMap<>();

public void processShippingRequest(Shipment shipment) {

logger.info("Processing shipping for order: {}", shipment.getOrderId());

shipment.setStatus(ShipmentStatus.PROCESSED);

shippingRepository.put(shipment.getOrderId(), shipment);

logger.info("Shipping request processed: {}", shipment.getOrderId());

}

public Shipment getShipment(UUID requestId) {

return shippingRepository.get(requestId);

}

}

6. Processing POJOs and Records With Default Configuration

Spring Cloud AWS SQS pre-configures a SqsMessagingMessageConverter that serializes and deserializes POJOs and records to and from JSON when sending and receiving messages using SqsTemplate, @SqsListener annotations, or manually instantiated SqsMessageListenerContainers.

Our first use case will be sending and receiving a simple POJO to illustrate this default configuration. We’ll use the @SqsListener annotation to receive messages and Spring Boot’s auto-configuration to configure deserialization when necessary.

First, we’ll create the test to send the message:

@SpringBootTest

public class ShipmentServiceApplicationLiveTest extends BaseSqsLiveTest {

@Autowired

private SqsTemplate sqsTemplate;

@Autowired

private ShipmentService shipmentService;

@Autowired

private ShipmentEventsQueuesProperties queuesProperties;

@Test

void givenPojoPayload_whenMessageReceived_thenDeserializesCorrectly() {

UUID orderId = UUID.randomUUID();

ShipmentRequestedEvent shipmentRequestedEvent = new ShipmentRequestedEvent(orderId, "123 Main St", LocalDate.parse("2024-05-12"));

sqsTemplate.send(queuesProperties.getSimplePojoConversionQueue(), shipmentRequestedEvent);

await().atMost(Duration.ofSeconds(10))

.untilAsserted(() -> {

Shipment shipment = shipmentService.getShipment(orderId);

assertThat(shipment).isNotNull();

assertThat(shipment).usingRecursiveComparison()

.ignoringFields("status")

.isEqualTo(shipmentRequestedEvent);

assertThat(shipment

.getStatus()).isEqualTo(ShipmentStatus.PROCESSED);

});

}

}

Here, we’re creating the event, sending it to the queue using the auto-configured SqsTemplate, and waiting for the status to become PROCESSED, which indicates the message has successfully been received and processed.

When the test is triggered, it fails after 10 seconds since we don’t have a listener for the queue yet.

Let’s address this by creating our first @SqsListener:

@Component

public class ShipmentRequestListener {

private final ShipmentService shippingService;

public ShipmentRequestListener(ShipmentService shippingService) {

this.shippingService = shippingService;

}

@SqsListener("${events.queues.shipping.simple-pojo-conversion-queue}")

public void receiveShipmentRequest(ShipmentRequestedEvent shipmentRequestedEvent) {

shippingService.processShippingRequest(shipmentRequestedEvent.toDomain());

}

}

And when we run the test again, it passes after a moment.

Notably, the listener has the @Component annotation and we’re referencing the queue name we set in the application.yml file.

This example shows how Spring Cloud AWS can deal with POJO conversion out-of-the-box, which works the same way for Java records.
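
As a minimal sketch of the record variant, the event could be declared as shown below. The ShipmentRequestedRecord name is hypothetical and not part of the article’s code; the assumption is that a listener method would declare it as its parameter type in place of the POJO:

```java
import java.time.LocalDate;
import java.util.UUID;

// Hypothetical record equivalent of the ShipmentRequestedEvent POJO
record ShipmentRequestedRecord(UUID orderId, String customerAddress, LocalDate shipBy) {
}

class RecordPayloadSketch {
    public static void main(String[] args) {
        var event = new ShipmentRequestedRecord(UUID.randomUUID(), "123 Main St", LocalDate.parse("2024-05-12"));
        // Record accessors are named after the components, without a "get" prefix
        System.out.println(event.customerAddress() + " ships by " + event.shipBy());
    }
}
```

The record needs no explicit constructor, getters, or setters, since the compiler generates the canonical constructor and the accessors.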

7. Configuring a Custom Object Mapper

A common use case for message conversion is setting up a custom ObjectMapper with application-specific configurations.

For our next scenario, we’ll configure an ObjectMapper with a LocalDateDeserializer to read dates in the “dd-MM-yyyy” format.

Again, we’ll first create our test scenario. In this case, we’ll send the raw JSON payload directly through the SqsAsyncClient that is auto-configured by the framework:

@Autowired

private SqsAsyncClient sqsAsyncClient;

@Test

void givenShipmentRequestWithCustomDateFormat_whenMessageReceived_thenDeserializesDateCorrectly() {

UUID orderId = UUID.randomUUID();

String shipBy = LocalDate.parse("2024-05-12")

.format(DateTimeFormatter.ofPattern("dd-MM-yyyy"));

var jsonMessage = """

{

"orderId": "%s",

"customerAddress": "123 Main St",

"shipBy": "%s"

}

""".formatted(orderId, shipBy);

sendRawMessage(queuesProperties.getCustomObjectMapperQueue(), jsonMessage);

await().atMost(Duration.ofSeconds(10))

.untilAsserted(() -> {

var shipment = shipmentService.getShipment(orderId);

assertThat(shipment).isNotNull();

assertThat(shipment.getShipBy()).isEqualTo(LocalDate.parse(shipBy, DateTimeFormatter.ofPattern("dd-MM-yyyy")));

});

}

private void sendRawMessage(String queueName, String jsonMessage) {

sqsAsyncClient.getQueueUrl(req -> req.queueName(queueName))

.thenCompose(resp -> sqsAsyncClient.sendMessage(req -> req.messageBody(jsonMessage)

.queueUrl(resp.queueUrl())))

.join();

}

Let’s also add the listener for this queue:

@SqsListener("${events.queues.shipping.custom-object-mapper-queue}")

public void receiveShipmentRequestWithCustomObjectMapper(ShipmentRequestedEvent shipmentRequestedEvent) {

shippingService.processShippingRequest(shipmentRequestedEvent.toDomain());

}

When we run the test now, it fails, and we see a message similar to this in the stack trace:

Cannot deserialize value of type `java.time.LocalDate` from String "12-05-2024"

That’s because we’re not using the standard “yyyy-MM-dd” date format.
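
The same mismatch can be reproduced with the JDK alone, independently of Jackson. In this small sketch, the DateFormatSketch class and parsesAsIsoDate helper are hypothetical names used only for illustration:

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;

class DateFormatSketch {

    // Returns true when the text can be parsed with the ISO "yyyy-MM-dd" default
    static boolean parsesAsIsoDate(String text) {
        try {
            LocalDate.parse(text);
            return true;
        } catch (DateTimeParseException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String shipBy = "12-05-2024";
        // The default parser expects the year first, so this input is rejected
        System.out.println(parsesAsIsoDate(shipBy)); // false
        // An explicit pattern matching the payload format parses it correctly
        LocalDate parsed = LocalDate.parse(shipBy, DateTimeFormatter.ofPattern("dd-MM-yyyy"));
        System.out.println(parsed); // 2024-05-12
    }
}
```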

To address that, we’ll need to configure an ObjectMapper capable of parsing this date format. We can simply declare it as a bean in a @Configuration annotated class, and auto-configuration applies it to both the auto-configured SqsTemplate and the @SqsListener methods:

@Bean

public ObjectMapper objectMapper() {

ObjectMapper mapper = new ObjectMapper();

JavaTimeModule module = new JavaTimeModule();

LocalDateDeserializer customDeserializer = new LocalDateDeserializer(DateTimeFormatter.ofPattern("dd-MM-yyyy", Locale.getDefault()));

module.addDeserializer(LocalDate.class, customDeserializer);

mapper.registerModule(module);

return mapper;

}

When we run the test once again, it passes as expected.

8. Configuring Inheritance and Interfaces Deserialization

Another common scenario is having a superclass or interface with several subclasses or implementations. In that case, we need to tell the framework which specific class each message should be deserialized to, based on some criterion such as a message header or part of the message body.

To illustrate this use case, let’s add some complexity to our scenario, and include two types of shipment: InternationalShipment and DomesticShipment, each a subclass of Shipment with specific properties.

8.1. Creating the Entities and Events

public class InternationalShipment extends Shipment {

private String destinationCountry;

private String customsInfo;

public InternationalShipment(UUID orderId, String customerAddress, LocalDate shipBy, ShipmentStatus status,

String destinationCountry, String customsInfo) {

super(orderId, customerAddress, shipBy, status);

this.destinationCountry = destinationCountry;

this.customsInfo = customsInfo;

}

// ...getters and setters

}

public class DomesticShipment extends Shipment {

private String deliveryRouteCode;

public DomesticShipment(UUID orderId, String customerAddress, LocalDate shipBy, ShipmentStatus status,

String deliveryRouteCode) {

super(orderId, customerAddress, shipBy, status);

this.deliveryRouteCode = deliveryRouteCode;

}

public String getDeliveryRouteCode() {

return deliveryRouteCode;

}

public void setDeliveryRouteCode(String deliveryRouteCode) {

this.deliveryRouteCode = deliveryRouteCode;

}

}

And let’s add their respective events:

public class DomesticShipmentRequestedEvent extends ShipmentRequestedEvent {

private String deliveryRouteCode;

public DomesticShipmentRequestedEvent(){}

public DomesticShipmentRequestedEvent(UUID orderId, String customerAddress, LocalDate shipBy, String deliveryRouteCode) {

super(orderId, customerAddress, shipBy);

this.deliveryRouteCode = deliveryRouteCode;

}

public DomesticShipment toDomain() {

return new DomesticShipment(getOrderId(), getCustomerAddress(), getShipBy(), ShipmentStatus.REQUESTED, deliveryRouteCode);

}

// ...getters and setters

}

public class InternationalShipmentRequestedEvent extends ShipmentRequestedEvent {

private String destinationCountry;

private String customsInfo;

public InternationalShipmentRequestedEvent(){}

public InternationalShipmentRequestedEvent(UUID orderId, String customerAddress, LocalDate shipBy, String destinationCountry,

String customsInfo) {

super(orderId, customerAddress, shipBy);

this.destinationCountry = destinationCountry;

this.customsInfo = customsInfo;

}

public InternationalShipment toDomain() {

return new InternationalShipment(getOrderId(), getCustomerAddress(), getShipBy(), ShipmentStatus.REQUESTED, destinationCountry,

customsInfo);

}

// ...getters and setters

}
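
Both event subclasses override toDomain() with a narrower return type, which Java allows as a covariant return. The sketch below shows the mechanism with simplified stand-in classes; all the names ending in Sketch are hypothetical and only mirror the shape of the article’s classes:

```java
// Simplified stand-ins for the article's classes, reduced to what the sketch needs
class ShipmentSketch {
}

class DomesticShipmentSketch extends ShipmentSketch {
}

class RequestedEventSketch {
    ShipmentSketch toDomain() {
        return new ShipmentSketch();
    }
}

class DomesticRequestedEventSketch extends RequestedEventSketch {
    // Covariant return type: narrower than the overridden method's return type
    @Override
    DomesticShipmentSketch toDomain() {
        return new DomesticShipmentSketch();
    }
}

class CovariantReturnSketch {
    public static void main(String[] args) {
        RequestedEventSketch event = new DomesticRequestedEventSketch();
        // Dynamic dispatch picks the subclass override even through the supertype reference
        System.out.println(event.toDomain().getClass().getSimpleName()); // DomesticShipmentSketch
    }
}
```

Because of this, code holding a reference of the specific event type gets the specific shipment type back without a cast.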

8.2. Adding Service and Listener Logic

We’ll add two methods to our Service, each to process a different type of shipment:

@Service

public class ShipmentService {

// ...previous code stays the same

public void processDomesticShipping(DomesticShipment shipment) {

logger.info("Processing domestic shipping for order: {}", shipment.getOrderId());

shipment.setStatus(ShipmentStatus.READY_FOR_DISPATCH);

shippingRepository.put(shipment.getOrderId(), shipment);

logger.info("Domestic shipping processed: {}", shipment.getOrderId());

}

public void processInternationalShipping(InternationalShipment shipment) {

logger.info("Processing international shipping for order: {}", shipment.getOrderId());

shipment.setStatus(ShipmentStatus.CUSTOMS_CHECK);

shippingRepository.put(shipment.getOrderId(), shipment);

logger.info("International shipping processed: {}", shipment.getOrderId());

}

}

And now let’s add the listener that processes the messages. It’s worth noting that we’re using the superclass type in the listener method, as this method receives messages from both subtypes:

@SqsListener(queueNames = "${events.queues.shipping.subclass-deserialization-queue}")

public void receiveShippingRequestWithType(ShipmentRequestedEvent shipmentRequestedEvent) {

if (shipmentRequestedEvent instanceof InternationalShipmentRequestedEvent event) {

shippingService.processInternationalShipping(event.toDomain());

} else if (shipmentRequestedEvent instanceof DomesticShipmentRequestedEvent event) {

shippingService.processDomesticShipping(event.toDomain());

} else {

throw new RuntimeException("Event type not supported " + shipmentRequestedEvent.getClass()

.getSimpleName());

}

}

With the scenario set up, we can create the test. First, let’s create an event of each type:

@Test

void givenPayloadWithSubclasses_whenMessageReceived_thenDeserializesCorrectType() {

var domesticOrderId = UUID.randomUUID();

var domesticEvent = new DomesticShipmentRequestedEvent(domesticOrderId, "123 Main St", LocalDate.parse("2024-05-12"), "XPTO1234");

var internationalOrderId = UUID.randomUUID();

InternationalShipmentRequestedEvent internationalEvent = new InternationalShipmentRequestedEvent(internationalOrderId, "123 Main St", LocalDate.parse("2024-05-24"), "Canada", "HS Code: 8471.30, Origin: China, Value: $500");

}

Continuing on the same test method, we’ll now send the events. By default, SqsTemplate sends a header with the specific type information for deserialization. By leveraging this, we can simply send the messages using the auto-configured SqsTemplate and it deserializes the messages correctly:

sqsTemplate.send(queuesProperties.getSubclassDeserializationQueue(), internationalEvent);

sqsTemplate.send(queuesProperties.getSubclassDeserializationQueue(), domesticEvent);

Lastly, we assert that each shipment ends up with the status that corresponds to its type:

await().atMost(Duration.ofSeconds(10))

.untilAsserted(() -> {

var domesticShipment = (DomesticShipment) shipmentService.getShipment(domesticOrderId);

assertThat(domesticShipment).isNotNull();

assertThat(domesticShipment).usingRecursiveComparison()

.ignoringFields("status")

.isEqualTo(domesticEvent);

assertThat(domesticShipment.getStatus()).isEqualTo(ShipmentStatus.READY_FOR_DISPATCH);

var internationalShipment = (InternationalShipment) shipmentService.getShipment(internationalOrderId);

assertThat(internationalShipment).isNotNull();

assertThat(internationalShipment).usingRecursiveComparison()

.ignoringFields("status")

.isEqualTo(internationalEvent);

assertThat(internationalShipment.getStatus()).isEqualTo(ShipmentStatus.CUSTOMS_CHECK);

});

When we run the test now, it passes, which shows that each subclass was properly deserialized with the correct types and information.

It’s common to receive messages from services that might not use SqsTemplate to send messages, or perhaps the POJO or record representing the event is in a different package.
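
To see why a different package matters, here is a simplified illustration that assumes the type header carries the payload’s fully qualified class name. It is not the framework’s code; the TypeHeaderSketch class, the resolve helper, and the com.example.producer package are hypothetical:

```java
import java.util.Optional;

class TypeHeaderSketch {

    // Tries to load the class named in a type header; empty when it isn't on the classpath
    static Optional<Class<?>> resolve(String typeHeaderValue) {
        try {
            return Optional.of(Class.forName(typeHeaderValue));
        } catch (ClassNotFoundException e) {
            return Optional.empty();
        }
    }

    public static void main(String[] args) {
        // A class known to both producer and consumer resolves fine
        System.out.println(resolve("java.time.LocalDate").isPresent()); // true
        // Hypothetical producer-side class name that the consumer doesn't have
        System.out.println(resolve("com.example.producer.ShipmentRequestedEvent").isPresent()); // false
    }
}
```

A class name that only exists on the producer’s side gives the consumer nothing to resolve, so the target type has to be decided some other way.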

To simulate this scenario, let’s create a custom SqsTemplate in our test method, and configure it to send the messages without type information in the headers. For this scenario, we also need to inject an ObjectMapper that’s capable of serializing LocalDate instances, such as the one we’ve configured earlier or the one auto-configured by Spring Boot:

@Autowired

private ObjectMapper objectMapper;

var customTemplate = SqsTemplate.builder()

.sqsAsyncClient(sqsAsyncClient)

.configureDefaultConverter(converter -> {

converter.doNotSendPayloadTypeHeader();

converter.setObjectMapper(objectMapper);

})

.build();

customTemplate.send(to -> to.queue(queuesProperties.getSubclassDeserializationQueue())

.payload(internationalEvent));

customTemplate.send(to -> to.queue(queuesProperties.getSubclassDeserializationQueue())

.payload(domesticEvent));

Now, our test fails with messages similar to these in the stack trace, as the framework has no way of knowing which specific class to deserialize each message to:

Could not read JSON: Unrecognized field "destinationCountry"

Could not read JSON: Unrecognized field "deliveryRouteCode"

To address this use case, the SqsMessagingMessageConverter class has the setPayloadTypeMapper method, which can be used to let the framework know the target class based on any property of the message. For this test, we’ll use a custom header as criteria.

First, let’s add our header configuration to our application.yml:

headers:

types:

shipping:

header-name: SHIPPING_TYPE

international: INTERNATIONAL

domestic: DOMESTIC

We’ll also create a properties class to hold those values:

@ConfigurationProperties(prefix = "headers.types.shipping")

public class ShippingHeaderTypesProperties {

private String headerName;

private String international;

private String domestic;

// ...getters and setters

}

Next, let’s enable the properties class in our Configuration class:

@EnableConfigurationProperties({ ShipmentEventsQueuesProperties.class, ShippingHeaderTypesProperties.class })

@Configuration

public class ShipmentServiceConfiguration {

// ...rest of code remains the same

}

We’ll now configure a custom SqsMessagingMessageConverter to use these headers and set it to the defaultSqsListenerContainerFactory bean:

@Bean

public SqsMessageListenerContainerFactory<Object> defaultSqsListenerContainerFactory(ObjectMapper objectMapper, SqsAsyncClient sqsAsyncClient, ShippingHeaderTypesProperties typesProperties) {

SqsMessagingMessageConverter converter = new SqsMessagingMessageConverter();

converter.setPayloadTypeMapper(message -> {

if (!message.getHeaders()

.containsKey(typesProperties.getHeaderName())) {

return Object.class;

}

String eventTypeHeader = MessageHeaderUtils.getHeaderAsString(message, typesProperties.getHeaderName());

if (eventTypeHeader.equals(typesProperties.getDomestic())) {

return DomesticShipmentRequestedEvent.class;

} else if (eventTypeHeader.equals(typesProperties.getInternational())) {

return InternationalShipmentRequestedEvent.class;

}

throw new RuntimeException("Invalid shipping type");

});

converter.setObjectMapper(objectMapper);

return SqsMessageListenerContainerFactory.builder()

.sqsAsyncClient(sqsAsyncClient)

.configure(configure -> configure.messageConverter(converter))

.build();

}
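
When more shipping types are expected, the if/else chain in the mapper could be replaced by a lookup table. The following plain-Java sketch shows that alternative under stated assumptions: the HeaderTypeLookupSketch class, the resolve helper, and the two stand-in event classes are hypothetical, and the header is modeled as a simple map instead of a Spring Message:

```java
import java.util.Map;

// Hypothetical stand-ins for the article's event classes
class DomesticEventSketch {
}

class InternationalEventSketch {
}

class HeaderTypeLookupSketch {

    // Header value -> target class; the keys mirror the values configured in application.yml
    static final Map<String, Class<?>> TYPES_BY_HEADER = Map.of(
        "DOMESTIC", DomesticEventSketch.class,
        "INTERNATIONAL", InternationalEventSketch.class);

    // Resolves the target class, falling back to Object when the header is absent
    static Class<?> resolve(Map<String, Object> headers, String headerName) {
        Object value = headers.get(headerName);
        if (value == null) {
            return Object.class;
        }
        Class<?> target = TYPES_BY_HEADER.get(value.toString());
        if (target == null) {
            throw new IllegalArgumentException("Invalid shipping type: " + value);
        }
        return target;
    }

    public static void main(String[] args) {
        Map<String, Object> headers = Map.of("SHIPPING_TYPE", "DOMESTIC");
        System.out.println(resolve(headers, "SHIPPING_TYPE").getSimpleName()); // DomesticEventSketch
    }
}
```

With this shape, supporting a new shipping type means adding one map entry, and the same function body could be used inside the lambda passed to setPayloadTypeMapper.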

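After that, we add the headers to our custom template in the test method, reading the header name and values from a ShippingHeaderTypesProperties instance injected into the test as headerTypesProperties: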

customTemplate.send(to -> to.queue(queuesProperties.getSubclassDeserializationQueue())

.payload(internationalEvent)

.header(headerTypesProperties.getHeaderName(), headerTypesProperties.getInternational()));

customTemplate.send(to -> to.queue(queuesProperties.getSubclassDeserializationQueue())

.payload(domesticEvent)

.header(headerTypesProperties.getHeaderName(), headerTypesProperties.getDomestic()));

When we run the test again, it passes, asserting that the proper subclass type was deserialized for each event.

9. Conclusion

In this article, we went through three common use cases for Message Conversion: POJO/record serialization and deserialization with out-of-the-box settings, using a custom ObjectMapper to handle different date formats and other specific configurations, and two different ways of deserializing a message to a subclass/interface implementation.

We tested each scenario by setting up a local test environment and creating live tests to assert our logic.

As usual, the complete code used in this article is available over on GitHub.
