1. Overview

When using Spring Data JPA to implement the persistence layer, the repository typically returns one or more instances of the root class. However, more often than not, we don’t need all the properties of the returned objects.

In such cases, it may be desirable to retrieve data as objects of customized types. These types reflect partial views of the root class, containing only properties we care about. This is where projections come in handy.

2. Initial Setup

The first step is to set up the project and populate the database.

2.1. Maven Dependencies

For dependencies, please check out section 2 of this tutorial.

2.2. Entity Classes

Let’s define two entity classes:

@Entity

public class Address {

@Id

private Long id;

@OneToOne

private Person person;

private String state;

private String city;

private String street;

private String zipCode;

// getters and setters

}

And:

@Entity

public class Person {

@Id

private Long id;

private String firstName;

private String lastName;

@OneToOne(mappedBy = "person")

private Address address;

// getters and setters

}

The relationship between Person and Address entities is bidirectional one-to-one: Address is the owning side, and Person is the inverse side.

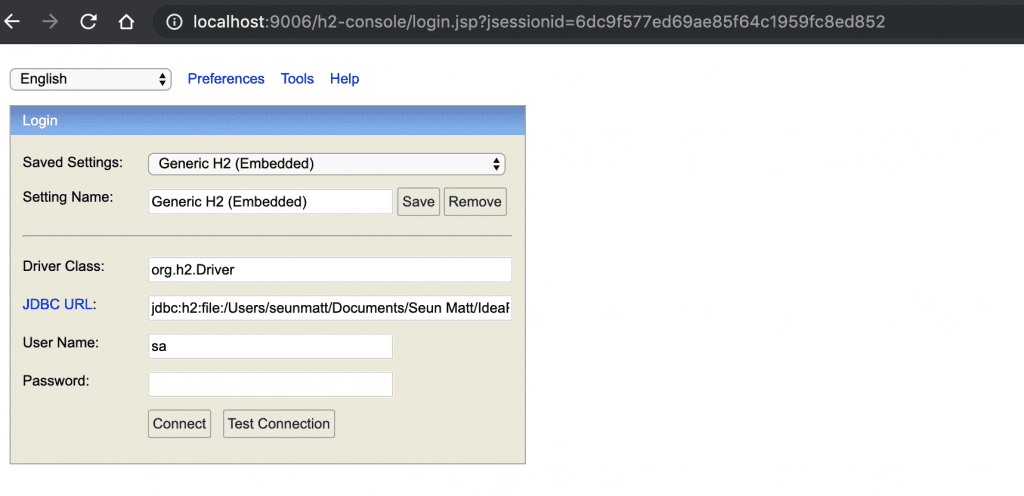

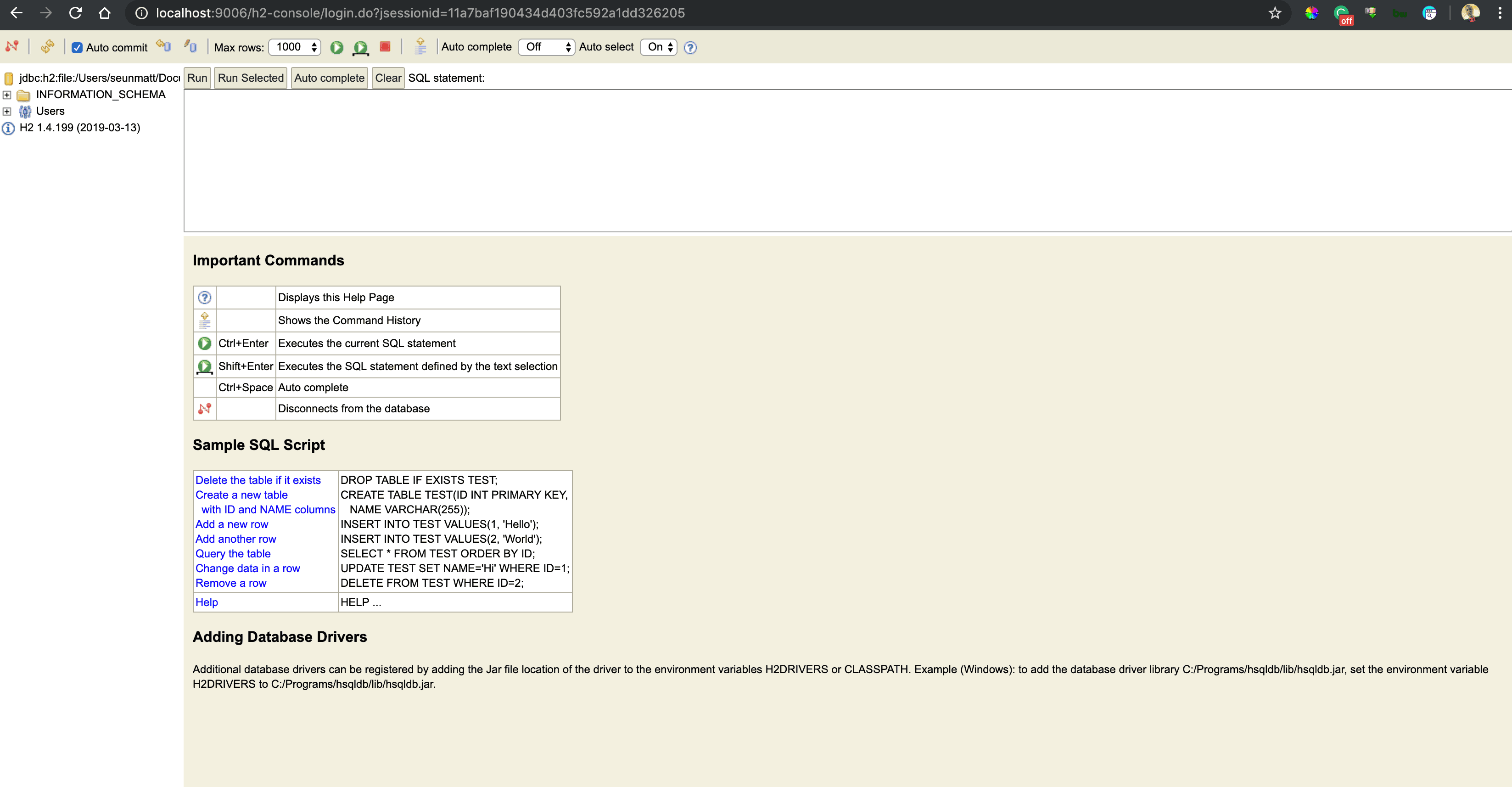

Note that this tutorial uses an embedded database, H2.

When an embedded database is configured, Spring Boot automatically generates underlying tables for the entities we defined.

2.3. SQL Scripts

We use the projection-insert-data.sql script to populate both the backing tables:

INSERT INTO person(id,first_name,last_name) VALUES (1,'John','Doe');

INSERT INTO address(id,person_id,state,city,street,zip_code) VALUES (1,1,'CA', 'Los Angeles', 'Standford Ave', '90001');

To clean up the database after each test run, we can use another script, named projection-clean-up-data.sql:

DELETE FROM address;

DELETE FROM person;

2.4. Test Class

To confirm that projections produce correct data, we need a test class:

@DataJpaTest

@RunWith(SpringRunner.class)

@Sql(scripts = "/projection-insert-data.sql")

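// AFTER_TEST_METHOD is statically imported from org.springframework.test.context.jdbc.Sql.ExecutionPhase

@Sql(scripts = "/projection-clean-up-data.sql", executionPhase = AFTER_TEST_METHOD)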

public class JpaProjectionIntegrationTest {

// injected fields and test methods

}

With the given annotations, Spring Boot creates the database, injects dependencies, and populates and cleans up tables before and after each test method’s execution.

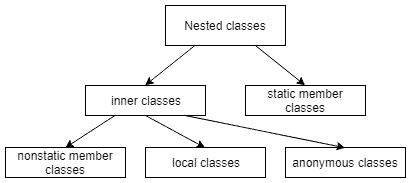

3. Interface-Based Projections

When projecting an entity, it’s natural to rely on an interface, as we won’t need to provide an implementation.

3.1. Closed Projections

Looking back at the Address class, we can see it has many properties, yet not all of them are helpful. For example, sometimes a zip code is enough to indicate an address.

Let’s declare a projection interface for the Address class:

public interface AddressView {

String getZipCode();

}

Then use it in a repository interface:

public interface AddressRepository extends Repository<Address, Long> {

List<AddressView> getAddressByState(String state);

}

It’s easy to see that defining a repository method with a projection interface is pretty much the same as with an entity class.

The only difference is that the projection interface, rather than the entity class, is used as the element type in the returned collection.

Let’s do a quick test of the Address projection:

@Autowired

private AddressRepository addressRepository;

@Test

public void whenUsingClosedProjections_thenViewWithRequiredPropertiesIsReturned() {

AddressView addressView = addressRepository.getAddressByState("CA").get(0);

assertThat(addressView.getZipCode()).isEqualTo("90001");

// ...

}

Behind the scenes, Spring creates a proxy instance of the projection interface for each entity object, and all calls to the proxy are forwarded to that object.
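To make the forwarding idea concrete, here is a minimal sketch built on the JDK's java.lang.reflect.Proxy. It is not Spring Data's actual implementation; the ProjectionProxySketch class, the project helper, and the annotation-free Address stand-in are all assumptions made for illustration.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

public class ProjectionProxySketch {

    // Simplified stand-in for the Address entity (no JPA annotations).
    public static class Address {
        private final String state;
        private final String zipCode;

        public Address(String state, String zipCode) {
            this.state = state;
            this.zipCode = zipCode;
        }

        public String getState() { return state; }
        public String getZipCode() { return zipCode; }
    }

    // The closed projection from the text: it exposes the zip code only.
    public interface AddressView {
        String getZipCode();
    }

    // Hypothetical helper: wraps the target in a proxy that implements the
    // projection interface and forwards each call to the same-named method
    // on the target object.
    public static <T> T project(Object target, Class<T> projectionType) {
        InvocationHandler handler = (self, method, args) -> {
            Method targetMethod = target.getClass()
                    .getMethod(method.getName(), method.getParameterTypes());
            return targetMethod.invoke(target, args);
        };
        Object instance = Proxy.newProxyInstance(
                projectionType.getClassLoader(),
                new Class<?>[] { projectionType },
                handler);
        return projectionType.cast(instance);
    }

    public static void main(String[] args) {
        Address address = new Address("CA", "90001");
        AddressView view = project(address, AddressView.class);
        System.out.println(view.getZipCode()); // prints 90001
    }
}
```

The caller only ever sees AddressView, so getState() is simply not reachable through the view even though the backing object still holds it.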

We can use projections recursively. For instance, here’s a projection interface for the Person class:

public interface PersonView {

String getFirstName();

String getLastName();

}

Now, let’s add a method with the return type PersonView – a nested projection – in the Address projection:

public interface AddressView {

// ...

PersonView getPerson();

}

Note that the method returning the nested projection must have the same name as the method in the root class that returns the related entity.
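One way to picture why the names have to line up is a proxy that looks up each accessor on the backing object by name and wraps any related entity in its own projection. The sketch below uses JDK dynamic proxies and plain classes in place of the entities; NestedProjectionSketch and its project helper are hypothetical, and Spring Data's real mechanism may differ.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

public class NestedProjectionSketch {

    // Simplified stand-ins for the entities (no JPA annotations).
    public static class Person {
        private final String firstName;
        private final String lastName;

        public Person(String firstName, String lastName) {
            this.firstName = firstName;
            this.lastName = lastName;
        }

        public String getFirstName() { return firstName; }
        public String getLastName() { return lastName; }
    }

    public static class Address {
        private final Person person;
        private final String zipCode;

        public Address(Person person, String zipCode) {
            this.person = person;
            this.zipCode = zipCode;
        }

        public Person getPerson() { return person; }
        public String getZipCode() { return zipCode; }
    }

    public interface PersonView {
        String getFirstName();
        String getLastName();
    }

    public interface AddressView {
        String getZipCode();
        PersonView getPerson(); // same name as Address.getPerson()
    }

    // Hypothetical helper: forwards by method name and wraps a returned
    // entity in a proxy of the declared nested projection type.
    public static <T> T project(Object target, Class<T> projectionType) {
        InvocationHandler handler = (self, method, args) -> {
            // The lookup is by name; a differently named accessor would
            // fail here with NoSuchMethodException.
            Method targetMethod = target.getClass()
                    .getMethod(method.getName(), method.getParameterTypes());
            Object value = targetMethod.invoke(target, args);
            Class<?> returnType = method.getReturnType();
            if (value != null && returnType.isInterface() && !returnType.isInstance(value)) {
                return project(value, returnType);
            }
            return value;
        };
        return projectionType.cast(Proxy.newProxyInstance(
                projectionType.getClassLoader(),
                new Class<?>[] { projectionType },
                handler));
    }

    public static void main(String[] args) {
        Address address = new Address(new Person("John", "Doe"), "90001");
        AddressView view = project(address, AddressView.class);
        System.out.println(view.getPerson().getFirstName()); // prints John
    }
}
```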

Let’s verify nested projections by adding a few statements to the test method we’ve just written:

// ...

PersonView personView = addressView.getPerson();

assertThat(personView.getFirstName()).isEqualTo("John");

assertThat(personView.getLastName()).isEqualTo("Doe");

Note that recursive projections only work if we traverse from the owning side to the inverse side. Were we to do it the other way around, the nested projection would be set to null.

3.2. Open Projections

Up to this point, we’ve gone through closed projections: projection interfaces whose accessor methods exactly match the names of entity properties.

There’s another kind of interface-based projection: the open projection. Open projections let us define interface methods whose names don't match any entity property and whose return values are computed at runtime.

Let’s go back to the Person projection interface and add a new method:

public interface PersonView {

// ...

@Value("#{target.firstName + ' ' + target.lastName}")

String getFullName();

}

The argument to the @Value annotation is a SpEL expression, in which the target designator indicates the backing entity object.

Now, we’ll define another repository interface:

public interface PersonRepository extends Repository<Person, Long> {

PersonView findByLastName(String lastName);

}

To keep it simple, we return a single projection object instead of a collection.

This test confirms open projections work as expected:

@Autowired

private PersonRepository personRepository;

@Test

public void whenUsingOpenProjections_thenViewWithRequiredPropertiesIsReturned() {

PersonView personView = personRepository.findByLastName("Doe");

assertThat(personView.getFullName()).isEqualTo("John Doe");

}

Open projections have a drawback: Spring Data cannot optimize query execution as it doesn’t know in advance which properties will be used. Thus, we should only use open projections when closed projections aren’t capable of handling our requirements.
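When the computed value depends only on other accessors of the same projection, a default method on the interface is one alternative worth considering; the Spring Data reference documentation describes default methods in projection interfaces. The sketch below is plain Java, and the viewOf factory is a hypothetical hand-written stand-in for the proxy Spring Data would supply.

```java
public class DefaultMethodProjectionSketch {

    public interface PersonView {
        String getFirstName();
        String getLastName();

        // Derived purely from the other accessors; no SpEL expression involved.
        default String getFullName() {
            return getFirstName() + " " + getLastName();
        }
    }

    // Hypothetical stand-in for the proxy instance that backs the projection.
    public static PersonView viewOf(String firstName, String lastName) {
        return new PersonView() {
            @Override
            public String getFirstName() { return firstName; }

            @Override
            public String getLastName() { return lastName; }
        };
    }

    public static void main(String[] args) {
        System.out.println(viewOf("John", "Doe").getFullName()); // prints John Doe
    }
}
```

This only works when the logic can be expressed through the projection's own accessors; anything that needs the backing entity itself still calls for the @Value approach shown above.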

4. Class-Based Projections

Instead of using proxies Spring Data creates for us from projection interfaces, we can define our own projection classes.

For example, here’s a projection class for the Person entity:

public class PersonDto {

private String firstName;

private String lastName;

public PersonDto(String firstName, String lastName) {

this.firstName = firstName;

this.lastName = lastName;

}

// getters, equals and hashCode

}

For a projection class to work in tandem with a repository interface, the parameter names of its constructor must match properties of the root entity class.

We must also define equals and hashCode implementations – they allow Spring Data to process projection objects in a collection.
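For reference, a fully spelled-out version of the class could look like the sketch below. The final fields and the main method are assumptions added for illustration; the constructor and the property names follow the snippet above.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

public class PersonDto {

    private final String firstName;
    private final String lastName;

    // Parameter names match the firstName and lastName properties of Person.
    public PersonDto(String firstName, String lastName) {
        this.firstName = firstName;
        this.lastName = lastName;
    }

    public String getFirstName() { return firstName; }

    public String getLastName() { return lastName; }

    // Two DTOs are equal when both name properties are equal.
    @Override
    public boolean equals(Object other) {
        if (this == other) {
            return true;
        }
        if (!(other instanceof PersonDto)) {
            return false;
        }
        PersonDto that = (PersonDto) other;
        return Objects.equals(firstName, that.firstName)
                && Objects.equals(lastName, that.lastName);
    }

    @Override
    public int hashCode() {
        return Objects.hash(firstName, lastName);
    }

    public static void main(String[] args) {
        Set<PersonDto> people = new HashSet<>();
        people.add(new PersonDto("John", "Doe"));
        people.add(new PersonDto("John", "Doe"));
        System.out.println(people.size()); // prints 1
    }
}
```

With value-based equals and hashCode in place, two DTOs built from the same row compare as equal, which is what hash-based collections rely on.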

Now, let’s add a method to the Person repository:

public interface PersonRepository extends Repository<Person, Long> {

// ...

PersonDto findByFirstName(String firstName);

}

This test verifies our class-based projection:

@Test

public void whenUsingClassBasedProjections_thenDtoWithRequiredPropertiesIsReturned() {

PersonDto personDto = personRepository.findByFirstName("John");

assertThat(personDto.getFirstName()).isEqualTo("John");

assertThat(personDto.getLastName()).isEqualTo("Doe");

}

Note that with the class-based approach, we cannot use nested projections.

5. Dynamic Projections

An entity class may have many projections. In some cases, we may use a certain type, but in other cases, we may need another type. Sometimes, we also need to use the entity class itself.

Defining separate repository interfaces or methods just to support multiple return types is cumbersome. To deal with this problem, Spring Data provides a better solution: dynamic projections.

We can apply dynamic projections just by declaring a repository method with a Class parameter:

public interface PersonRepository extends Repository<Person, Long> {

// ...

<T> T findByLastName(String lastName, Class<T> type);

}

By passing a projection type or the entity class to such a method, we can retrieve an object of the desired type:

@Test

public void whenUsingDynamicProjections_thenObjectWithRequiredPropertiesIsReturned() {

Person person = personRepository.findByLastName("Doe", Person.class);

PersonView personView = personRepository.findByLastName("Doe", PersonView.class);

PersonDto personDto = personRepository.findByLastName("Doe", PersonDto.class);

assertThat(person.getFirstName()).isEqualTo("John");

assertThat(personView.getFirstName()).isEqualTo("John");

assertThat(personDto.getFirstName()).isEqualTo("John");

}
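To see how a single generic method can serve all three return types, consider this plain-Java sketch. It is a hypothetical in-memory stand-in, not what Spring Data generates: DynamicProjectionSketch, the hard-coded JOHN record, and the explicit type checks are assumptions chosen to show the role of the Class<T> argument.

```java
public class DynamicProjectionSketch {

    // Simplified stand-in for the Person entity (no JPA annotations).
    public static class Person {
        private final String firstName;
        private final String lastName;

        public Person(String firstName, String lastName) {
            this.firstName = firstName;
            this.lastName = lastName;
        }

        public String getFirstName() { return firstName; }
        public String getLastName() { return lastName; }
    }

    public interface PersonView {
        String getFirstName();
        String getLastName();
    }

    public static class PersonDto {
        private final String firstName;
        private final String lastName;

        public PersonDto(String firstName, String lastName) {
            this.firstName = firstName;
            this.lastName = lastName;
        }

        public String getFirstName() { return firstName; }
        public String getLastName() { return lastName; }
    }

    // Hypothetical single-row "table".
    private static final Person JOHN = new Person("John", "Doe");

    // Mirrors the shape of <T> T findByLastName(String, Class<T>): the type
    // token decides which representation of the same row is returned.
    public static <T> T findByLastName(String lastName, Class<T> type) {
        if (!JOHN.getLastName().equals(lastName)) {
            return null;
        }
        if (type == Person.class) {
            return type.cast(JOHN);
        }
        if (type == PersonView.class) {
            PersonView view = new PersonView() {
                @Override
                public String getFirstName() { return JOHN.getFirstName(); }

                @Override
                public String getLastName() { return JOHN.getLastName(); }
            };
            return type.cast(view);
        }
        if (type == PersonDto.class) {
            return type.cast(new PersonDto(JOHN.getFirstName(), JOHN.getLastName()));
        }
        throw new IllegalArgumentException("Unsupported projection type: " + type.getName());
    }

    public static void main(String[] args) {
        PersonDto dto = findByLastName("Doe", PersonDto.class);
        System.out.println(dto.getFirstName()); // prints John
    }
}
```

Because the return type is inferred from the Class<T> argument, callers need no casts, which is the same convenience the repository method above offers.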

6. Conclusion

In this article, we went over various types of Spring Data JPA projections.

The source code for this tutorial is available over on GitHub. This is a Maven project and should be able to run as-is.