1. Overview

In many real-world Java applications, we need to filter a List of objects by checking whether any of their fields match a given String. In other words, we want to search for a String and keep the objects that contain it in any of their properties.

In this tutorial, we’ll walk through different approaches to filtering a List by any matching fields in Java.

2. Introduction to the Problem

As usual, let’s understand the problem through examples.

Let’s say we have a Book class with fields like title, tags, intro, and pages:

class Book {
    private String title;
    private List<String> tags;
    private String intro;
    private int pages;

    public Book(String title, List<String> tags, String intro, int pages) {
        this.title = title;
        this.tags = tags;
        this.intro = intro;
        this.pages = pages;
    }

    // ... getter and setter methods are omitted
}

Next, let’s create four Book instances using the defined constructor and put them into a List<Book>:

static final Book JAVA = new Book(
  "The Art of Java Programming",
  List.of("Tech", "Java"),
  "Java is a powerful programming language.",
  400);

static final Book KOTLIN = new Book(
  "Let's Dive Into Kotlin Codes",
  List.of("Tech", "Java", "Kotlin"),
  "It is big fun learning how to write Kotlin codes.",
  300);

static final Book PYTHON = new Book(
  "Python Tricks You Should Know",
  List.of("Tech", "Python"),
  "The path of being a Python expert.",
  200);

static final Book GUITAR = new Book(
  "How to Play a Guitar",
  List.of("Art", "Music"),
  "Let's learn how to play a guitar.",
  100);

static final List<Book> BOOKS = List.of(JAVA, KOTLIN, PYTHON, GUITAR);

Now, we want to perform a filter operation on BOOKS to find all Book objects that contain a keyword String in the title, tags, or intro. In other words, we would like to execute a full-text search on BOOKS.

For example, if we want to search for “Java”, the JAVA and KOTLIN instances should be in the result. This is because JAVA.title contains “Java” and KOTLIN.tags contains “Java”.

Similarly, if we search for “Art”, we expect JAVA and GUITAR to be found since JAVA.title and GUITAR.tags contain the word “Art”. When “Let’s” is the keyword, KOTLIN and GUITAR should be in the result as KOTLIN.title and GUITAR.intro contain the keyword.

Next, let’s explore how to solve this problem, using these three keyword examples to check our solutions.

For simplicity, we assume that none of Book’s properties are null, and we’ll use unit test assertions to verify that our solutions work as expected.

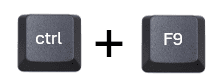

3. Using the Stream.filter() Method

The Stream API provides the convenient filter() method, which lets us easily filter the objects in a Stream using a lambda expression.

Next, let’s solve the full-text search problem using this approach:

List<Book> fullTextSearchByLogicalOr(List<Book> books, String keyword) {
    return books.stream()
      .filter(book -> book.getTitle().contains(keyword)
        || book.getIntro().contains(keyword)
        || book.getTags().stream().anyMatch(tag -> tag.contains(keyword)))
      .toList();
}

As we can see, the above implementation is pretty straightforward. In the lambda expression that we pass to filter(), we check whether any property contains the keyword.

It’s worth mentioning that as Book.tags is a List<String>, we leverage Stream.anyMatch() to check if any tag in the List contains the keyword.
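To illustrate why anyMatch() is used here rather than List.contains(), the following minimal sketch compares the two on a list of tags. The class and method names (TagMatchSketch, hasExactTag, hasTagContaining) are hypothetical and only serve this illustration:

```java
import java.util.List;

class TagMatchSketch {

    // Exact match: true only if one element equals the keyword
    static boolean hasExactTag(List<String> tags, String keyword) {
        return tags.contains(keyword);
    }

    // Substring match: true if any element contains the keyword
    static boolean hasTagContaining(List<String> tags, String keyword) {
        return tags.stream().anyMatch(tag -> tag.contains(keyword));
    }

    public static void main(String[] args) {
        List<String> tags = List.of("Tech", "Java");
        System.out.println(hasExactTag(tags, "Jav"));      // false
        System.out.println(hasTagContaining(tags, "Jav")); // true
    }
}
```

List.contains() only finds a tag that equals the keyword, whereas anyMatch() with String.contains() also finds partial matches, which is consistent with how we search title and intro.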

Next, let’s test whether this approach works correctly:

List<Book> byJava = fullTextSearchByLogicalOr(BOOKS, "Java");
assertThat(byJava).containsExactlyInAnyOrder(JAVA, KOTLIN);

List<Book> byArt = fullTextSearchByLogicalOr(BOOKS, "Art");
assertThat(byArt).containsExactlyInAnyOrder(JAVA, GUITAR);

List<Book> byLets = fullTextSearchByLogicalOr(BOOKS, "Let's");
assertThat(byLets).containsExactlyInAnyOrder(KOTLIN, GUITAR);

The test passes if we give it a run.

In this example, we only need to check three properties in the Book class. However, in an actual application, a full-text search might need to check a dozen properties of a class. In that case, the lambda expression would become quite long and make the Stream pipeline difficult to read and maintain.

Of course, we can extract the lambda expression as a method to solve it. Alternatively, we can create a function to generate the String representation for full-text search.
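Before moving on, the first option, extracting the lambda expression into a method, could look roughly like the sketch below. The nested Book class is a trimmed-down stand-in for the one defined earlier, and the names PredicateExtractionSketch, matchesAnyField, and fullTextSearch are assumptions made for this illustration:

```java
import java.util.List;

class PredicateExtractionSketch {

    // Trimmed-down stand-in for the article's Book class (illustration only)
    static class Book {
        private final String title;
        private final List<String> tags;
        private final String intro;

        Book(String title, List<String> tags, String intro) {
            this.title = title;
            this.tags = tags;
            this.intro = intro;
        }

        String getTitle() { return title; }
        List<String> getTags() { return tags; }
        String getIntro() { return intro; }
    }

    // Hypothetical helper: the matching logic lives in one named method
    static boolean matchesAnyField(Book book, String keyword) {
        return book.getTitle().contains(keyword)
          || book.getIntro().contains(keyword)
          || book.getTags().stream().anyMatch(tag -> tag.contains(keyword));
    }

    // The Stream pipeline stays short no matter how many fields are checked
    static List<Book> fullTextSearch(List<Book> books, String keyword) {
        return books.stream()
          .filter(book -> matchesAnyField(book, keyword))
          .toList();
    }

    public static void main(String[] args) {
        Book java = new Book("The Art of Java Programming",
          List.of("Tech", "Java"), "Java is a powerful programming language.");
        Book guitar = new Book("How to Play a Guitar",
          List.of("Art", "Music"), "Let's learn how to play a guitar.");

        System.out.println(fullTextSearch(List.of(java, guitar), "Music").size()); // 1
    }
}
```

With this shape, adding another property to the search only touches matchesAnyField(), while the pipeline itself stays unchanged.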

Next, let’s take a closer look at the String representation approach.

4. Creating a String Representation For Filtering

We know toString() returns a String representation of an object. Similarly, we can create a method to provide an object’s String representation for full-text search:

class Book {
    // ... unchanged code omitted

    public String strForFiltering() {
        String tagsStr = String.join("\n", tags);
        return String.join("\n", title, intro, tagsStr);
    }
}

As the code above shows, the strForFiltering() method joins all String values required for the full-text search into a single linebreak-separated String. If we take KOTLIN as an example, this method returns the following String:

String expected = """
    Let's Dive Into Kotlin Codes
    It is big fun learning how to write Kotlin codes.
    Tech
    Java
    Kotlin""";

assertThat(KOTLIN.strForFiltering()).isEqualTo(expected);

In this example, we use a Java text block to present the multiline String.

Then, a full-text search becomes an easy task. We just check whether the result of book.strForFiltering() contains the keyword:

List<Book> fullTextSearchByStrForFiltering(List<Book> books, String keyword) {
    return books.stream()
      .filter(book -> book.strForFiltering().contains(keyword))
      .toList();
}

Next, let’s check if this solution works as expected:

List<Book> byJava = fullTextSearchByStrForFiltering(BOOKS, "Java");
assertThat(byJava).containsExactlyInAnyOrder(JAVA, KOTLIN);

List<Book> byArt = fullTextSearchByStrForFiltering(BOOKS, "Art");
assertThat(byArt).containsExactlyInAnyOrder(JAVA, GUITAR);

List<Book> byLets = fullTextSearchByStrForFiltering(BOOKS, "Let's");
assertThat(byLets).containsExactlyInAnyOrder(KOTLIN, GUITAR);

The test passes. Therefore, this approach does the job.
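One detail of this approach is the choice of separator. The sketch below suggests why joining with a linebreak is a reasonable choice: with an empty separator, a keyword could match across the boundary between two fields. The class SeparatorSketch, the method joinFields(), and the sample values are hypothetical and only used for illustration:

```java
import java.util.List;

class SeparatorSketch {

    // Joins the searchable values with the given separator,
    // mirroring the structure of strForFiltering()
    static String joinFields(String separator, String title, String intro, List<String> tags) {
        return String.join(separator, title, intro, String.join(separator, tags));
    }

    public static void main(String[] args) {
        String title = "Learning Java";
        String intro = "Script your first program.";
        List<String> tags = List.of("Tech");

        // No separator: the end of the title and the start of the intro merge
        System.out.println(joinFields("", title, intro, tags).contains("JavaScript"));   // true

        // Linebreak separator: the field boundary is preserved
        System.out.println(joinFields("\n", title, intro, tags).contains("JavaScript")); // false
    }
}
```

As long as keywords don't contain linebreaks themselves, the separator keeps a match confined to a single field value.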

5. Creating a General Full-Text Search Method

In this section, let’s try to create a general method to perform a full-text search on any object:

boolean fullTextSearchOnObject(Object obj, String keyword, String... excludedFields) {
    Field[] fields = obj.getClass().getDeclaredFields();
    for (Field field : fields) {
        if (Arrays.stream(excludedFields).noneMatch(exceptName -> exceptName.equals(field.getName()))) {
            try {
                // setAccessible() is the call that may throw InaccessibleObjectException,
                // so it needs to be inside the try block
                field.setAccessible(true);
                Object value = field.get(obj);
                if (value != null) {
                    if (value.toString().contains(keyword)) {
                        return true;
                    }
                    if (!field.getType().isPrimitive() && !(value instanceof String)
                      && fullTextSearchOnObject(value, keyword, excludedFields)) {
                        return true;
                    }
                }
            } catch (InaccessibleObjectException | IllegalAccessException ignored) {
                // ignore reflection exceptions
            }
        }
    }
    return false;
}

The fullTextSearchOnObject() method accepts three parameters: the object we want to search, the keyword, and the names of the fields to exclude.

The implementation uses reflection to retrieve all fields of the object. Then, we loop through the fields, skip excludedFields using Stream.noneMatch(), and obtain the field’s value by field.get(obj). Since we aim to perform a String-based search, we convert the value to a String using toString() and check if the field’s value contains the search term.

Our object may contain nested objects. Therefore, we recursively check the fields of nested objects. If a field’s value is an object (that is, the field isn’t a primitive and the value isn’t a String), the method calls fullTextSearchOnObject() on that value, enabling us to search through deeply nested structures.
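To make the recursion more concrete, here is a minimal, self-contained sketch that runs the same logic against a small nested structure. The classes NestedSearchSketch, Article, and Author and their sample values are hypothetical. Note that this sketch calls setAccessible() inside the try block, so that an InaccessibleObjectException is caught as well:

```java
import java.lang.reflect.Field;
import java.lang.reflect.InaccessibleObjectException;
import java.util.Arrays;

class NestedSearchSketch {

    // Hypothetical nested type without a custom toString()
    static class Author {
        private final String name;
        Author(String name) { this.name = name; }
    }

    // Hypothetical outer type holding a nested Author
    static class Article {
        private final String headline;
        private final Author author;
        private final int words;
        Article(String headline, Author author, int words) {
            this.headline = headline;
            this.author = author;
            this.words = words;
        }
    }

    static boolean fullTextSearchOnObject(Object obj, String keyword, String... excludedFields) {
        for (Field field : obj.getClass().getDeclaredFields()) {
            if (Arrays.asList(excludedFields).contains(field.getName())) {
                continue; // skip excluded fields
            }
            try {
                field.setAccessible(true);
                Object value = field.get(obj);
                if (value == null) {
                    continue;
                }
                if (value.toString().contains(keyword)) {
                    return true;
                }
                // Recurse into nested objects, but not into primitives or Strings
                if (!field.getType().isPrimitive() && !(value instanceof String)
                  && fullTextSearchOnObject(value, keyword, excludedFields)) {
                    return true;
                }
            } catch (InaccessibleObjectException | IllegalAccessException ignored) {
                // ignore reflection exceptions
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Article article = new Article("Reflection Basics", new Author("Jane Doe"), 1200);

        // "Doe" only appears in the nested Author.name field
        System.out.println(fullTextSearchOnObject(article, "Doe"));           // true
        System.out.println(fullTextSearchOnObject(article, "Doe", "author")); // false
    }
}
```

The keyword "Doe" is found only through the recursive call on the author field, and excluding that field by name turns the match off again.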

Now, we can make use of fullTextSearchOnObject() to create a method to full-text filter a List of Book objects:

List<Book> fullTextSearchByReflection(List<Book> books, String keyword, String... excludeFields) {
    return books.stream()
      .filter(book -> fullTextSearchOnObject(book, keyword, excludeFields))
      .toList();
}

Next, let’s run the same test to verify if this approach works as expected:

List<Book> byJava = fullTextSearchByReflection(BOOKS, "Java", "pages");
assertThat(byJava).containsExactlyInAnyOrder(JAVA, KOTLIN);

List<Book> byArt = fullTextSearchByReflection(BOOKS, "Art", "pages");
assertThat(byArt).containsExactlyInAnyOrder(JAVA, GUITAR);

List<Book> byLets = fullTextSearchByReflection(BOOKS, "Let's", "pages");
assertThat(byLets).containsExactlyInAnyOrder(KOTLIN, GUITAR);

As we can see, we passed “pages” as the excluded field in the test above. If required, we can conveniently exclude more fields for a customized full-text search:

List<Book> byArtExcludeTag = fullTextSearchByReflection(BOOKS, "Art", "tags", "pages");
assertThat(byArtExcludeTag).containsExactlyInAnyOrder(JAVA);

This example showcases how to perform a full-text search only on title and intro fields. As GUITAR only contains the search keyword “Art” in tags, it gets filtered out.

Using reflection and recursion, we can implement a full-text search on a Java object that checks all fields, including nested fields, for a given String keyword. This approach allows us to dynamically search through an object’s fields without explicitly knowing the structure of the class.

6. Conclusion

In this article, we’ve explored different solutions to filtering a List by any field matching a String in Java.

These techniques will help us write cleaner, more maintainable code while providing a powerful way to search and filter Java objects.

As always, the complete source code for the examples is available over on GitHub.