1. Introduction

Dubbo is an open-source RPC and microservice framework from Alibaba.

Among other things, it helps enhance service governance and makes it possible to refactor traditional monolithic applications smoothly into a scalable distributed architecture.

In this article, we’ll give an introduction to Dubbo and its most important features.

2. Architecture

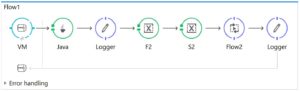

Dubbo distinguishes a few roles:

- Provider – where the service is exposed; a provider registers its service with the registry

- Container – where the service is initialized, loaded and run

- Consumer – who invokes remote services; a consumer subscribes in the registry to the services it needs

- Registry – where services are registered and discovered

- Monitor – records statistics for services, for example, the frequency of service invocations in a given time interval


(source: http://dubbo.io/images/dubbo-architecture.png)

Connections between a provider, a consumer and a registry are persistent, so whenever a service provider is down, the registry can detect the failure and notify the consumers.
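To make the register/subscribe/notify interplay between these roles concrete, here is a minimal in-memory sketch of a registry that pushes updated provider lists to subscribed consumers. The class and its methods (`ToyRegistry`, `register`, `unregister`, `subscribe`) are hypothetical and are not Dubbo's registry API; a real registry detects a dead provider through the persistent connection, whereas here `unregister` stands in for that detection.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Hypothetical in-memory registry for illustration; this is NOT Dubbo's RegistryService API
class ToyRegistry {
    // service name -> addresses of the providers currently online
    private final Map<String, List<String>> providers = new ConcurrentHashMap<>();
    // service name -> consumer callbacks that receive the updated address list
    private final Map<String, List<Consumer<List<String>>>> subscribers = new ConcurrentHashMap<>();

    // Provider side: announce a new address for a service
    void register(String service, String address) {
        providers.computeIfAbsent(service, k -> new CopyOnWriteArrayList<>()).add(address);
        notifySubscribers(service);
    }

    // Stands in for the registry detecting that a provider has gone down
    void unregister(String service, String address) {
        List<String> addresses = providers.get(service);
        if (addresses != null && addresses.remove(address)) {
            notifySubscribers(service);
        }
    }

    // Consumer side: receive the current list immediately and every change afterwards
    void subscribe(String service, Consumer<List<String>> listener) {
        subscribers.computeIfAbsent(service, k -> new CopyOnWriteArrayList<>()).add(listener);
        listener.accept(snapshot(service));
    }

    private List<String> snapshot(String service) {
        return new ArrayList<>(providers.getOrDefault(service, Collections.emptyList()));
    }

    private void notifySubscribers(String service) {
        List<String> current = snapshot(service);
        for (Consumer<List<String>> listener : subscribers.getOrDefault(service, Collections.emptyList())) {
            listener.accept(current);
        }
    }
}
```

A consumer would keep the most recent list it received and choose among those addresses on each call, so it stops routing to a provider as soon as the registry reports it gone.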

The registry and monitor are optional. Consumers could connect directly to service providers, but the stability of the whole system would be affected.

3. Maven Dependency

Before we dive in, let’s add the following dependency to our pom.xml:

```xml
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>dubbo</artifactId>
    <version>2.5.7</version>
</dependency>
```

The latest version can be found here.

4. Bootstrapping

Now let’s try out the basic features of Dubbo.

This is a minimally invasive framework, and lots of its features depend on external configurations or annotations.

The official recommendation is to use XML configuration files, because Dubbo's configuration is built on a Spring container (currently Spring 4.3.10).

We’ll demonstrate most of its features using XML configuration.

4.1. Multicast Registry – Service Provider

As a quick start, we’ll only need a service provider, a consumer and an “invisible” registry. The registry is invisible because we are using a multicast network.

In the following example, the provider only says “hi” to its consumers:

```java
public interface GreetingsService {
    String sayHi(String name);
}

public class GreetingsServiceImpl implements GreetingsService {
    @Override
    public String sayHi(String name) {
        return "hi, " + name;
    }
}
```

To make a remote procedure call, the consumer must share a common interface with the service provider, so the GreetingsService interface must be available to the consumer as well.

4.2. Multicast Registry – Service Registration

Let's now register GreetingsService with the registry. If both providers and consumers are on the same local network, a very convenient option is a multicast registry:

```xml
<dubbo:application name="demo-provider" version="1.0"/>
<dubbo:registry address="multicast://224.1.1.1:9090"/>
<dubbo:protocol name="dubbo" port="20880"/>
<bean id="greetingsService" class="com.baeldung.dubbo.remote.GreetingsServiceImpl"/>
<dubbo:service interface="com.baeldung.dubbo.remote.GreetingsService"
  ref="greetingsService"/>
```

With the bean configuration above, we have just exposed our GreetingsService at the URL dubbo://127.0.0.1:20880 and registered the service with the multicast address specified in <dubbo:registry />.

In the provider's configuration, we also declared our application metadata, the interface to publish and its implementation using <dubbo:application />, <dubbo:service /> and <bean />, respectively.

The dubbo protocol is one of many protocols the framework supports. It is built on Java NIO non-blocking I/O, and it's the default protocol.

We’ll discuss it in more detail later in this article.

4.3. Multicast Registry – Service Consumer

On the consumer side, we need to specify the interface to invoke and where to find the remote service, which here is the multicast registry:

```xml
<dubbo:application name="demo-consumer" version="1.0"/>
<dubbo:registry address="multicast://224.1.1.1:9090"/>
<dubbo:reference interface="com.baeldung.dubbo.remote.GreetingsService"
  id="greetingsService"/>
```

Now that everything's set up, let's see it in action:

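```java
import static org.junit.Assert.assertEquals;
import static org.junit.Assert.assertNotNull;

import com.baeldung.dubbo.remote.GreetingsService;
import org.junit.Before;
import org.junit.Test;
import org.springframework.context.support.ClassPathXmlApplicationContext;

public class MulticastRegistryTest {

    @Before
    public void initRemote() {
        // Start the provider, which exports GreetingsService to the multicast registry
        ClassPathXmlApplicationContext remoteContext
          = new ClassPathXmlApplicationContext("multicast/provider-app.xml");
        remoteContext.start();
    }

    @Test
    public void givenProvider_whenConsumerSaysHi_thenGotResponse() {
        // Start the consumer, which subscribes to the registry and creates a proxy
        ClassPathXmlApplicationContext localContext
          = new ClassPathXmlApplicationContext("multicast/consumer-app.xml");
        localContext.start();
        GreetingsService greetingsService
          = (GreetingsService) localContext.getBean("greetingsService");
        String hiMessage = greetingsService.sayHi("baeldung");

        assertNotNull(hiMessage);
        assertEquals("hi, baeldung", hiMessage);
    }
}
```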

When the provider's remoteContext starts, Dubbo will automatically load GreetingsService and register it with a given registry. In this case, it's a multicast registry.

The consumer subscribes to the multicast registry and creates a proxy of GreetingsService in the context. When our local client invokes the sayHi method, it’s transparently invoking a remote service.
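Dubbo generates the consumer-side proxy itself. As a rough illustration of the idea only, the sketch below uses the JDK's `java.lang.reflect.Proxy` to implement the shared interface and hand every call to a transport. `RemoteTransport`, `LoopbackTransport` and `ClientProxyFactory` are hypothetical names; the loopback transport simply calls an in-process object where a real one would serialize the call and send it to a provider. The interface and implementation are repeated so the sketch compiles on its own.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;

// Repeated from the article so that this sketch compiles on its own
interface GreetingsService {
    String sayHi(String name);
}

class GreetingsServiceImpl implements GreetingsService {
    @Override
    public String sayHi(String name) {
        return "hi, " + name;
    }
}

// Hypothetical transport: a real one would serialize the call and send it over the network
interface RemoteTransport {
    Object invoke(Method method, Object[] args) throws Exception;
}

// Stand-in transport that "sends" the call to an in-process object instead of a remote provider
class LoopbackTransport implements RemoteTransport {
    private final Object target;

    LoopbackTransport(Object target) {
        this.target = target;
    }

    @Override
    public Object invoke(Method method, Object[] args) throws Exception {
        return method.invoke(target, args);
    }
}

class ClientProxyFactory {
    // Builds an object implementing the shared interface that forwards every call to the transport
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> iface, RemoteTransport transport) {
        return (T) Proxy.newProxyInstance(
            iface.getClassLoader(),
            new Class<?>[] { iface },
            (proxy, method, args) -> transport.invoke(method, args));
    }
}
```

The calling code only ever sees `GreetingsService`; whether a call crosses the network is hidden behind the transport, which is what makes the remote invocation transparent.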

We mentioned that the registry is optional, meaning that the consumer could connect directly to the provider, via the exposed port:

```xml
<dubbo:reference interface="com.baeldung.dubbo.remote.GreetingsService"
  id="greetingsService" url="dubbo://127.0.0.1:20880"/>
```

The procedure is similar to calling a traditional web service, but Dubbo makes it plain, simple and lightweight.

4.4. Simple Registry

Note that when we use an "invisible" multicast registry, the registry service is not standalone, and it's only applicable within a restricted local network.

To explicitly set up a manageable registry, we can use a SimpleRegistryService.

After loading the following beans configuration into Spring context, a simple registry service is started:

```xml
<dubbo:application name="simple-registry" />
<dubbo:protocol port="9090" />
<dubbo:service interface="com.alibaba.dubbo.registry.RegistryService"
  ref="registryService" registry="N/A" ondisconnect="disconnect">
    <dubbo:method name="subscribe">
        <dubbo:argument index="1" callback="true" />
    </dubbo:method>
    <dubbo:method name="unsubscribe">
        <dubbo:argument index="1" callback="true" />
    </dubbo:method>
</dubbo:service>

<bean class="com.alibaba.dubbo.registry.simple.SimpleRegistryService"
  id="registryService" />
```

Note that the SimpleRegistryService class is not contained in the artifact, so we copied its source code directly from the GitHub repository.

Then we need to adjust the registry configuration of both the provider and the consumer:

```xml
<dubbo:registry address="127.0.0.1:9090"/>
```

SimpleRegistryService can be used as a standalone registry for testing, but it's not recommended for production environments.

4.5. Java Configuration

Configuration via the Java API, property files and annotations is also supported. However, property files and annotations are only suitable if our architecture isn't very complex.

Let’s see how our previous XML configurations for multicast registry can be translated into API configuration. First, the provider is set up as follows:

```java
// Application metadata, the counterpart of <dubbo:application />
ApplicationConfig application = new ApplicationConfig();
application.setName("demo-provider");
application.setVersion("1.0");

// Registry address, the counterpart of <dubbo:registry />
RegistryConfig registryConfig = new RegistryConfig();
registryConfig.setAddress("multicast://224.1.1.1:9090");

// Service to export, the counterpart of <dubbo:service />
ServiceConfig<GreetingsService> service = new ServiceConfig<>();
service.setApplication(application);
service.setRegistry(registryConfig);
service.setInterface(GreetingsService.class);
service.setRef(new GreetingsServiceImpl());
service.export();
```

Now that the service is already exposed via the multicast registry, let’s consume it in a local client:

```java
ApplicationConfig application = new ApplicationConfig();
application.setName("demo-consumer");
application.setVersion("1.0");

RegistryConfig registryConfig = new RegistryConfig();
registryConfig.setAddress("multicast://224.1.1.1:9090");

// Remote reference, the counterpart of <dubbo:reference />
ReferenceConfig<GreetingsService> reference = new ReferenceConfig<>();
reference.setApplication(application);
reference.setRegistry(registryConfig);
reference.setInterface(GreetingsService.class);

// get() returns a proxy that invokes the remote service
GreetingsService greetingsService = reference.get();
String hiMessage = greetingsService.sayHi("baeldung");
```

Although the snippet above works just like the previous XML configuration example, it is a little more tedious. For the time being, XML configuration should be the first choice if we intend to make full use of Dubbo.

5. Protocol Support

The framework supports multiple protocols, including dubbo, RMI, hessian, HTTP, web service, thrift, memcached and redis. Most of these protocols look familiar, except for dubbo, so let's see what's new in this protocol.

The dubbo protocol keeps a persistent connection between providers and consumers. The long-lived connection combined with NIO non-blocking network communication gives it good performance when transmitting small data packets (<100K).

There are several configurable properties, such as port, number of connections per consumer, maximum accepted connections, etc.

```xml
<dubbo:protocol name="dubbo" port="20880"
  connections="2" accepts="1000" />
```

Dubbo also supports exposing services via different protocols all at once:

```xml
<dubbo:protocol name="dubbo" port="20880" />
<dubbo:protocol name="rmi" port="1099" />
<dubbo:service interface="com.baeldung.dubbo.remote.GreetingsService"
  version="1.0.0" ref="greetingsService" protocol="dubbo" />
<dubbo:service interface="com.baeldung.dubbo.remote.AnotherService"
  version="1.0.0" ref="anotherService" protocol="rmi" />
```

And yes, we can expose different services using different protocols, as shown in the snippet above. The underlying transporters, serialization implementations and other common properties relating to networking are configurable as well.

6. Result Caching

Dubbo natively supports caching remote results to speed up access to hot data. It's as simple as adding a cache attribute to the bean reference:

```xml
<dubbo:reference interface="com.baeldung.dubbo.remote.GreetingsService"
  id="greetingsService" cache="lru" />
```

Here we configured a least-recently-used cache. To verify the caching behavior, we'll slightly modify the previous standard implementation (let's call it the "special implementation"):

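```java
import static java.util.concurrent.TimeUnit.SECONDS;

public class GreetingsServiceSpecialImpl implements GreetingsService {
    @Override
    public String sayHi(String name) {
        try {
            // Simulate a slow provider
            SECONDS.sleep(5);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "hi, " + name;
    }
}
```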

After starting up the provider, we can verify on the consumer's side that the result is cached when invoking the service more than once:

```java
@Test
public void givenProvider_whenConsumerSaysHi_thenGotResponse() {
    ClassPathXmlApplicationContext localContext
      = new ClassPathXmlApplicationContext("multicast/consumer-app.xml");
    localContext.start();
    GreetingsService greetingsService
      = (GreetingsService) localContext.getBean("greetingsService");

    // First call: handled by the slow provider, takes more than 5 seconds
    long before = System.currentTimeMillis();
    String hiMessage = greetingsService.sayHi("baeldung");
    long timeElapsed = System.currentTimeMillis() - before;
    assertTrue(timeElapsed > 5000);
    assertNotNull(hiMessage);
    assertEquals("hi, baeldung", hiMessage);

    // Second call with the same argument: answered from the cache
    before = System.currentTimeMillis();
    hiMessage = greetingsService.sayHi("baeldung");
    timeElapsed = System.currentTimeMillis() - before;
    assertTrue(timeElapsed < 1000);
    assertNotNull(hiMessage);
    assertEquals("hi, baeldung", hiMessage);
}
```

Here the consumer is invoking the special service implementation, so it took more than 5 seconds for the invocation to complete the first time. When we invoke again, the sayHi method completes almost immediately, as the result is returned from the cache.

Note that thread-local cache and JCache are also supported.
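The eviction policy behind `cache="lru"` can be illustrated with a small least-recently-used map built on `LinkedHashMap` in access order. This is a generic LRU sketch, not Dubbo's own cache implementation; the class name, the capacity and the use of the argument as the cache key are assumptions for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Generic least-recently-used result cache (illustrative, not Dubbo's implementation)
class LruResultCache<K, V> {
    private final Map<K, V> entries;

    LruResultCache(final int capacity) {
        // accessOrder = true keeps entries ordered from least to most recently used
        this.entries = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                return size() > capacity; // drop the least-recently-used entry when full
            }
        };
    }

    // Returns a cached result, or calls the loader (e.g. the remote service) and caches its result
    synchronized V get(K key, Function<K, V> loader) {
        V cached = entries.get(key);
        if (cached != null) {
            return cached;
        }
        V value = loader.apply(key);
        entries.put(key, value);
        return value;
    }

    // Membership check that does not count as an access
    synchronized boolean contains(K key) {
        return entries.containsKey(key);
    }
}
```

A repeated call with the same key skips the loader entirely, which is why the second `sayHi("baeldung")` in the test returns almost immediately; once the cache is full, the entry touched longest ago is evicted first.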

7. Cluster Support

Dubbo helps us scale our services freely with its load balancing and several fault tolerance strategies. Here, let's assume we use ZooKeeper as our registry to manage services in a cluster. Providers can register their services in ZooKeeper like this:

```xml
<dubbo:registry address="zookeeper://127.0.0.1:2181"/>
```

Note that we need these additional dependencies in the POM:

```xml
<dependency>
    <groupId>org.apache.zookeeper</groupId>
    <artifactId>zookeeper</artifactId>
    <version>3.4.11</version>
</dependency>
<dependency>
    <groupId>com.101tec</groupId>
    <artifactId>zkclient</artifactId>
    <version>0.10</version>
</dependency>
```

The latest versions of zookeeper dependency and zkclient can be found here and here.

7.1. Load Balancing

Currently, the framework supports a few load-balancing strategies:

- random

- round-robin

- least-active

- consistent-hash.

In the following example, we have two service implementations as providers in a cluster. The requests are routed using the round-robin approach.
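The round-robin idea itself is simple. The sketch below cycles through a fixed list of provider addresses with an atomic counter; it is an unweighted illustration and not Dubbo's round-robin load balancer, which also takes provider weights into account. The class name is hypothetical.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Unweighted round-robin selection over a fixed provider list (hypothetical class)
class RoundRobinSelector {
    private final List<String> providers;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinSelector(List<String> providers) {
        if (providers.isEmpty()) {
            throw new IllegalArgumentException("no providers");
        }
        this.providers = providers;
    }

    String next() {
        // floorMod keeps the index non-negative even after the counter overflows
        int index = Math.floorMod(counter.getAndIncrement(), providers.size());
        return providers.get(index);
    }
}
```

With two providers and six calls, each provider receives three requests, which is the reasoning behind the 2.5-second expectation in the test below.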

First, let’s set up service providers:

```java
@Before
public void initRemote() {
    ExecutorService executorService = Executors.newFixedThreadPool(2);
    // Provider configured in provider-app-default.xml (the fast one)
    executorService.submit(() -> {
        ClassPathXmlApplicationContext remoteContext
          = new ClassPathXmlApplicationContext("cluster/provider-app-default.xml");
        remoteContext.start();
    });
    // Provider configured in provider-app-special.xml (the slow one)
    executorService.submit(() -> {
        ClassPathXmlApplicationContext backupRemoteContext
          = new ClassPathXmlApplicationContext("cluster/provider-app-special.xml");
        backupRemoteContext.start();
    });
}
```

Now we have a standard "fast provider" that responds immediately, and a special "slow provider" that sleeps for 5 seconds on every request.

After running 6 times with the round-robin strategy, we expect the average response time to be at least 2.5 seconds:

```java
@Test
public void givenProviderCluster_whenConsumerSaysHi_thenResponseBalanced() {
    ClassPathXmlApplicationContext localContext
      = new ClassPathXmlApplicationContext("cluster/consumer-app-lb.xml");
    localContext.start();
    GreetingsService greetingsService
      = (GreetingsService) localContext.getBean("greetingsService");

    List<Long> elapseList = new ArrayList<>(6);
    for (int i = 0; i < 6; i++) {
        long current = System.currentTimeMillis();
        String hiMessage = greetingsService.sayHi("baeldung");
        assertNotNull(hiMessage);
        elapseList.add(System.currentTimeMillis() - current);
    }

    OptionalDouble avgElapse = elapseList
      .stream()
      .mapToLong(e -> e)
      .average();
    assertTrue(avgElapse.isPresent());
    assertTrue(avgElapse.getAsDouble() > 2500.0);
}
```
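As a rough sanity check on the 2.5-second threshold, assume strict alternation (three calls per provider) and treat the fast provider's response time as roughly zero:

```latex
\bar{t} \approx \frac{3 \times 5000\,\text{ms} + 3 \times 0\,\text{ms}}{6} = 2500\,\text{ms}
```

Every slow call takes at least 5000 ms and no call takes negative time, so the measured average lands slightly above this figure, which is why the test can assert a strict `> 2500.0`.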

Moreover, load balancing is dynamic. The next example demonstrates that, with the round-robin strategy, the consumer automatically picks up a new service provider as a candidate once it comes online.

The "slow provider" is registered 2 seconds after the system starts:
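To see why a newly registered provider starts receiving traffic without restarting the consumer, consider a round-robin selector that reads the current provider list on every call. In this sketch, the hypothetical `DynamicRoundRobin` class and its `CopyOnWriteArrayList` stand in for the address list a registry pushes to the consumer.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.atomic.AtomicInteger;

// Round-robin over a provider list that can grow while the consumer is running (hypothetical class)
class DynamicRoundRobin {
    private final List<String> providers = new CopyOnWriteArrayList<>();
    private final AtomicInteger counter = new AtomicInteger();

    // Would be triggered by a registry notification when a new provider registers
    void onProviderOnline(String address) {
        providers.add(address);
    }

    String next() {
        // Take a consistent snapshot on every call, so newly added providers are used right away
        String[] current = providers.toArray(new String[0]);
        if (current.length == 0) {
            throw new IllegalStateException("no provider online");
        }
        return current[Math.floorMod(counter.getAndIncrement(), current.length)];
    }
}
```

Calls made before the second provider appears all go to the first one; afterwards the two alternate, which is the behavior the following test relies on.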

```java
@Before
public void initRemote() {
    ExecutorService executorService = Executors.newFixedThreadPool(2);
    executorService.submit(() -> {
        ClassPathXmlApplicationContext remoteContext
          = new ClassPathXmlApplicationContext("cluster/provider-app-default.xml");
        remoteContext.start();
    });
    executorService.submit(() -> {
        // Delay the slow provider's registration by 2 seconds
        SECONDS.sleep(2);
        ClassPathXmlApplicationContext backupRemoteContext
          = new ClassPathXmlApplicationContext("cluster/provider-app-special.xml");
        backupRemoteContext.start();
        // Returning a value makes this lambda a Callable, which may throw
        // the checked InterruptedException from sleep()
        return null;
    });
}
```

The consumer invokes the remote service once per second. After six invocations, we expect the average response time to be greater than 1.666 seconds:

```java
@Test
public void givenProviderCluster_whenConsumerSaysHi_thenResponseBalanced()
  throws InterruptedException {
    ClassPathXmlApplicationContext localContext
      = new ClassPathXmlApplicationContext("cluster/consumer-app-lb.xml");
    localContext.start();
    GreetingsService greetingsService
      = (GreetingsService) localContext.getBean("greetingsService");

    List<Long> elapseList = new ArrayList<>(6);
    for (int i = 0; i < 6; i++) {
        long current = System.currentTimeMillis();
        String hiMessage = greetingsService.sayHi("baeldung");
        assertNotNull(hiMessage);
        elapseList.add(System.currentTimeMillis() - current);
        SECONDS.sleep(1);
    }

    OptionalDouble avgElapse = elapseList
      .stream()
      .mapToLong(e -> e)
      .average();
    assertTrue(avgElapse.isPresent());
    assertTrue(avgElapse.getAsDouble() > 1666.0);
}
```
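The 1666.0 threshold follows from the same kind of estimate. Assuming the slow provider ends up serving two of the six calls (the early calls made before it registers go to the fast provider), and again treating fast calls as taking roughly 0 ms, the average is about:

```latex
\bar{t} \approx \frac{2 \times 5000\,\text{ms} + 4 \times 0\,\text{ms}}{6} \approx 1666.7\,\text{ms}
```

The one-second pauses between calls are not part of the measured time, since each elapsed value is recorded before `SECONDS.sleep(1)`.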

Note that the load balancer can be configured both on the consumer’s side and on the provider’s side. Here’s an example of consumer-side configuration:

```xml
<dubbo:reference interface="com.baeldung.dubbo.remote.GreetingsService"
  id="greetingsService" loadbalance="roundrobin" />
```

7.2. Fault Tolerance

Several fault tolerance strategies are supported in Dubbo, including:

- fail-over

- fail-safe

- fail-fast

- fail-back

- forking.
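Of the strategies listed above, forking is perhaps the least familiar: the consumer calls several providers in parallel and uses the first successful reply (in Dubbo it is selected with `cluster="forking"`). The sketch below shows the idea with the JDK's `ExecutorService.invokeAny`; the `ForkingInvoker` class and the provider lambdas in the usage are made up for illustration and are not Dubbo's implementation.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Forking sketch: call every provider in parallel and keep the first successful reply
class ForkingInvoker {
    static String invoke(List<Callable<String>> providers) {
        ExecutorService pool = Executors.newFixedThreadPool(providers.size());
        try {
            // invokeAny returns the result of a task that completed without throwing
            // and cancels the tasks that are still running
            return pool.invokeAny(providers);
        } catch (ExecutionException e) {
            throw new IllegalStateException("all providers failed", e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } finally {
            pool.shutdownNow();
        }
    }
}
```

The trade-off is visible in the code: latency is bounded by the fastest healthy provider, at the cost of occupying several providers for every single request.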

In the case of fail-over, when one provider fails, the consumer can try with some other service providers in the cluster.

The fault tolerance strategies are configured like the following for service providers:

```xml
<dubbo:service interface="com.baeldung.dubbo.remote.GreetingsService"
  ref="greetingsService" cluster="failover"/>
```

To demonstrate service fail-over in action, let’s create a fail-over implementation of GreetingsService:

```java
public class GreetingsFailoverServiceImpl implements GreetingsService {
    @Override
    public String sayHi(String name) {
        return "hi, failover " + name;
    }
}
```

Recall that our special service implementation GreetingsServiceSpecialImpl sleeps for 5 seconds on each request.

If the consumer treats any response that takes longer than 2 seconds as a failed request, we have a fail-over scenario:

```xml
<dubbo:reference interface="com.baeldung.dubbo.remote.GreetingsService"
  id="greetingsService" retries="2" timeout="2000" />
```
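Conceptually, `timeout="2000"` turns a slow reply into a failure and `retries="2"` lets the consumer try again, on another provider where possible. The sketch below mimics that flow with plain JDK classes: each attempt runs with a timeout, and on timeout or error the next provider in the list is tried. The `FailoverInvoker` class, the list-based provider order and the reading of `retries` as "extra attempts after the first" are assumptions for illustration; in Dubbo, the provider for each attempt is chosen by the configured load balancer.

```java
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Fail-over sketch: a failed or timed-out call is retried on the next provider in the list
class FailoverInvoker {
    private final List<Callable<String>> providers;
    private final int retries;         // extra attempts after the first one
    private final long timeoutMillis;  // a reply slower than this counts as a failure

    FailoverInvoker(List<Callable<String>> providers, int retries, long timeoutMillis) {
        if (providers.isEmpty() || retries < 0) {
            throw new IllegalArgumentException("need at least one provider and retries >= 0");
        }
        this.providers = providers;
        this.retries = retries;
        this.timeoutMillis = timeoutMillis;
    }

    String invoke() {
        ExecutorService pool = Executors.newCachedThreadPool();
        try {
            IllegalStateException lastFailure = null;
            for (int attempt = 0; attempt <= retries; attempt++) {
                // Rotate through the providers so a retry lands on a different one when possible
                Callable<String> provider = providers.get(attempt % providers.size());
                Future<String> reply = pool.submit(provider);
                try {
                    return reply.get(timeoutMillis, TimeUnit.MILLISECONDS);
                } catch (TimeoutException | ExecutionException e) {
                    reply.cancel(true); // abandon this provider and fail over
                    lastFailure = new IllegalStateException("attempt " + attempt + " failed", e);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("interrupted while waiting", e);
                }
            }
            throw lastFailure;
        } finally {
            pool.shutdownNow();
        }
    }
}
```

With a slow provider first and the fail-over provider second, the first attempt times out and the second returns the fail-over greeting, which mirrors what the following test checks against the real cluster.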

After starting two providers, we can verify the fail-over behavior with the following snippet:

```java
@Test
public void whenConsumerSaysHi_thenGotFailoverResponse() {
    ClassPathXmlApplicationContext localContext
      = new ClassPathXmlApplicationContext(
        "cluster/consumer-app-failtest.xml");
    localContext.start();
    GreetingsService greetingsService
      = (GreetingsService) localContext.getBean("greetingsService");
    String hiMessage = greetingsService.sayHi("baeldung");

    assertNotNull(hiMessage);
    assertEquals("hi, failover baeldung", hiMessage);
}
```

8. Summary

In this tutorial, we took a small bite of Dubbo. Most users are attracted by its simplicity and rich and powerful features.

Aside from what we introduced in this article, the framework has a number of features yet to be explored, such as parameter validation, notification and callback, generalized implementation and reference, remote result grouping and merging, service upgrade and backward compatibility, to name just a few.

As always, the full implementation can be found over on GitHub.
