1. Introduction

In this article, we'll understand the core architecture and important elements of a geospatial application. We'll begin by understanding what a geospatial application is and typical challenges in building one.

One of the important aspects of a geospatial application is representing useful data on intuitive maps. Although, in this article, we'll mostly focus on handling geospatial data at the backend and the options available to us in the industry today.

2. What Are Geospatial Applications?

Let's begin by understanding what we mean by a geospatial application. These are basically applications that make use of geospatial data to deliver their core features.

In simple terms, geospatial data are any data that represent places, locations, maps, navigation, and so on. Even without any fancy definition, we're quite surrounded by these applications. For instance, our favorite ride-sharing applications, food-delivery applications, and movie-booking applications are all geospatial applications.

Geospatial data is basically information that describes objects, events, or other features with a location on or near the earth's surface. For instance, think of an application that can suggest to us the nearest theaters playing our favorite Shakespeare plays in the evening today. It can do so by combining the location information of theaters with the attribute information of the plays and the temporal information of the events.

There are several other useful applications of geospatial data that deliver day-to-day value to us — for example, when we try to locate a cab nearby at any hour of the day that is willing to take us to our destination. Or when we can't wait for that important shipment to arrive and, thankfully, can locate where exactly it is in transit.

In fact, this has become a fundamental requirement of several applications that we use quite often these days.

3. Geospatial Technologies

Before we understand the nuances of building a geospatial application, let's go through some of the core technologies that empower such applications. These are the underlying technologies that help us generate, process, and present geospatial data in a useful form.

Remote Sensing (RS) is the process of detecting and monitoring the physical characteristics of an area by measuring its reflected and emitted radiation at a distance. Typically this is done using remote sensing satellites. It has significant usage in the fields of surveying, intelligence, and also commercial applications.

Global Positioning Systems (GPS) refers to a satellite-based navigation system based on a network of 24 satellites flying in the Medium Earth Orbit (MEO). It can provide geolocation and time information to a suitable receiver anywhere on the Earth where it has an unobstructed line-of-sight to four or more GPS satellites.

Geographic Information Systems (GIS) is a system that creates, manages, analyzes, and maps all types of data. For instance, it helps us to integrate location data with more descriptive information like what is present in that location. It helps improve communication and decision-making in several industries.

4. Challenges in Building a Geospatial Application

To understand what design choices we should make when building a geospatial application, it's important to know the challenges involved. Typically, geospatial applications require real-time analysis of a large volume of geospatial data. For instance, finding the quickest alternate route to a recent hit place with a natural disaster is crucial for first responders.

So basically, one of the underpinning requirements of a geospatial application is storing tons of geospatial data and facilitating arbitrary queries with very low latency. Now, it's also important to understand the nature of spatial data and why it requires special handling. Basically, spatial data represents objects defined in geometric space.

Let's imagine that we have several locations of interest around a city. A location is typically described by its latitude, longitude, and (possibly) elevation:

![]()

Now, what we're really interested in is to find the nearby locations to a given location. So, we need to compute the distance from this location to all the possible locations. Such queries are quite atypical of regular database queries we're familiar with. These are known as spatial queries. They involve geometric data types and consider the spatial relationship between these geometries.

We already know that no production database would probably survive without efficient indices. That's also true for spatial data. However, due to its nature, regular indices would not be very efficient for spatial data and the types of spatial queries we want to perform. Hence, we need specialized indices known as spatial indices that can help us perform spatial operations more efficiently.

5. Spatial Data Types and Queries

Now that we understand the challenges in dealing with spatial data, it's important to note several types of spatial data. Moreover, we can perform several interesting queries on them to serve unique requirements. We'll cover some of these data types and the operations we can perform on them.

We normally talk about spatial data with respect to a spatial reference system. This is composed of a coordinate system and a datum. There are several coordinate systems like affine, cylindrical, cartesian, ellipsoidal, linear, polar, spherical, and vertical. A datum is a set of parameters that define the position of the origin, the scale, and the orientation of a coordinate system.

Broadly speaking, many databases supporting spatial data divide them into two categories, geometry and geography:

![]()

Geometry stores spatial data on a flat coordinate system. This helps us represent shapes like points, lines, and regions with their coordinates in cartesian space. Geography stores spatial data based on a round-earth coordinate system. This is useful in representing the same shapes on the surface of the Earth with latitude and longitude coordinates.

There are two fundamental types of inquiry we can make with spatial data. These are basically to find nearest neighbors or to send different types of range queries. We've already seen examples of queries to find the nearest neighbors earlier. The general idea is to identify a certain number of items nearest to a query point.

The other important type of query is the range query. Here, we're interested to know all the items that fall within a query range. The query range can be a rectangle or a circle with a certain radius from a query point. For instance, we can use this kind of query to identify all Italian restaurants within a two-mile radius from where we're standing.

6. Data Structures for Spatial Data

Now, we'll understand some of the data structures that are more appropriate for building spatial indices. This will help us understand how they are different from regular indices and why they're more efficient in handling spatial operations. Invariably, almost all these data structures are variations of the tree data structure.

6.1. Regular Database Index

A database index is basically a data structure that improves the speed of data retrieval operations. Without an index, we would have to go through all the rows to search for the row we're interested in. But, for a table of significant size, even going through the index can take a significant amount of time.

However, it's important to reduce the number of steps to fetch a key and reduce the number of disk operations to do so. A B-tree or a balanced tree is a self-balancing tree data structure that stores several sorted key-value pairs in every node. This helps pull a larger set of keys in the processor cache in a single read operation from the disk.

While a B-tree works pretty well, generally, we use a B+tree for building a database index. A B+tree is very similar to a B-tree except for the fact that it stores values or data only at the leaf nodes:

![]()

Here, all the leaf nodes are also linked and, hence, provide ordered access to the key-value pairs. The benefit here is that the leaf nodes provide the first level of the index, while the internal nodes provide a multilevel index.

A regular database index focuses on ordering its keys on a single dimension. For instance, we can create an index on one of the attributes like zip code in our database table. This will help us to query all locations with a certain zip code or within a range of zip codes.

6.2. Spatial Database Index

In geospatial applications, we're often interested in nearest neighbor or range queries. For instance, we may want to find all locations within 10 miles of a particular point. A regular database index does not prove to be very useful here. In fact, there are other more suitable data structures to build spatial indices.

One of the most commonly used data structures is the R-tree. The R-tree was first proposed by Antonin Guttman in 1984 and is suitable for storing spatial objects like locations. The fundamental idea behind R-tree is to group nearby objects and represent them with their minimum bounding rectangle in the next higher level of the tree:

![]()

For most operations, an R-tree is not very different from a B-tree. The key difference is in using the bounding rectangles to decide whether to search inside a subtree or not. For better performance, we should ensure that rectangles don't cover too much empty space and that they don't overlap too much. Most interestingly, an R-tree can extend to cover three or even more dimensions!

Another data structure for building a spatial index is the Kd-tree, which is a slight variation of the R-tree. The Kd-tree splits the data space into two instead of partitioning it into multiple rectangles. Hence, the tree nodes in a Kd-tree represent separating planes and not bounding boxes. While Kd-tree proves to be easier to implement and is faster, it's not suitable for data that is always changing.

The key idea behind these data structures is basically partitioning data into axis-aligned regions and storing them in tree nodes. In fact, there are quite a few other such data structures that we can use, like BSP-tree and R*-tree.

7. Databases with Native Support

We've already seen how spatial data differ from regular data and why they need special treatment. Hence, what we require to build a geospatial application is a database that can natively support storing spatial data types and that can perform spatial queries efficiently. We refer to such a database management system as a spatial database management system.

Almost all mainstream databases have started to provide some level of support for spatial data. This includes some popular database management systems like MySQL, Microsoft SQL Server, PostgreSQL, Redis, MongoDB, Elasticsearch, and Neo4J. However, there are some purpose-built spatial databases available as well, such as GeoMesa, PostGIS, and Oracle Spatial.

7.1. Redis

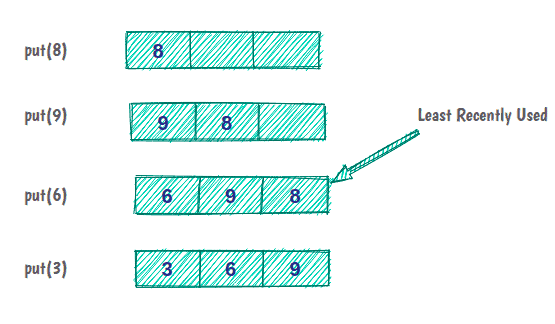

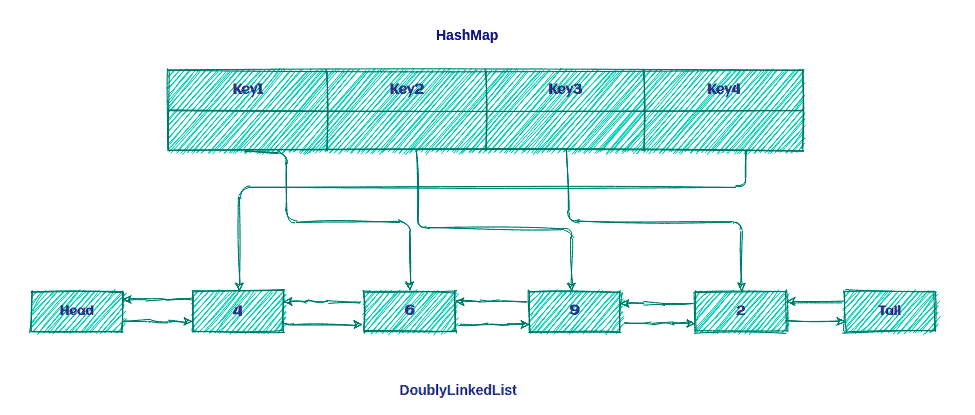

Redis is an in-memory data structure store that we can use as a database, a cache, or a message broker. It can minimize the network overhead and latency as it performs operations efficiently in memory. Redis supports various data structures like Hash, Set, Sorted Set, List, and String. Of particular interest for us are Sorted Sets that add an ordered view to members, sorted by scores.

Geospatial indexing is implemented in Redis using Sorted Sets as the underlying data structure. Redis actually encodes the latitude and longitude into the score of the Sorted Set using the geohash algorithm. Geo Set is the key data structure implemented with a Sorted Set and supports geospatial data in Redis at a more abstract level.

Redis provides simple commands to work with the geospatial index and perform common operations like creating new sets and adding or updating members in the set. For instance, to create a new set and add members to it from the command line, we can use the GEOADD command:

GEOADD locations 20.99 65.44 Vehicle-1 23.99 55.45 Vehicle-2

Here, we're adding the location of a few vehicles to a Geo Set called “locations”.

Redis also provides several ways to read the index, like ZRANGE, ZSCAN, and GEOPOS. Further, we can use the command GEODIST to compute the distance between the members in a set. But the most interesting commands are those that allow us to search the index by location. For instance, we can use the command GEORADIUSYMEMBER to search members that are within a radius range of a particular member:

GEORADIUSBYMEMBER locations Vehicle-3 1000 m

Here, we're interested in finding all other vehicles within a one-kilometer radius of the third vehicle.

Redis is quite powerful and simple in providing support for storing a large volume of geospatial data and performing low latency geospatial queries.

7.2. MongoDB

MongoDB is a document-oriented database management system that uses JSON-like documents with an optional schema to store data. It provides several ways to search documents, like field queries, range queries, and regular expressions. We can even index the documents to primary and secondary indices. Moreover, MongoDB with sharding and replication provides high availability and horizontal scalability.

We can store spatial data in MongoDB either as GeoJSON objects or as legacy coordinate pairs. GeoJSON objects are useful for storing location data over an Earth-like surface, whereas legacy coordinate pairs are useful for storing data that we can represent in a Euclidean plane.

To specify the GeoJSON data, we can use an embedded document with a field named type to indicate GeoJSON object type and another field named coordinates to indicate the object's coordinates:

db.vehicles.insert( {

name: "Vehicle-1",

location: { type: "Point", coordinates: [ 83.97, 70.77 ] }

} )

Here, we're adding a document in the collection named vehicles. The embedded document is a GeoJSON object of the type Point with its longitude and latitude coordinates.

Further, MongoDB provides multiple geospatial index types like 2dsphere and 2d to support geospatial queries. A 2dsphere supports queries that calculate geometries on an Earth-like sphere:

db.vehicles.createIndex( { location : "2dsphere" } )

Here, we're creating a 2dsphere index on the location field of our collection.

Lastly, MongoDB offers several geospatial query operators to facilitate searching through the geospatial data. Some of the operators are geoIntersects, geoWithin, near, and nearSphere. These operators can interpret geometry on a flat surface or a sphere.

For instance, let's see how we can use the near operator:

db.places.find(

{

location:

{ $near:

{

$geometry: { type: "Point", coordinates: [ 93.96, 30.78 ] },

$minDistance: 500,

$maxDistance: 1000

}

}

}

)

Here, we're searching for documents that are at least 500 meters and at most 1,000 meters from the mentioned GeoJSON Point.

The power of representing JSON-like data with flexible schema, scale efficiency, and inherent support for geospatial data makes MongoDB quite suitable for geospatial applications.

7.3. PostGIS

PostgreSQL is a relational database management system that provides SQL compliance and features ACID transactions. It's quite versatile in supporting a wide variety of workloads. PostgreSQL includes built-in support for synchronous replication and built-in support for regular B-tree and hash table indices. PostGIS is a spatial database extender for PostgreSQL.

Basically, PostGIS adds support for storing geospatial data in PostgreSQL and executing location queries in SQL. It adds geometry types for Points, LineStrings, Polygons, and more. Further, it provides spatial indices using R-tree-over-GiST (Generalized Search Tree). Lastly, it also adds spatial operators for geospatial measurements and set operations.

We can create a database in PostgreSQL as always and enable the PostGIS extension to start using it. Fundamentally, data is stored in rows and columns, but PostGIS introduces a geometry column with data in a specific coordinate system defined by a Spatial Reference Identifier (SRID). PostGIS also adds many options for loading different GIS data formats.

PostGIS supports both geometry and geography data types. We can use regular SQL queries to create a table and insert a geography data type:

CREATE TABLE vehicles (name VARCHAR, geom GEOGRAPHY(Point));

INSERT INTO vehicles VALUES ('Vehicle-1', 'POINT(44.34 82.96)');

Here, we've created a new table “vehicles” and have added the location of a particular vehicle using the Point geometry.

PostGIS adds quite a few spatial functions to perform spatial operations on the data. For instance, we can use spatial function ST_AsText to read geometry data as text:

SELECT name, ST_AsText(geom) FROM vehicles;

Of course, for us, a more useful query is to look for all vehicles in the near vicinity of a given point:

SELECT geom FROM vehicles

WHERE ST_Distance( geom, 'SRID=4326;POINT(43.32 68.35)' ) < 1000

Here, we're searching for all vehicles within a one-kilometer radius of the provided point.

PostGIS adds the spatial capabilities to PostgreSQL, allowing us to leverage well-known SQL semantics for spatial data. Moreover, we can benefit from all the advantages of using PostgreSQL.

8. Industry Standards and Specifications

While we've seen that the support for spatial data is growing in the database layer, what about the application layer? For building geospatial applications, we need to write code that is capable of handling spatial data efficiently.

Moreover, we need standards and specifications to represent and transfer spatial data between different components. Further, language bindings can support us in building a geospatial application in a language like Java.

In this section, we'll cover some of the standardization that has taken place in the field of geospatial applications, the standards they have produced, and the libraries that are available for us to use.

8.1. Standardization Efforts

There has been a lot of development in this area, and through the collaborative efforts of multiple organizations, several standards, and best practices have been established. Let's first go through some of the organizations contributing to the advancement and standardization of geospatial applications across different industries.

Environmental Systems Research Institute (ESRI) is perhaps one of the oldest and largest international suppliers of Geographic Information System (GIS) software and geodatabase management applications. They develop a suite of GIS software under the name ArcGIS targeted for multiple platforms like desktop, server, and mobile. It has also established and promotes data formats for both vector and raster data types — for instance, Shapefile, File Geodatabase, Esri Grid, and Mosaic.

Open Geospatial Consortium (OGC) is an international industry consortium of more than 300 companies, government agencies, and universities participating in a consensus process to develop publicly available interface specifications. These specifications enable complex spatial information and services accessible and useful to all kinds of applications. Currently, the OGC standard comprises more than 30 standards, including Spatial Reference System Identifier (SRID), Geography Markup Language (GML), and Simple Features – SQL (SFS).

Open Source Geospatial Foundation (OSGeo) is a non-profit, non-government organization that supports and promotes the collaborative development of open geospatial technologies and data. It promotes geospatial specifications like Tile Map Service (TMS). Moreover, it also helps in the development of several geospatial libraries like GeoTools and PostGIS. It also works on applications like QGIS, a desktop GIS for data viewing, editing, and analysis. These are just a few of the projects that OSGeo promotes under its umbrella.

8.2. Geospatial Standards: OGC GeoAPI

The GeoAPI Implementation Standard defines, through the GeoAPI library, a Java language API including a set of types and methods that we can use to manipulate geographic information. The underlying structure of the geographic information should follow the specification adopted by the Technical Committee 211 of the International Organization for Standardization (ISO) and by the OGC.

GeoAPI provides Java interfaces that are implementation neutral. Before we can actually use GeoAPI, we have to pick from a list of third-party implementations. We can perform several geospatial operations using the GeoAPI. For instance, we can get a Coordinate Reference System (CRS) from an EPSG code. Then, we can perform a coordinate operation like map projection between a pair of CRSs:

CoordinateReferenceSystem sourceCRS =

crsFactory.createCoordinateReferenceSystem("EPSG:4326"); // WGS 84

CoordinateReferenceSystem targetCRS =

crsFactory.createCoordinateReferenceSystem("EPSG:3395"); // WGS 84 / World Mercator

CoordinateOperation operation = opFactory.createOperation(sourceCRS, targetCRS);

double[] sourcePt = new double[] {

27 + (59 + 17.0 / 60) / 60, // 27°59'17"N

86 + (55 + 31.0 / 60) / 60 // 86°55'31"E

};

double[] targetPt = new double[2];

operation.getMathTransform().transform(sourcePt, 0, targetPt, 0, 1);

Here, we're using the GeoAPI to perform a map projection to transform a single CRS point.

There are several third-party implementations of GeoAPI available as wrappers around existing libraries — for instance, NetCDF Wrapper, Proj.6 Wrapper, and GDAL Wrapper.

8.3. Geospatial Libraries: OSGeo GeoTools

GeoTools is an OSGeo project that provides an open-source Java library for working with geospatial data. The GeoTools data structure is basically based on the OGC specifications. It defines interfaces for key spatial concepts and data structures. It also comes with a data access API supporting feature access, a stateless low memory rendered, and a parsing technology using XML schema to bind to GML content.

To create geospatial data in GeoTools, we need to define a feature type. The simplest way is to use the class SimpleFeatureType:

SimpleFeatureTypeBuilder builder = new SimpleFeatureTypeBuilder();

builder.setName("Location");

builder.setCRS(DefaultGeographicCRS.WGS84);

builder

.add("Location", Point.class);

.length(15)

.add("Name", String.class);

SimpleFeatureType VEHICLE = builder.buildFeatureType();

Once we have our feature type ready, we can use this to create the feature with SimpleFeatureBuilder:

SimpleFeatureBuilder featureBuilder = new SimpleFeatureBuilder(VEHICLE);

DefaultFeatureCollection collection = new DefaultFeatureCollection();

GeometryFactory geometryFactory = JTSFactoryFinder.getGeometryFactory(null);

We're also instantiating the collection to store our features and the GeoTools factory class to create a Point for the location. Now, we can add specific locations as features to our collection:

Point point = geometryFactory.createPoint(new Coordinate(13.46, 42.97));

featureBuilder.add(point);

featureBuilder.add("Vehicle-1");

collection.add(featureBuilder.buildFeature(null))

This is just scratching the surface of what we can do with the GeoTools library. GeoTools provides support for working with both vector and raster data types. It also allows us to work with data in a standard format like shapefile.

9. Conclusion

In this tutorial, we went through the basics of building a geospatial application. We discussed the nature of and challenges in building such an application. This helped us understand the different types of spatial data and data structures we can make use of.

Further, we went through some of the open-source databases with native support for storing spatial data, building spatial indices, and performing spatial operations. Lastly, we also covered some of the industry collaborations driving the standardization efforts in geospatial applications.

The post

Architecture of a Geospatial Application with Java first appeared on

Baeldung.

![]()

![]()