1. Introduction

In this tutorial, we’ll learn how to schedule tasks to run only once. Scheduled tasks are common for automating processes like generating reports or sending notifications. Typically, we set these to run periodically. Still, there are scenarios where we might want to schedule a task to execute only once at a future time, such as initializing resources or performing a data migration.

We’ll explore several ways to schedule tasks to run only once in a Spring Boot application. From using the @Scheduled annotation with an initial delay to more flexible approaches like TaskScheduler and custom triggers, we’ll learn how to ensure that our tasks are executed just once, with no unintended repetitions.

2. TaskScheduler With Start-Time Only

While the @Scheduled annotation provides a straightforward way to schedule tasks, it’s limited in terms of flexibility. When we need more control over task planning (especially for one-time executions), Spring’s TaskScheduler interface offers a more versatile alternative. Using TaskScheduler, we can programmatically schedule tasks with a specified start time, providing greater flexibility for dynamic scheduling scenarios.

The simplest method within TaskScheduler allows us to define a Runnable task and an Instant, representing the exact time we want it to execute. This approach enables us to schedule tasks dynamically without relying on fixed annotations. Let’s write a method for scheduling a task to run at a specific point in the future:

```java
private TaskScheduler scheduler = new SimpleAsyncTaskScheduler();

public void schedule(Runnable task, Instant when) {
    scheduler.schedule(task, when);
}
```

Apart from schedule(Runnable, Trigger), which we’ll revisit later, the other scheduling methods in TaskScheduler, such as scheduleAtFixedRate() and scheduleWithFixedDelay(), are meant for periodic executions, so this method is the natural fit for one-off tasks. Also, note that we’re using a SimpleAsyncTaskScheduler for demonstration purposes, but we can switch to any other implementation that suits the tasks we need to run.
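As a rough mental model, scheduling once at an Instant boils down to a single delayed execution whose delay is the time remaining until that Instant. The sketch below is an analogy using only the JDK’s ScheduledExecutorService, not Spring’s implementation, and InstantScheduler and its delayMillis() helper are hypothetical names chosen for illustration:

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

// Plain-JDK sketch (hypothetical class, not Spring's implementation):
// running once at an Instant is a single delayed execution.
class InstantScheduler {

    private final ScheduledExecutorService executor =
        Executors.newSingleThreadScheduledExecutor();

    // Time remaining until 'when', clamped to zero so a past Instant runs right away.
    static long delayMillis(Instant now, Instant when) {
        return Math.max(0, Duration.between(now, when).toMillis());
    }

    // Submits the task once; nothing reschedules it afterwards.
    ScheduledFuture<?> schedule(Runnable task, Instant when) {
        return executor.schedule(task, delayMillis(Instant.now(), when), TimeUnit.MILLISECONDS);
    }

    // Stops accepting new tasks; already-scheduled delayed tasks still run by default.
    void shutdown() {
        executor.shutdown();
    }
}
```

For example, a target of Instant.now().plusSeconds(1) yields a delay of about 1000 ms, while an Instant in the past yields zero, so the task runs as soon as possible.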

Scheduled tasks are challenging to test, but we can use a CountDownLatch to wait until the time we chose and check that the task actually ran. Let’s use our latch’s countDown() method as the task and schedule it for one second in the future:

```java
@Test
void whenScheduleAtInstant_thenExecutesOnce() throws InterruptedException {
    CountDownLatch latch = new CountDownLatch(1);

    scheduler.schedule(latch::countDown,
      Instant.now().plus(Duration.ofSeconds(1)));

    boolean executed = latch.await(5, TimeUnit.SECONDS);
    assertTrue(executed);
}
```

We’re using the version of latch.await() that accepts a timeout, so we never end up waiting indefinitely. If it returns true, the latch reached zero, which confirms that the task ran within the timeout. Note that the latch alone can’t prove the task ran only once, since further countDown() calls have no effect after the count reaches zero.
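Because countDown() calls on a latch that has already reached zero are silently ignored, a stricter check can also count executions and wait a short grace period for any stray repeat. This is a sketch under those assumptions: to stay self-contained, it uses the JDK’s ScheduledExecutorService in place of Spring’s TaskScheduler, and ExactlyOnceCheck is a hypothetical name:

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: the latch answers "did it run?", the counter answers "how often?".
// A JDK ScheduledExecutorService stands in for Spring's TaskScheduler.
class ExactlyOnceCheck {

    // Returns the number of executions observed, or -1 if the task never ran in time.
    static int countExecutions(long delayMillis, long graceMillis) {
        ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();
        CountDownLatch latch = new CountDownLatch(1);
        AtomicInteger executions = new AtomicInteger();
        try {
            executor.schedule(() -> {
                executions.incrementAndGet();
                latch.countDown();
            }, delayMillis, TimeUnit.MILLISECONDS);

            if (!latch.await(5, TimeUnit.SECONDS)) {
                return -1; // the task never ran within the timeout
            }
            // Keep waiting a little, so a second execution would still be counted.
            Thread.sleep(graceMillis);
            return executions.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        } finally {
            executor.shutdownNow();
        }
    }
}
```

In the Spring test, the same idea means wrapping the task passed to scheduler.schedule() so it increments a counter, then asserting the counter equals 1 after the grace period.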

3. Using @Scheduled With Initial Delay Only

One of the simplest ways to schedule a one-time task in Spring is by using the @Scheduled annotation with an initial delay and leaving out the fixedDelay or fixedRate attributes. Typically, we use @Scheduled to run tasks at regular intervals, but when we specify only an initialDelay, the task will execute once after the specified delay and not repeat:

```java
@Scheduled(initialDelay = 5000)
public void doTaskWithInitialDelayOnly() {
    // ...
}
```

In this case, our method will run 5 seconds (5000 milliseconds) after the component containing this method initializes. Since we didn’t specify any rate attributes, the method won’t repeat after this initial execution. This approach is useful when we need to run a task just once after the application starts or when we want to delay the execution of a task for some reason.

For example, this is handy for running CPU-intensive tasks a few seconds after the application has started, allowing other services and components to initialize properly before consuming resources. However, one limitation of this approach is that the scheduling is static: we can’t adjust the delay or execution time at runtime. It’s also worth noting that the @Scheduled annotation only takes effect on methods of Spring-managed beans, such as components or services, and only when scheduling is enabled, for example with @EnableScheduling on a configuration class.
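The difference between an initial delay alone and an initial delay combined with fixedDelay can be illustrated outside Spring. This sketch is only an analogy built on the JDK’s ScheduledExecutorService, not how Spring implements @Scheduled, and OneShotVersusFixedDelay is a hypothetical class name:

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// JDK analogy (hypothetical class): an initial delay alone behaves like a one-shot
// schedule(), while adding a fixed delay behaves like scheduleWithFixedDelay().
class OneShotVersusFixedDelay {

    // Runs both styles for the given window and returns {oneShotRuns, periodicRuns}.
    static int[] compare(long windowMillis) {
        ScheduledExecutorService executor = Executors.newScheduledThreadPool(2);
        AtomicInteger oneShot = new AtomicInteger();
        AtomicInteger periodic = new AtomicInteger();
        try {
            // Comparable to @Scheduled(initialDelay = 50): runs once after 50 ms.
            executor.schedule(() -> { oneShot.incrementAndGet(); }, 50, TimeUnit.MILLISECONDS);
            // Comparable to @Scheduled(initialDelay = 50, fixedDelay = 50): keeps repeating.
            executor.scheduleWithFixedDelay(() -> { periodic.incrementAndGet(); },
                50, 50, TimeUnit.MILLISECONDS);
            Thread.sleep(windowMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            executor.shutdownNow();
        }
        return new int[] {oneShot.get(), periodic.get()};
    }
}
```

Over a half-second window, the one-shot counter stays at 1 while the fixed-delay counter keeps climbing, which mirrors why leaving out the rate attributes yields a single execution.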

3.1. Before Spring 6

Before Spring 6, it wasn’t possible to leave out the delay or rate attributes, so our only option was to specify a theoretically unreachable delay:

```java
@Scheduled(initialDelay = 5000, fixedDelay = Long.MAX_VALUE)
public void doTaskWithIndefiniteDelay() {
    // ...
}
```

In this example, the task executes after the initial 5-second delay, and the next execution wouldn’t happen for roughly 292 million years, effectively making it a one-time task. While this approach works, it’s a workaround that isn’t ideal if we need flexibility or cleaner code.
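To put fixedDelay = Long.MAX_VALUE in perspective, the following sketch does the back-of-the-envelope arithmetic with java.time.Duration, treating the value as milliseconds (the unit @Scheduled uses by default) and ignoring leap days. MaxDelayInYears is a hypothetical name:

```java
import java.time.Duration;

// Worked arithmetic (hypothetical class): how long is Long.MAX_VALUE milliseconds?
class MaxDelayInYears {

    static long approximateYears() {
        long days = Duration.ofMillis(Long.MAX_VALUE).toDays();
        // Dividing by 365 ignores leap days; the order of magnitude is unaffected.
        return days / 365; // roughly 292 million
    }
}
```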

4. Creating a PeriodicTrigger Without a Next Execution

Our final option is to extend PeriodicTrigger with a custom trigger. Compared with passing an Instant directly to TaskScheduler, this pays off when we need reusable, more complex scheduling logic. We can override nextExecution() so that it returns the next execution time only if the task hasn’t run yet.

Let’s start by defining a period and initial delay:

```java
public class OneOffTrigger extends PeriodicTrigger {

    public OneOffTrigger(Instant when) {
        super(Duration.ofSeconds(0));

        Duration difference = Duration.between(Instant.now(), when);
        setInitialDelay(difference);
    }

    // ...
}
```

Since we want this trigger to fire only once, the period doesn’t matter, but the constructor requires one, so we pass zero. Then, because setInitialDelay() expects a Duration, we calculate the difference between the moment we want our task to execute and the current time.

Then, we override nextExecution() to check the last completion time in the trigger context:

```java
@Override
public Instant nextExecution(TriggerContext context) {
    if (context.lastCompletion() == null) {
        return super.nextExecution(context);
    }
    return null;
}
```

A null last completion means the task hasn’t run yet, so we delegate to the default implementation, which computes the first execution time from the initial delay. Otherwise, we return null, which tells the scheduler not to fire again and makes this a trigger that executes only once. Finally, let’s create a method to use it:
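Spring’s Trigger contract treats a null return from nextExecution() as “don’t fire again”. To make that contract concrete without a Spring context, here’s a simplified model under stated assumptions: SimpleTrigger, OneOffSimpleTrigger, and TriggerDriver are hypothetical, illustrative types rather than Spring classes, and the driver is a naive single-threaded loop, not how Spring’s schedulers work internally:

```java
import java.time.Duration;
import java.time.Instant;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified trigger: given the last completion time (null before the
// first run), return the next execution time, or null to stop scheduling.
interface SimpleTrigger {
    Instant nextExecution(Instant lastCompletion);
}

// Mirrors the OneOffTrigger idea: fire once at 'when', then never again.
class OneOffSimpleTrigger implements SimpleTrigger {
    private final Instant when;

    OneOffSimpleTrigger(Instant when) {
        this.when = when;
    }

    @Override
    public Instant nextExecution(Instant lastCompletion) {
        return lastCompletion == null ? when : null;
    }
}

class TriggerDriver {

    // Naive driver: asks the trigger for the next time, sleeps until then,
    // runs the task, and stops as soon as the trigger returns null.
    static void run(Runnable task, SimpleTrigger trigger) throws InterruptedException {
        Instant lastCompletion = null;
        Instant next;
        while ((next = trigger.nextExecution(lastCompletion)) != null) {
            long waitMillis = Duration.between(Instant.now(), next).toMillis();
            if (waitMillis > 0) {
                Thread.sleep(waitMillis);
            }
            task.run();
            lastCompletion = Instant.now();
        }
    }

    // Convenience for the example: returns how many times the task ran.
    static int countRuns(SimpleTrigger trigger) {
        AtomicInteger runs = new AtomicInteger();
        try {
            run(() -> runs.incrementAndGet(), trigger);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return runs.get();
    }
}
```

Because the loop only continues while the trigger returns a non-null time, a trigger that returns null after the first completion runs its task exactly once.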

```java
public void schedule(Runnable task, PeriodicTrigger trigger) {
    scheduler.schedule(task, trigger);
}
```

4.1. Testing the PeriodicTrigger

Now, we can write a simple test to check that our trigger behaves as expected. In this test, we use a CountDownLatch to track whether the task executes. We schedule the task with our OneOffTrigger and verify that it runs within the timeout:

```java
@Test
void whenScheduleWithRunOnceTrigger_thenExecutesOnce() throws InterruptedException {
    CountDownLatch latch = new CountDownLatch(1);

    scheduler.schedule(latch::countDown, new OneOffTrigger(
      Instant.now().plus(Duration.ofSeconds(1))));

    boolean executed = latch.await(5, TimeUnit.SECONDS);
    assertTrue(executed);
}
```

5. Conclusion

In this article, we explored solutions for scheduling a task to run only once in a Spring Boot application. We covered the most straightforward option, using the @Scheduled annotation with only an initial delay, as well as more flexible solutions, like using the TaskScheduler for dynamic scheduling and creating custom triggers that ensure tasks execute only once.

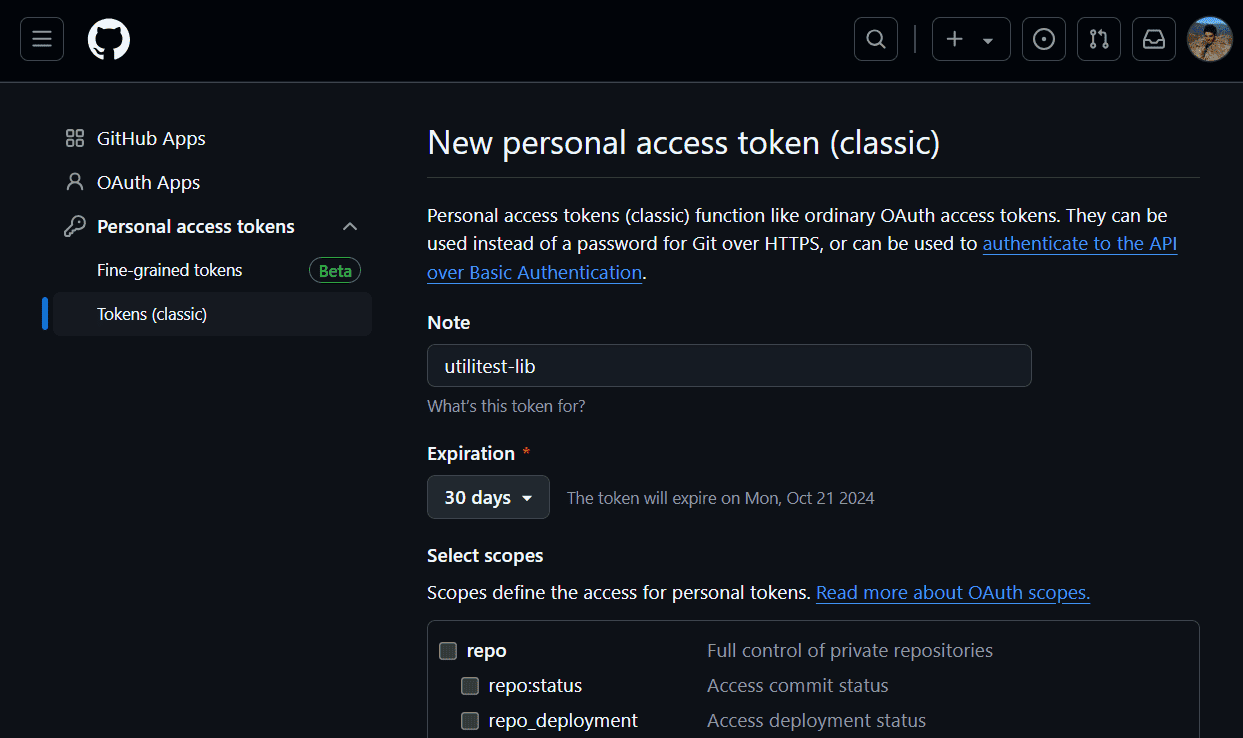

Each approach provides a different level of control, so we should choose the one that best fits our use case. As always, the source code is available over on GitHub.